So, you want to track prices on Best Buy. Solid choice. It helps you track deals, spot inventory shifts, and even predict trends if you want to go there.

You’ve got two ways in: use a ready-made tool that handles the basics or build your own tool that tracks exactly what you care about. To make sure it keeps working without getting blocked, just wire in some proxies. We’ll show you how.

What is a Best Buy price tracker?

A Best Buy price tracking tool monitors product prices, then lets you know when something changes, like a price drop, a restock, or a pattern worth watching.
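At its core, the "lets you know when something changes" part is a comparison between the last recorded price and the new one. Here's a minimal sketch, assuming prices have already been parsed into floats and that a missing price means the item is unavailable. The function name classify_change is hypothetical and not part of any tool covered below.

```python
from typing import Optional

def classify_change(previous: Optional[float], current: Optional[float]) -> str:
    """Label what happened between two price checks (hypothetical helper)."""
    if current is None:
        # No price on the page: out of stock, or a hidden/blocked price
        return "unavailable"
    if previous is None:
        # First sighting, or the item just came back in stock
        return "new_or_restock"
    if current < previous:
        return "drop"
    if current > previous:
        return "rise"
    return "unchanged"

history = [None, 499.99, 499.99, 459.99, None, 479.99]
for before, after in zip(history, history[1:]):
    print(f"{before} -> {after}: {classify_change(before, after)}")
```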

But what powers it is a scraper. One that loads the page, reads the price, saves it, and comes back again on schedule.

Top Best Buy price tracking tools and apps

Remember that first option we talked about, using a ready-made tool that handles the basics? Let’s look at what’s actually out there:

Visualping

Let’s start with Visualping. It gives you three ways to track a page. You can have it take screenshots of a selected area, like the price section, and compare them pixel by pixel. Or you can have it pull the raw HTML or text, then check for line-by-line changes. You can even target a specific DOM element using a CSS selector or XPath.
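The text mode boils down to a line-by-line diff between two snapshots. Python's standard difflib shows the idea. This only illustrates the technique; it is not Visualping's actual implementation, and the snapshot text is made up.

```python
import difflib

def changed_lines(old_text: str, new_text: str) -> list[str]:
    """Return the removed ("- ") and added ("+ ") lines between two snapshots."""
    diff = difflib.ndiff(old_text.splitlines(), new_text.splitlines())
    # ndiff also emits unchanged ("  ") and hint ("? ") lines; drop them
    return [line for line in diff if line.startswith(("- ", "+ "))]

before = "Nintendo Switch 2\n$499.99\nIn stock"
after = "Nintendo Switch 2\n$459.99\nIn stock"
print(changed_lines(before, after))
```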

For most sites, that’s good enough. However, this is Best Buy, and Best Buy doesn’t just serve HTML and wish you luck. Its anti-scraping setup is built to punish tools like this. You might get a clean check or two, then the lag hits, the price vanishes, and the page pretends everything's fine while hiding the real data. The problem is that Visualping won’t always know that it’s been blocked.

Hexowatch

Hexowatch takes things up a level. It gives you 13 monitor types, from DOM elements and visual snapshots to keyword triggers, full HTML comparisons, sitemap changes, tech stack shifts, uptime monitoring, API response checks, WHOIS updates, and even backlinks. You can tweak sensitivity, schedule scans, and, most importantly, rotate proxies. That puts it miles ahead of basic tools.

But it reacts to page changes instead of adapting to them. If Best Buy serves a fake price or hides the right element behind a delayed script, Hexowatch will still log the page and tell you everything looks fine. It won't warn you that it missed something. That makes it good enough for most websites, but not nearly bulletproof enough for Best Buy.

Price Watch for Best Buy (iOS & Android)

If you’re tracking one or two Best Buy products casually from your phone, this app might do the trick. Price Watch for Best Buy works on both iOS and Android. You drop in a product URL or use the Share Sheet, and it tracks that item for price drops.

Users report that alerts sometimes don't go off. There's also no proxy rotation, no content verification, and no warning when Best Buy changes the rules mid-page.

PromptCloud

PromptCloud is a full-service data-as-a-service provider that builds custom Best Buy crawlers. You share the SKUs, data fields, and frequency; they send a sample; you review; and then they deploy a polished scraper via API, CrawlBoard, or CSV.

If you just want clean pricing data without managing code, proxies, or maintenance, it's a good choice. You point them to the Best Buy product prices you want tracked, and they give you the results.

Brandly360

Brandly360 gives you a clean dashboard that tracks price changes across Best Buy, Amazon, and other e-commerce sites. It lets you monitor product pages, banner promos, stock shifts, and digital shelf visibility with automated reports and alerts.

But it is not cheap. Plans start around $420 per month. That might make sense for big brands chasing e-commerce intelligence. If you are trying to track a few Best Buy prices once a day or just need basic alerts without heavy infrastructure, this is overkill. The price tag does not scale down well for lightweight or low-frequency use cases.

How to build your own Best Buy price tracker

Let’s not pretend Best Buy is just another e-commerce site. It is not. You are not going to scrape it with a basic requests call and a few BeautifulSoup selectors.

Best Buy is hostile. It flags anything that feels automated. Load the page too fast, skip steps, or try to read content before the JavaScript settles, and you will get blocked. Not every time, but often enough that basic tools stop being useful.

That is why we are building this differently. Our tracker starts with a Google search. Just like a real user would. It finds the right Best Buy product page, loads it in a full browser, waits for the dynamic content, and checks the price. If it meets your threshold, it sends you a heads-up.
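The "check the price" step needs two small pieces: turning the displayed price text into a number, and comparing it with your threshold. Here's a minimal sketch, assuming the price arrives as text like "$1,299.99" and reading "meets your threshold" as at or below it. The helper names parse_price and meets_threshold are hypothetical.

```python
import re
from typing import Optional

# A dollar sign, then digits with optional thousands commas and cents
PRICE_RE = re.compile(r"\$\s*([0-9][0-9,]*(?:\.[0-9]{2})?)")

def parse_price(text: str) -> Optional[float]:
    """Pull the first dollar amount out of a price label, or None if absent."""
    match = PRICE_RE.search(text)
    if not match:
        return None
    return float(match.group(1).replace(",", ""))

def meets_threshold(price: Optional[float], threshold: float) -> bool:
    """True when a real price was found and it is at or below the target."""
    return price is not None and price <= threshold

label = "Your price for this item is $449.99"
price = parse_price(label)
print(price, meets_threshold(price, 460.00))  # 449.99 True
```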

The system rotates proxies, picks new device fingerprints, and interacts with the page in a way that feels human. It scrolls a little, waits, moves the mouse, and reads the price like a real shopper.

Anything less stops working sooner than you think. Let’s get to it.

Step 1: Pre-requisites

You’ll need tools, access, and a bit of setup to make it all work. So before we go anywhere, we’ll walk through everything you need.

Python 3 installed

We’re running this in Python, so first things first, make sure Python 3 is installed. Open your terminal and run:

python3 --version

If you see something like Python 3.x, you’re good. But if you get an error or the version starts with a 2, go to python.org and download the latest release. As of now, that’s Python 3.13.5.
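If you'd like the script itself to refuse to run on an old interpreter, a short guard at the top handles it. This is optional, and the 3.8 minimum below is our own assumption, not a documented requirement of the tools in this guide.

```python
import sys

def python_ok(minimum=(3, 8)) -> bool:
    """True if the running interpreter is at least `minimum` (major, minor)."""
    return sys.version_info[:2] >= minimum

if not python_ok():
    sys.exit(f"Python 3.8+ required, found {sys.version.split()[0]}")
print("Python version OK:", sys.version.split()[0])
```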

Google Chrome (latest)

This tracker uses a stealth browser that mimics a real Chrome session. It checks your local build and mirrors it to avoid mismatch flags. Make sure you have the latest version of Chrome installed. If it's outdated, you risk version conflicts and detection.

To check your Chrome version:

- Open Chrome

- Click the three-dot icon

- Head to ‘Help’

- Click ‘About Google Chrome’

You’ll see the version number right there. That’s the one our undetected driver will sync with.
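If undetected-chromedriver ever picks a driver that doesn't match your browser, you can pin it to your Chrome major version: uc.Chrome accepts a version_main argument for this. The helper below (a hypothetical name) pulls the major number out of the version string shown on the About page; the example string is illustrative.

```python
import re

def chrome_major_version(version_string: str) -> int:
    """Extract the major version from e.g. 'Version 126.0.6478.127 (Official Build)'."""
    match = re.search(r"(\d+)\.\d+\.\d+\.\d+", version_string)
    if not match:
        raise ValueError(f"Unrecognized Chrome version: {version_string!r}")
    return int(match.group(1))

major = chrome_major_version("Version 126.0.6478.127 (Official Build) (64-bit)")
print(major)  # 126

# Later, when launching the browser (requires Chrome and undetected-chromedriver):
# import undetected_chromedriver as uc
# driver = uc.Chrome(version_main=major)
```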

Undetected-chromedriver

Best Buy layers its detection across fingerprints, device behavior, timing, and how content loads. Undetected-chromedriver was made for that kind of inspection. It reshapes how Chrome launches, how it reports its identity, and how it behaves under scrutiny, covering signals like:

- navigator.webdriver

- Browser plugin list

- Language settings

- Canvas and WebGL render paths

- Chrome runtime artifacts

- Window dimensions and zoom

- JS patch injection on load

You need this level of cover. Otherwise, Best Buy will detect your activity before you scrape anything. To install undetected-chromedriver, run this command in your terminal:

pip install undetected-chromedriver

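This gets you the brains of the operation. For the hands that do the lifting, undetected-chromedriver normally downloads and patches a ChromeDriver that matches your Chrome on first run. On Debian or Ubuntu, you can also install a system driver as a fallback: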

sudo apt install chromium-chromedriver

Selenium

The next thing we need is a browser automation framework, something that can drive Chrome with or without a visible window. If you want a full breakdown of headless browsers, check out our guide. Here's the thing: Playwright, Puppeteer, and Selenium all work. You could get decent results with any of them, at least on paper.

But Best Buy checks your browser settings, timing, behavior, and how scripts render. That is why we are using Selenium. Undetected-chromedriver is built on top of it and patches the ChromeDriver binary and Chrome's launch settings, not just the session. Playwright and Puppeteer can work, but their stealth relies on plugins. That's not enough here.

Run the following command to install Selenium:

pip install selenium

MarsProxies residential proxies

If you’re thinking you might get away with using one IP for all your tracking, stop. That’s not how this works. Best Buy’s system is designed to cut off repeat traffic. If you don’t rotate IPs, it’s just a matter of when Best Buy will block your script.

You want residential U.S. proxies in different locations. We’re using 10 here, but if your tracker needs to watch multiple products, you’ll want 5 per product minimum. That gives you the buffer you need when one IP gets temporarily flagged.

Undetected-chromedriver can work with proxies, but Chrome's --proxy-server flag doesn't accept a username and password, so credential-based authentication is awkward to script. Skip it and whitelist your machine's IP with your proxy provider instead. MarsProxies makes that easy; we've covered it in our guide.

Now that your tools are lined up and the browser’s ready to blend in, it’s time to write the code.

Step 2: Create the script file and set up your folder

Start by opening your terminal and navigating to the location where you want to store the script and its dependencies. In this case, we’ll use the desktop:

cd Desktop

Now, create a new folder to hold your tracker. Keep the quotes; without them, the shell creates four separate folders:

mkdir "Best Buy Price Tracking"

Move into that folder:

cd "Best Buy Price Tracking"

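Then, create your Python script file. This command is for the Windows Command Prompt; on macOS or Linux, run touch Best-Buy-Price-Tracker.py instead: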

echo.>Best-Buy-Price-Tracker.py

That’s it. Now open the file in your preferred code editor. We’re ready to start writing the tracker.

Step 3: Import modules and configure your environment

Every part of your tracker depends on having the right tools loaded cleanly.

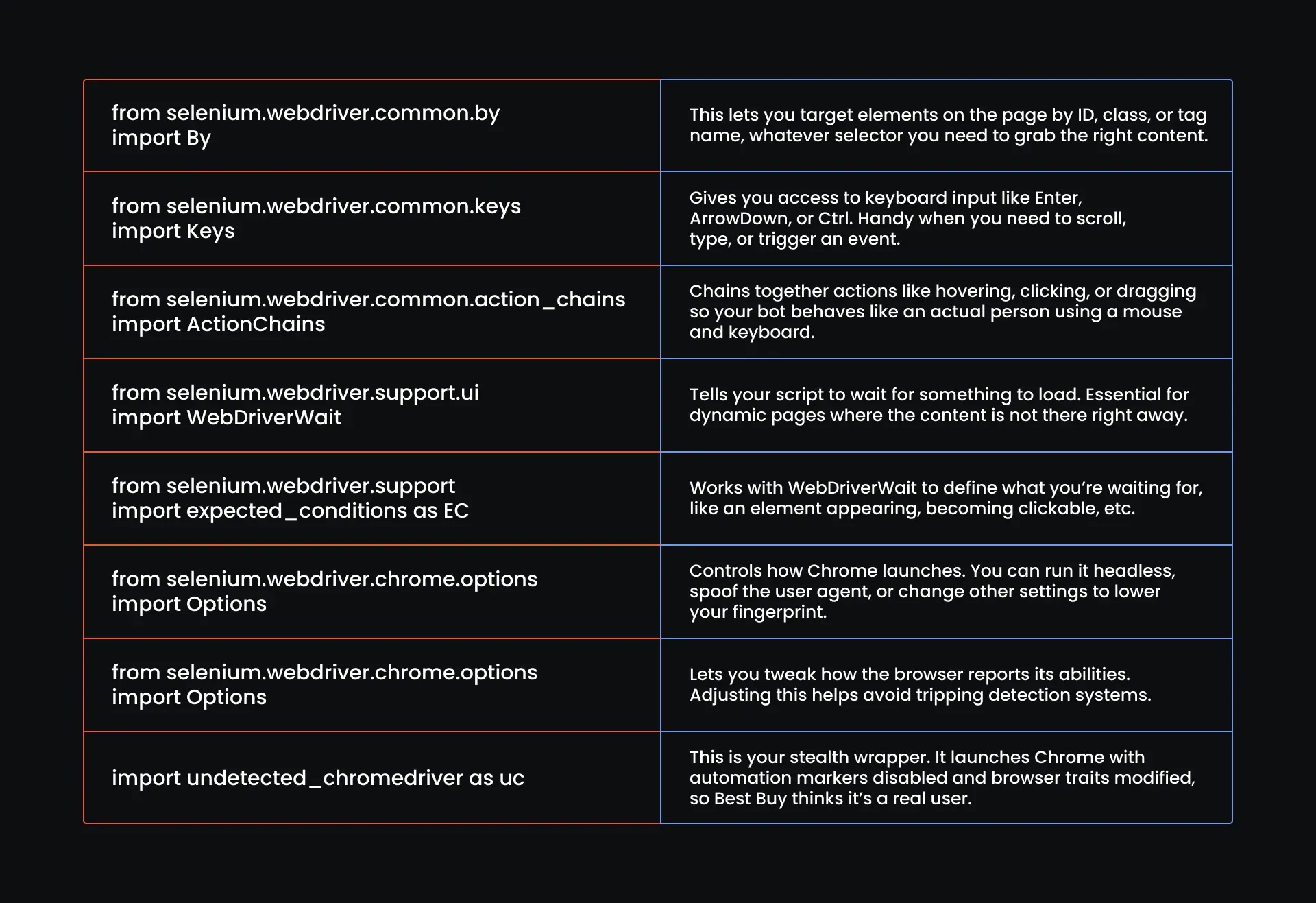

Browser control and stealth automation

These imports help you launch Chrome, mimic user actions, and get past the detection scripts Best Buy throws at you. This is the foundation of your stealth:

from selenium.webdriver.common.by import By

from selenium.webdriver.common.keys import Keys

from selenium.webdriver.common.action_chains import ActionChains

from selenium.webdriver.support.ui import WebDriverWait

from selenium.webdriver.support import expected_conditions as EC

from selenium.webdriver.chrome.options import Options

from selenium.webdriver.common.desired_capabilities import DesiredCapabilities

import undetected_chromedriver as uc

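Here's what each one does:

- By: tells Selenium how to locate an element (CSS selector, XPath, ID, and so on)

- Keys: sends special keys such as Enter or Page Down

- ActionChains: chains low-level mouse and keyboard actions like moving, hovering, and clicking

- WebDriverWait and expected_conditions (EC): wait until a condition is met, such as an element becoming visible, instead of sleeping blindly

- Options: holds Chrome launch flags

- DesiredCapabilities: sets browser capabilities (a legacy interface; Selenium 4 prefers Options)

- undetected_chromedriver (uc): launches a patched Chrome that hides common automation markers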

Proxy, fingerprint & spoofing logic

These imports shuffle proxies, change who you appear to be, and throw in delays to make it all look normal. This is what buys you time on the site. Sometimes, that’s all you need.

Here are the imports that you'll need:

import time

import random

import os

import sys

import uuid

import logging

import itertools

import re

import base64

And here is what each one does:

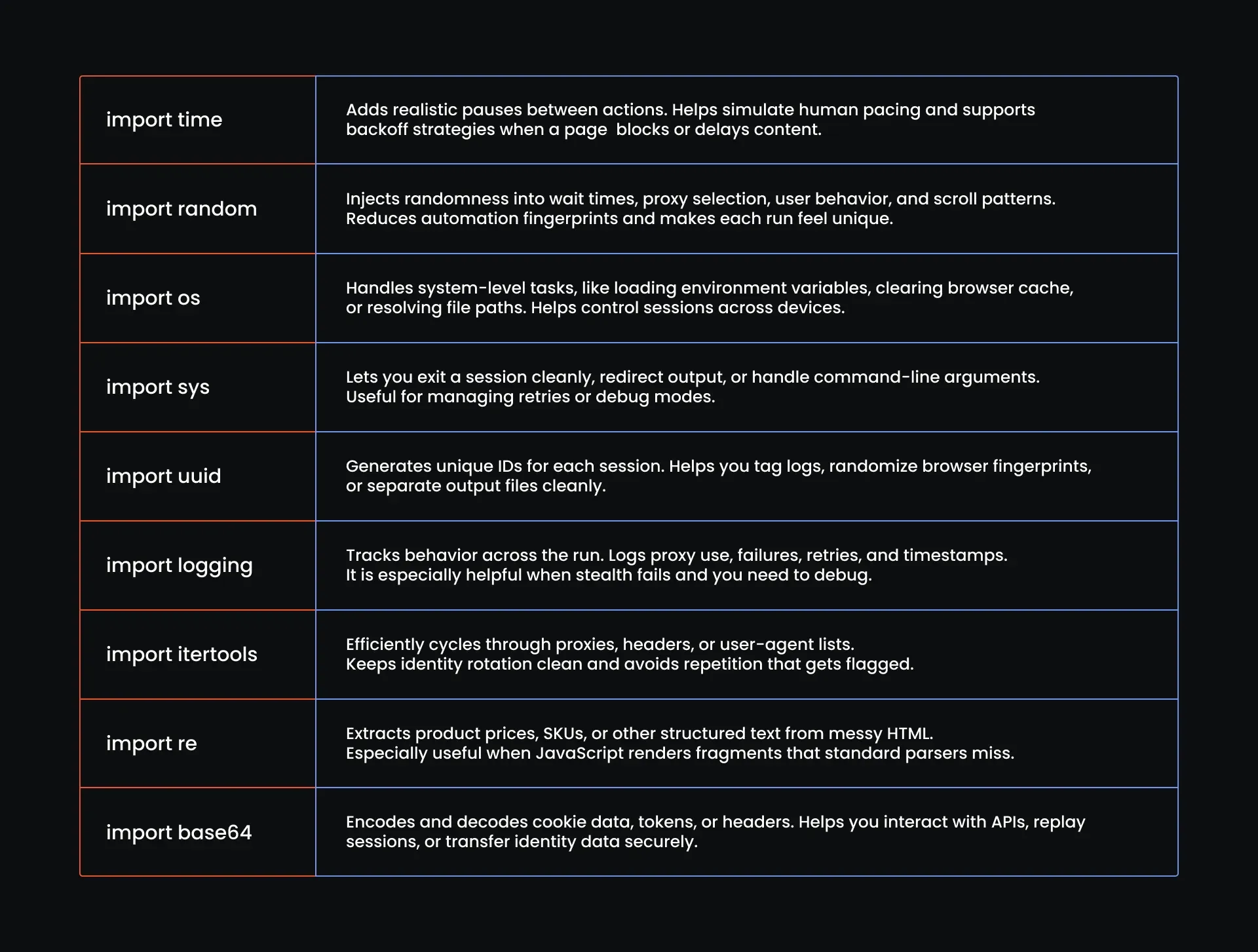

Alerting & Output

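These imports handle the last step: getting the results out. They send alerts when a price hits, log what happened, and write clean data files you can use or audit later. pandas is the only third-party package in this group, so install it with pip install pandas if you haven't already.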

import smtplib

from email.message import EmailMessage

from email.mime.text import MIMEText

import csv

import json

import pandas as pd

from datetime import datetime

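Here is what each one does:

- smtplib: connects to an SMTP server and sends your alert emails

- EmailMessage and MIMEText: build the email itself (subject, sender, recipient, body)

- csv: appends each price check to your log file

- json: reads and writes structured data if you want to store results or settings as JSON

- pandas (pd): loads the price log for analysis, such as spotting trends over time

- datetime: timestamps every check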
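To see how these pieces fit together, here's a minimal sketch of the output side: one function appends a row to the CSV log, another builds the alert email. The column layout, addresses, and SMTP host are placeholders we made up, and sending is left as a comment because it needs real server credentials.

```python
import csv
import os
import smtplib  # used by the commented-out send step below
import tempfile
from datetime import datetime
from email.message import EmailMessage

def log_price(csv_path: str, product: str, price: float) -> None:
    """Append one price check to the CSV log, writing a header on first use."""
    new_file = not os.path.exists(csv_path)
    with open(csv_path, "a", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        if new_file:
            writer.writerow(["timestamp", "product", "price"])
        writer.writerow([datetime.now().isoformat(timespec="seconds"), product, f"{price:.2f}"])

def build_alert(product: str, price: float, threshold: float,
                sender: str, recipient: str) -> EmailMessage:
    """Build (but do not send) a price-drop alert email."""
    msg = EmailMessage()
    msg["Subject"] = f"Price alert: {product} is ${price:.2f}"
    msg["From"] = sender
    msg["To"] = recipient
    msg.set_content(f"{product} dropped to ${price:.2f} (your threshold: ${threshold:.2f}).")
    return msg

log_file = os.path.join(tempfile.mkdtemp(), "price_log.csv")
log_price(log_file, "Nintendo Switch 2", 449.99)
alert = build_alert("Nintendo Switch 2", 449.99, 460.00,
                    "tracker@example.com", "you@example.com")
print(alert["Subject"])

# To actually send it (placeholder host and credentials):
# with smtplib.SMTP_SSL("smtp.example.com", 465) as smtp:
#     smtp.login("tracker@example.com", "app-password")
#     smtp.send_message(alert)
```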

That's it! We are now done with step 3. Your script should now look like this:

#Browser Control & Stealth Automation

from selenium.webdriver.common.by import By

from selenium.webdriver.common.keys import Keys

from selenium.webdriver.common.action_chains import ActionChains

from selenium.webdriver.support.ui import WebDriverWait

from selenium.webdriver.support import expected_conditions as EC

from selenium.webdriver.chrome.options import Options

from selenium.webdriver.common.desired_capabilities import DesiredCapabilities

import undetected_chromedriver as uc

#Proxy, Fingerprint & Spoofing Logic

import time

import random

import os

import sys

import uuid

import logging

import itertools

import re

import base64

# Alerting & Output

import smtplib

from email.message import EmailMessage

from email.mime.text import MIMEText

import csv

import json

import pandas as pd

from datetime import datetime

Step 4: Configuring global settings and execution strategy

Now that you’ve loaded your tools, it’s time to give your script a brain. This is where you define how it should behave, including how many times it retries, how long it waits, what product to track, and where to log results.

Drop in these global settings before you go any further:

# Configure logging

logging.basicConfig(level=logging.INFO, format='[%(asctime)s] %(message)s')

# Global Settings

HEADLESS = False # Set to True later if you spoof it correctly

MAX_RETRIES = 5

WAIT_MIN = 1.5 # base human wait

WAIT_JITTER = 2.0 # added jitter to simulate inconsistency

GOOGLE_RETRY_LIMIT = 3

GOOGLE_BACKOFF_BASE = 3 # seconds (waits 3s, 6s, 9s...)

BLOCK_BACKOFF_STEPS = [5, 10, 20, 30] # seconds

# list of products to search

PRODUCTS = [

{"name": "Nintendo Switch 2", "threshold": 460.00}

]

# CSV Output setup

CSV_FILE = "price_log.csv"

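Here is what this all means:

- HEADLESS: whether Chrome runs without a visible window. Leave it False until your spoofing is solid

- MAX_RETRIES: how many attempts the tracker makes before giving up

- WAIT_MIN and WAIT_JITTER: the base pause between actions and the random extra added on top, so no two waits are identical

- GOOGLE_RETRY_LIMIT: how many times to retry the Google search step

- GOOGLE_BACKOFF_BASE: the step size for Google retries, which grow linearly (3s, 6s, 9s)

- BLOCK_BACKOFF_STEPS: how long to back off after each successive block

- PRODUCTS: each product to search for, paired with the price threshold that should trigger an alert

- CSV_FILE: the file where each price check gets logged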
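The two backoff settings describe different schedules: Google retries grow linearly with GOOGLE_BACKOFF_BASE, while block recovery walks through the fixed BLOCK_BACKOFF_STEPS list. Here's a minimal sketch of how a retry loop might consume them. The helper names and the sleep parameter are hypothetical, and adding WAIT_JITTER on top of each pause is our own choice to keep the timing irregular.

```python
import random
import time

GOOGLE_BACKOFF_BASE = 3                # seconds, from the global settings
BLOCK_BACKOFF_STEPS = [5, 10, 20, 30]  # seconds
WAIT_JITTER = 2.0

def google_backoff(attempt: int) -> int:
    """Linear backoff: attempt 1 -> 3s, 2 -> 6s, 3 -> 9s."""
    return GOOGLE_BACKOFF_BASE * attempt

def block_backoff(block_count: int) -> int:
    """Fixed schedule for the nth block; repeats the last step once the list runs out."""
    index = min(max(block_count, 1), len(BLOCK_BACKOFF_STEPS)) - 1
    return BLOCK_BACKOFF_STEPS[index]

def with_retries(task, limit, delay_for, sleep=time.sleep):
    """Call task() up to `limit` times, waiting delay_for(attempt) plus jitter after each failure."""
    for attempt in range(1, limit + 1):
        try:
            return task()
        except Exception as exc:  # narrow this to the errors you expect in practice
            if attempt == limit:
                raise
            pause = delay_for(attempt) + random.uniform(0, WAIT_JITTER)
            print(f"Attempt {attempt} failed ({exc}); retrying in {pause:.1f}s")
            sleep(pause)

calls = {"n": 0}

def flaky_search():
    """Fails twice, then succeeds, to exercise the retry loop."""
    calls["n"] += 1
    if calls["n"] < 3:
        raise RuntimeError("blocked")
    return "ok"

print([google_backoff(n) for n in (1, 2, 3)])       # [3, 6, 9]
print([block_backoff(n) for n in (1, 2, 3, 4, 5)])  # [5, 10, 20, 30, 30]
print(with_retries(flaky_search, limit=3, delay_for=google_backoff, sleep=lambda s: None))
```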

Your script should now look like this:

#Browser Control & Stealth Automation

from selenium.webdriver.common.by import By

from selenium.webdriver.common.keys import Keys

from selenium.webdriver.common.action_chains import ActionChains

from selenium.webdriver.support.ui import WebDriverWait

from selenium.webdriver.support import expected_conditions as EC

from selenium.webdriver.chrome.options import Options

from selenium.webdriver.common.desired_capabilities import DesiredCapabilities

import undetected_chromedriver as uc

#Proxy, Fingerprint & Spoofing Logic

import time

import random

import os

import sys

import uuid

import logging

import itertools

import re

import base64

# Alerting & Output

import smtplib

from email.message import EmailMessage

from email.mime.text import MIMEText

import csv

import json

import pandas as pd

from datetime import datetime

# Your global settings and execution strategy

# Configure logging

logging.basicConfig(level=logging.INFO, format='[%(asctime)s] %(message)s')

# Global Settings

HEADLESS = False # Set to True later if you spoof it correctly

MAX_RETRIES = 5

WAIT_MIN = 1.5 # base human wait

WAIT_JITTER = 2.0 # added jitter to simulate inconsistency

GOOGLE_RETRY_LIMIT = 3

GOOGLE_BACKOFF_BASE = 3 # seconds (waits 3s, 6s, 9s...)

BLOCK_BACKOFF_STEPS = [5, 10, 20, 30] # seconds

# Example: list of products to search

PRODUCTS = [

{"name": "Nintendo Switch 2", "threshold": 460.00}

]

# CSV Output setup

CSV_FILE = "price_log.csv"

Step 5: Configuring your proxies

Our bot already has its imports. It has a brain and knows what to do and when to do it. Now we start giving it faces, and that begins with proxies.

Add your proxy strings

We're loading 10 U.S. residential proxies from different cities and states, twice the 5 retries we allow, so every retry can land on a fresh IP. We're going with MarsProxies residential proxies here because they're fast, effective, and easy to manage.

And since undetected-chromedriver makes proxy authentication tricky inside the code, we’re skipping credentials altogether and whitelisting our IP instead.

For our code, we will create a list and drop in our proxies, then define a function that picks a proxy from that list randomly. This keeps the rotation unpredictable and clean. Since we’re whitelisting instead of using login credentials, you don’t need to change the structure of the proxy URL itself, just update the location and session parts.
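One caveat: random.choice can hand you the same proxy twice in a row, which wastes a retry on an IP that may have just been flagged. If you want every retry to use a different IP, one option (our suggestion, not what the rest of this script does) is to draw the whole retry sequence up front with random.sample. The proxy strings here are shortened placeholders.

```python
import random

PROXIES = [f"http://proxy.example:8000/session-{i}" for i in range(10)]  # placeholders
MAX_RETRIES = 5

def proxy_plan(proxies: list[str], retries: int) -> list[str]:
    """Pick `retries` distinct proxies in random order (no repeats)."""
    if retries > len(proxies):
        raise ValueError("Need at least as many proxies as retries")
    return random.sample(proxies, retries)

plan = proxy_plan(PROXIES, MAX_RETRIES)
for attempt, proxy in enumerate(plan, start=1):
    print(f"Attempt {attempt}: {proxy}")
```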

Here’s the format you’ll follow:

http://ultra.marsproxies.com:44443/your-proxy-configuration-string

Stick to that layout and you’re good. Just swap in the right state or city, and tweak the session ID. This is what our proxy list looks like with 10 real U.S. locations already configured:

#Proxy Pool

PROXIES = [

"http://ultra.marsproxies.com:44443/country-us_city-acworth_session-5o10can2_lifetime-20m",

"http://ultra.marsproxies.com:44443/country-us_state-alabama_session-hk50878d_lifetime-20m",

"http://ultra.marsproxies.com:44443/country-us_state-arizona_session-0raeiekm_lifetime-20m",

"http://ultra.marsproxies.com:44443/country-us_city-berea_session-z74rc852_lifetime-20m",

"http://ultra.marsproxies.com:44443/country-us_city-camden_session-qjx1zf0r_lifetime-20m",

"http://ultra.marsproxies.com:44443/country-us_city-columbia_session-n81nr351_lifetime-20m",

"http://ultra.marsproxies.com:44443/country-us_city-daytonabeach_session-iffawas4_lifetime-20m",

"http://ultra.marsproxies.com:44443/country-us_state-delaware_session-jgvgyrmv_lifetime-20m",

"http://ultra.marsproxies.com:44443/country-us_city-denver_session-k038l41j_lifetime-20m",

"http://ultra.marsproxies.com:44443/country-us_city-fairborn_session-edkpbnq6_lifetime-20m"

]
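Hand-editing session IDs gets old fast. Here's a small generator, assuming the country-us_<city|state>-<name>_session-<id>_lifetime-<duration> layout shown in the list above. The 8-character lowercase alphanumeric session ID simply mimics those examples; it isn't a documented MarsProxies requirement, and make_proxy is a hypothetical name.

```python
import random
import string

BASE = "http://ultra.marsproxies.com:44443/"

def make_proxy(kind: str, name: str, lifetime: str = "20m") -> str:
    """Build a proxy string for a city or state with a fresh random session ID."""
    if kind not in ("city", "state"):
        raise ValueError("kind must be 'city' or 'state'")
    session = "".join(random.choices(string.ascii_lowercase + string.digits, k=8))
    return f"{BASE}country-us_{kind}-{name}_session-{session}_lifetime-{lifetime}"

locations = [("city", "denver"), ("state", "arizona"), ("city", "fairborn")]
PROXIES = [make_proxy(kind, name) for kind, name in locations]
for proxy in PROXIES:
    print(proxy)
```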

Add timezones

You can pass the proxy check and still get burned. Best Buy looks for signs that do not line up. Say you’re using a U.S. residential proxy out of Ohio, but your browser’s timezone is stuck in Eastern Europe. That’s a red flag. Best Buy sees the gap and doesn’t say a word.

What you get instead is a soft block. The page loads, but what loads is a decoy. No price or useful DOM, just enough to fake a pass. You never know unless you’re watching.
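Since a soft block still returns a page, the only defense is checking what came back before trusting it. Here's a rough heuristic sketch: treat the page as suspect if no dollar amount appears or if it contains challenge-style wording. The marker phrases are our guesses for illustration, not strings Best Buy is known to serve, and looks_soft_blocked is a hypothetical name.

```python
import re

PRICE_PATTERN = re.compile(r"\$\s?\d[\d,]*(?:\.\d{2})?")
# Hypothetical markers; tune them to what your blocked sessions actually show
SUSPECT_MARKERS = ("access denied", "verify you are a human", "unusual traffic")

def looks_soft_blocked(page_text: str) -> bool:
    """Flag pages that load but carry no usable price or show challenge text."""
    lowered = page_text.lower()
    if any(marker in lowered for marker in SUSPECT_MARKERS):
        return True
    return PRICE_PATTERN.search(page_text) is None

print(looks_soft_blocked("Nintendo Switch 2 ... $449.99 ... Add to Cart"))  # False
print(looks_soft_blocked("Nintendo Switch 2 ... Add to Cart"))              # True
```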

To prevent that from happening, add this code block to your script right after the proxy list:

# Minimal city/state to timezone map (expand as needed)

TIMEZONE_MAP = {

"city-acworth": "America/New_York",

"city-berea": "America/New_York",

"city-camden": "America/New_York",

"city-columbia": "America/New_York",

"city-daytonabeach": "America/New_York",

"city-denver": "America/Denver",

"city-fairborn": "America/New_York",

"state-alabama": "America/Chicago",

"state-arizona": "America/Phoenix",

"state-delaware": "America/New_York"

# Add more if needed

}

This dictionary maps each proxy’s city or state to its correct timezone. The keys represent locations pulled from the proxy string, like "city-denver" or "state-alabama", and the values are the matching timezone identifiers.

When your scraper loads a proxy, it uses this map to find the right timezone and apply it to the browser session. That way, your IP location and browser clock stay in sync.
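Chrome has no command-line flag for the timezone, but the Chrome DevTools Protocol does: Emulation.setTimezoneOverride. Selenium's Chrome driver, which undetected-chromedriver builds on, exposes execute_cdp_cmd for sending such commands. Here's a minimal sketch; apply_timezone is a hypothetical helper, and the fake driver exists only so the example runs without a browser.

```python
class FakeDriver:
    """Stand-in that records CDP calls; replace with a real uc.Chrome() driver."""

    def __init__(self):
        self.calls = []

    def execute_cdp_cmd(self, cmd, cmd_args):
        self.calls.append((cmd, cmd_args))
        return {}

def apply_timezone(driver, timezone_id: str) -> None:
    """Make the page's Date/Intl APIs report the proxy's timezone."""
    driver.execute_cdp_cmd("Emulation.setTimezoneOverride", {"timezoneId": timezone_id})

driver = FakeDriver()
apply_timezone(driver, "America/Denver")
print(driver.calls)
```

With a real driver, call it right after the browser starts and before the first page load, so no script on the page ever sees the machine's own clock.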

Add a function to extract your proxy's location and match it with the appropriate timezone

The proxy list is ready, and the timezone map is there. What’s missing is the link between the two. Add this function right after your map:

```python
def extract_timezone(proxy_string):
    match = re.search(r"(city|state)-([a-z]+)", proxy_string)
    if not match:
        raise ValueError(f"Could not extract location from: {proxy_string}")

    loc_key = f"{match.group(1)}-{match.group(2)}"
    timezone = TIMEZONE_MAP.get(loc_key)
    if not timezone:
        raise ValueError(f"No timezone mapped for: {loc_key}")

    return timezone
```

This function takes each proxy string and pulls out the location part. It looks for either a city or a state identifier, something like city-denver or state-arizona, inside the string.

If it cannot find one, it throws an error and stops you right there. If it does find one, it builds a lookup key and checks the timezone map. No match? It flags that too and tells you the timezone was not found.
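Because extract_timezone raises on the first bad entry, it's worth running it over the whole pool once at startup instead of discovering a typo mid-run. Here's a sketch using trimmed copies of the list and map so it runs on its own; the city-boise entry is a deliberately unmapped example, and validate_proxy_pool is a hypothetical name.

```python
import re

PROXIES = [
    "http://ultra.marsproxies.com:44443/country-us_city-denver_session-k038l41j_lifetime-20m",
    "http://ultra.marsproxies.com:44443/country-us_state-arizona_session-0raeiekm_lifetime-20m",
    "http://ultra.marsproxies.com:44443/country-us_city-boise_session-abc12345_lifetime-20m",
]
TIMEZONE_MAP = {"city-denver": "America/Denver", "state-arizona": "America/Phoenix"}

def extract_timezone(proxy_string):
    match = re.search(r"(city|state)-([a-z]+)", proxy_string)
    if not match:
        raise ValueError(f"Could not extract location from: {proxy_string}")
    loc_key = f"{match.group(1)}-{match.group(2)}"
    timezone = TIMEZONE_MAP.get(loc_key)
    if not timezone:
        raise ValueError(f"No timezone mapped for: {loc_key}")
    return timezone

def validate_proxy_pool(proxies):
    """Collect every problem instead of stopping at the first one."""
    problems = []
    for proxy in proxies:
        try:
            extract_timezone(proxy)
        except ValueError as exc:
            problems.append(str(exc))
    return problems

print(validate_proxy_pool(PROXIES))  # ['No timezone mapped for: city-boise']
```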

Add your identity pool

Next, you are going to add the identity pool. Because again, this is Best Buy. Every little crack gets noticed. One mismatch in fingerprinting, one wrong timezone, or one outdated user agent, and the site stops giving you real data.

This identity pool handles that. It gives your scraper a set of clean, believable device profiles to rotate through:

IDENTITY_POOL = [

{

"device_group": "desktop-windows",

"timezone": "America/New_York",

"user_agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/121.0.0.0 Safari/537.36",

"viewport": (1366, 768),

"platform": "Win32",

"hardware_concurrency": 8,

"max_touch_points": 0,

"webgl_vendor": "Intel Inc.",

"webgl_renderer": "Intel Iris Xe Graphics"

},

{

"device_group": "desktop-windows",

"timezone": "America/Chicago",

"user_agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/117.0.5938.92 Safari/537.36",

"viewport": (1600, 900),

"platform": "Win32",

"hardware_concurrency": 4,

"max_touch_points": 0,

"webgl_vendor": "NVIDIA Corporation",

"webgl_renderer": "NVIDIA GeForce GTX 1050/PCIe/SSE2"

},

{

"device_group": "desktop-mac",

"timezone": "America/Phoenix",

"user_agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 13_1) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.1 Safari/605.1.15",

"viewport": (1440, 900),

"platform": "MacIntel",

"hardware_concurrency": 8,

"max_touch_points": 0,

"webgl_vendor": "Apple Inc.",

"webgl_renderer": "Apple GPU"

},

{

"device_group": "desktop-linux",

"timezone": "America/Denver",

"user_agent": "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/119.0.0.0 Safari/537.36",

"viewport": (1280, 720),

"platform": "Linux x86_64",

"hardware_concurrency": 4,

"max_touch_points": 0,

"webgl_vendor": "X.Org",

"webgl_renderer": "AMD Radeon RX 560 Series (POLARIS11, DRM 3.35.0, 5.4.0-73-generic)"

},

{

"device_group": "desktop-windows",

"timezone": "America/New_York",

"user_agent": "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/118.0.5993.88 Safari/537.36",

"viewport": (1360, 768),

"platform": "Win32",

"hardware_concurrency": 2,

"max_touch_points": 0,

"webgl_vendor": "Google Inc.",

"webgl_renderer": "ANGLE (Intel, Intel HD Graphics 4600, Direct3D11 vs_5_0 ps_5_0)"

},

{

"device_group": "desktop-linux",

"timezone": "America/Chicago",

"user_agent": "Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:109.0) Gecko/20100101 Firefox/119.0",

"viewport": (1920, 1080),

"platform": "Linux x86_64",

"hardware_concurrency": 4,

"max_touch_points": 0,

"webgl_vendor": "Mesa/X.org",

"webgl_renderer": "Mesa DRI Intel(R) HD Graphics 620 (KBL GT2)"

},

{

"device_group": "desktop-mac",

"timezone": "America/New_York",

"user_agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/15.1 Safari/605.1.15",

"viewport": (1280, 800),

"platform": "MacIntel",

"hardware_concurrency": 4,

"max_touch_points": 0,

"webgl_vendor": "Apple Inc.",

"webgl_renderer": "AMD Radeon Pro 560X OpenGL Engine"

},

{

"device_group": "desktop-windows",

"timezone": "America/Phoenix",

"user_agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:118.0) Gecko/20100101 Firefox/118.0",

"viewport": (1536, 864),

"platform": "Win32",

"hardware_concurrency": 8,

"max_touch_points": 0,

"webgl_vendor": "Microsoft",

"webgl_renderer": "Microsoft Basic Render Driver"

},

{

"device_group": "desktop-linux",

"timezone": "America/Denver",

"user_agent": "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/115.0.0.0 Safari/537.36",

"viewport": (1366, 768),

"platform": "Linux x86_64",

"hardware_concurrency": 6,

"max_touch_points": 0,

"webgl_vendor": "Google Inc.",

"webgl_renderer": "ANGLE (AMD, Radeon RX 580 Series Direct3D11 vs_5_0 ps_5_0)"

},

{

"device_group": "desktop-windows",

"timezone": "America/New_York",

"user_agent": "Mozilla/5.0 (Windows NT 6.3; Trident/7.0; rv:11.0) like Gecko",

"viewport": (1024, 768),

"platform": "Win32",

"hardware_concurrency": 2,

"max_touch_points": 0,

"webgl_vendor": "Microsoft",

"webgl_renderer": "Direct3D11"

}

]

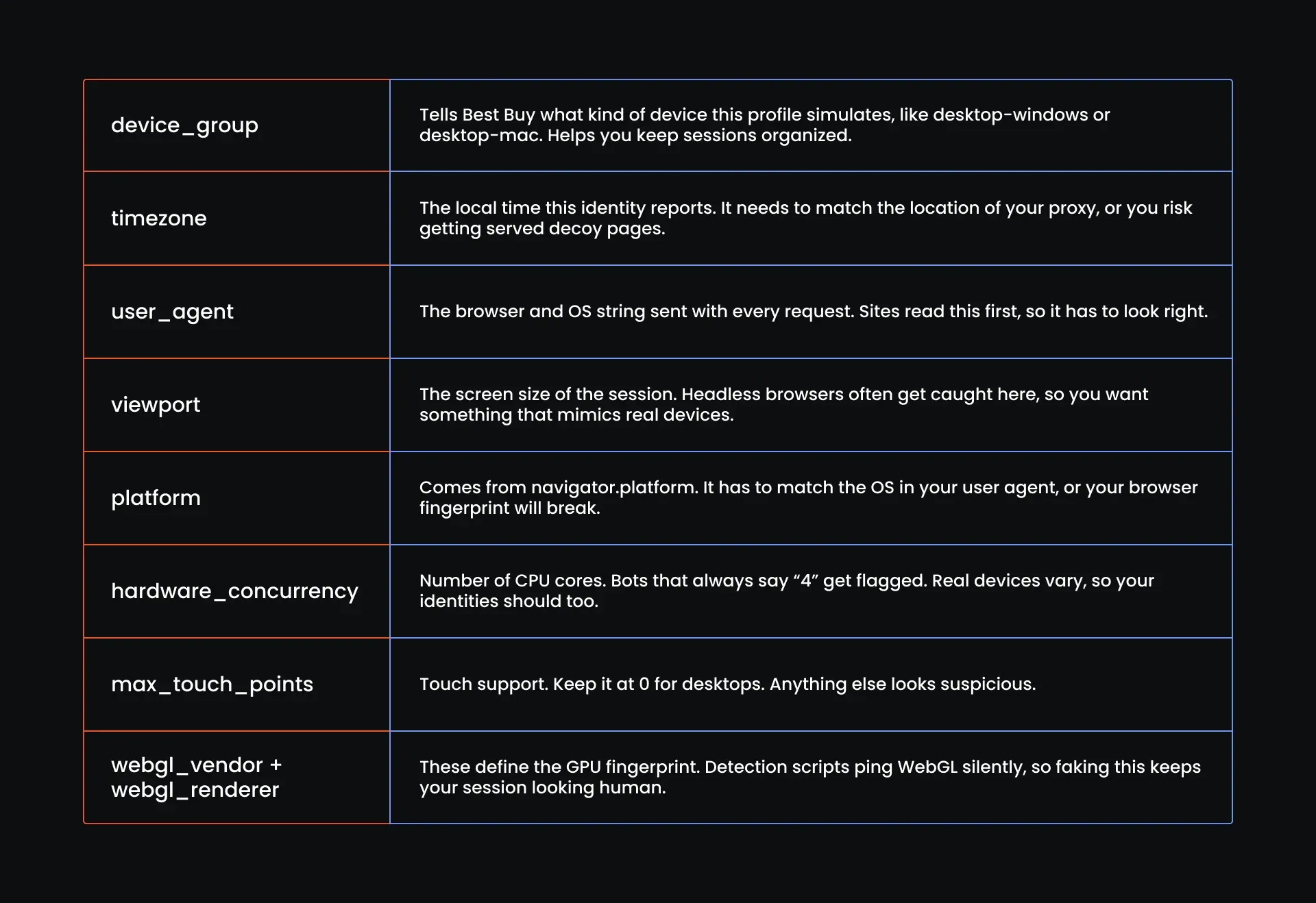

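Here is what each identity in our list of dictionaries contains:

- device_group: the broad device category (desktop-windows, desktop-mac, or desktop-linux)

- timezone: the IANA timezone the identity belongs to, so it can be paired with a proxy in the same zone

- user_agent: the browser's user agent string

- viewport: window width and height in pixels

- platform: the value navigator.platform should report

- hardware_concurrency: the number of CPU cores navigator.hardwareConcurrency should report

- max_touch_points: touch support (0 on these desktops)

- webgl_vendor and webgl_renderer: the GPU details WebGL exposes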
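One thing worth checking before you trust this pool: undetected-chromedriver always drives Chrome, yet several entries carry Safari, Firefox, or even Internet Explorer user agents, and the Chrome versions listed (115 to 121) may lag behind the latest Chrome you installed. A user agent that disagrees with the real browser is exactly the kind of crack this setup is meant to avoid. Here's a rough sketch of a sanity check built on our own simple string rules; identity_warnings is hypothetical and is not an exhaustive fingerprint audit.

```python
import re

def identity_warnings(identity: dict, chrome_major: int) -> list[str]:
    """List mismatches between an identity and the real Chrome that will run it."""
    ua = identity["user_agent"]
    warnings = []
    match = re.search(r"Chrome/(\d+)", ua)
    if not match or "Edg/" in ua:
        warnings.append("user agent is not Chrome")
    elif int(match.group(1)) != chrome_major:
        warnings.append(f"UA says Chrome {match.group(1)}, browser is {chrome_major}")
    # navigator.platform should agree with the OS named in the user agent
    expected = {"Windows": "Win32", "Macintosh": "MacIntel", "Linux": "Linux x86_64"}
    for os_token, platform in expected.items():
        if os_token in ua and identity["platform"] != platform:
            warnings.append(f"platform {identity['platform']!r} contradicts {os_token} UA")
    return warnings

sample_pool = [
    {"user_agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
                   "(KHTML, like Gecko) Chrome/121.0.0.0 Safari/537.36", "platform": "Win32"},
    {"user_agent": "Mozilla/5.0 (Windows NT 6.3; Trident/7.0; rv:11.0) like Gecko",
     "platform": "Win32"},
]
for identity in sample_pool:
    print(identity_warnings(identity, chrome_major=121))
```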

Add a function to assign a proxy and identity to each of your sessions

At this point, your script has everything it needs: proxies, timezones, and an identity pool that covers all the basics. But unless you connect them, none of it matters.

This function decides what proxy to use, grabs the correct timezone, and then filters your identity pool down to only the ones that make sense. If nothing fits, it shuts everything down before you waste a single request.

Let’s wire it in:

```python
# Proxy assignment and identity logic
def assign_proxy_and_identity():
    proxy = random.choice(PROXIES)
    logging.info(f"Selected proxy: {proxy}")

    try:
        timezone = extract_timezone(proxy)
    except ValueError as e:
        logging.error(f"Failed to extract timezone: {e}")
        raise

    # Filter identities by matching timezone
    matching_identities = [idn for idn in IDENTITY_POOL if idn["timezone"] == timezone]
    if not matching_identities:
        logging.error(f"No identities found for timezone: {timezone}")
        raise Exception(f"No identities match timezone: {timezone}")

    identity = random.choice(matching_identities)
    logging.info(f"Assigned identity with user agent: {identity['user_agent']}")
    return proxy, identity
```

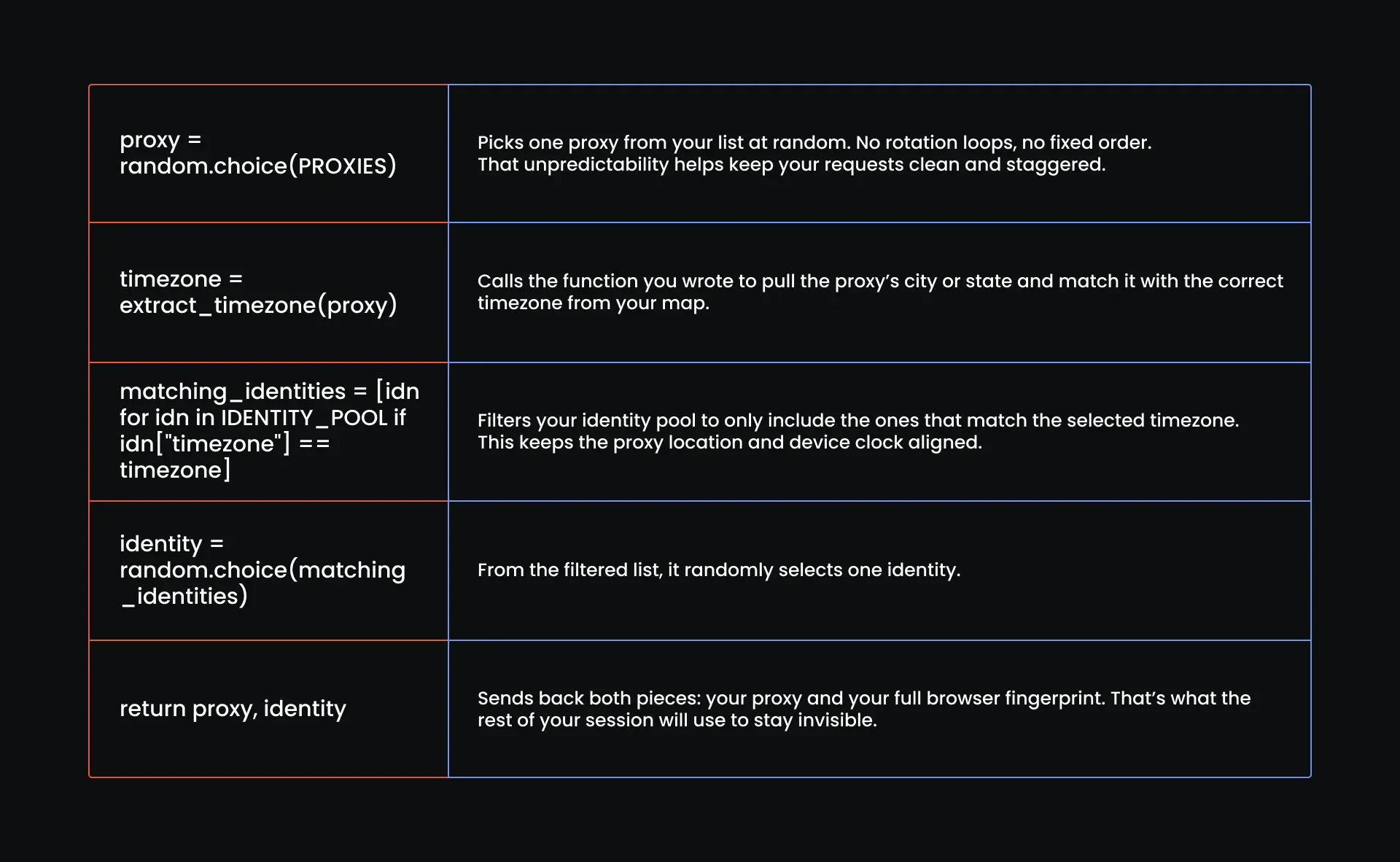

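Here is a detailed breakdown of what this function does:

- Picks a random proxy from PROXIES and logs it

- Extracts that proxy's timezone with extract_timezone(), logging and re-raising the error if the location can't be resolved

- Filters IDENTITY_POOL down to the identities whose timezone matches the proxy

- Raises an error if nothing matches, so a session never starts with a mismatched fingerprint

- Picks one of the matching identities at random, logs its user agent, and returns the proxy and identity together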
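The function hands back a proxy and an identity, but Chrome still has to be told about them. Here's a sketch of turning that pair into launch flags. --proxy-server, --user-agent, --window-size, and --lang are standard Chrome switches; build_chrome_args is a hypothetical helper, and the en-US locale is our assumption for U.S. proxies.

```python
def build_chrome_args(proxy: str, identity: dict, locale: str = "en-US") -> list[str]:
    """Translate a proxy/identity pair into Chrome command-line switches."""
    width, height = identity["viewport"]
    return [
        f"--proxy-server={proxy}",
        f"--user-agent={identity['user_agent']}",
        f"--window-size={width},{height}",
        f"--lang={locale}",
    ]

proxy = "http://ultra.marsproxies.com:44443/country-us_city-denver_session-k038l41j_lifetime-20m"
identity = {
    "user_agent": "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 "
                  "(KHTML, like Gecko) Chrome/119.0.0.0 Safari/537.36",
    "viewport": (1280, 720),
}
for arg in build_chrome_args(proxy, identity):
    print(arg)

# With undetected-chromedriver installed, the switches would be applied like this:
# import undetected_chromedriver as uc
# options = uc.ChromeOptions()
# for arg in build_chrome_args(proxy, identity):
#     options.add_argument(arg)
# driver = uc.Chrome(options=options)
```

Fields like platform, hardware_concurrency, and the WebGL values have no launch switch. They would need script injection, which this sketch doesn't cover.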

Step 6: Add your behavior profiles

Your bot is already wearing a good disguise. Now it needs to act the part. That means moving like a real user, because if the page loads and your click lands before the DOM is ready, Best Buy will catch you.

So now we train behavior. Think of this as muscle memory for your bot. These profiles give your bot the pauses and hesitations it needs to stay believable.

Add this block to your script:

# Each profile defines timing, interaction, and navigation tendencies

BEHAVIOR_PROFILES = [

{

"name": "Fast Clicker",

"base_delay": 0.5,

"scroll_pattern": "none",

"hover_before_click": False,

"re_click_probability": 0.05,

"slow_typing": False,

"move_mouse_between_actions": True,

},

{

"name": "Deliberate Reader",

"base_delay": 2.5,

"scroll_pattern": "linear",

"hover_before_click": True,

"re_click_probability": 0.02,

"slow_typing": True,

"move_mouse_between_actions": True,

},

{

"name": "Back-and-Forth Browser",

"base_delay": 1.2,

"scroll_pattern": "jittery",

"hover_before_click": True,

"re_click_probability": 0.1,

"slow_typing": True,

"move_mouse_between_actions": True,

},

{

"name": "Precise Shopper",

"base_delay": 1.0,

"scroll_pattern": "none",

"hover_before_click": False,

"re_click_probability": 0.01,

"slow_typing": True,

"move_mouse_between_actions": True,

},

{

"name": "Researcher",

"base_delay": 2.0,

"scroll_pattern": "linear",

"hover_before_click": True,

"re_click_probability": 0.03,

"slow_typing": True,

"move_mouse_between_actions": True,

},

{

"name": "Curious Clicker",

"base_delay": 1.7,

"scroll_pattern": "jittery",

"hover_before_click": True,

"re_click_probability": 0.2,

"slow_typing": False,

"move_mouse_between_actions": True,

},

{

"name": "Hesitant User",

"base_delay": 3.0,

"scroll_pattern": "none",

"hover_before_click": True,

"re_click_probability": 0.0,

"slow_typing": True,

"move_mouse_between_actions": True,

},

]

Let's meet our cast:

- Fast Clicker is that impatient buyer who just wants to hit “Add to Cart” and get on with it

- Deliberate Reader mimics a careful shopper by waiting longer and scrolling linearly, like someone reading specs or reviews

- Back-and-Forth Browser acts like someone second-guessing every step

- Precise Shopper is on a mission. They don’t scroll unless they have to

- Researcher reads everything. Their scrolls are smooth, delays are long, and typing feels almost too slow

- Curious Clicker behaves like a distracted user who sometimes pauses just long enough to fool a script

- Hesitant User moves slowly and hovers a lot. They barely scroll, type slowly, and move like they're worried about clicking the wrong thing. Best for cautious sessions.
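The scroll_pattern field only names a style; something still has to turn it into actual movement. Here's a minimal sketch that converts "none", "linear", and "jittery" into a list of vertical pixel offsets. The step sizes, the scroll-back probability, and the scroll_plan name are our assumptions; with a live driver, each offset would be fed to window.scrollBy through Selenium's execute_script.

```python
import random
from typing import Optional

def scroll_plan(pattern: str, total: int = 1200,
                rng: Optional[random.Random] = None) -> list[int]:
    """Turn a profile's scroll_pattern into vertical scroll offsets (pixels)."""
    rng = rng or random.Random()
    if pattern == "none":
        return []
    if pattern == "linear":
        # Even, steady steps, like someone reading top to bottom
        step = 200
        return [step] * (total // step)
    if pattern == "jittery":
        # Uneven steps with occasional scroll-backs
        offsets, travelled = [], 0
        while travelled < total:
            dy = rng.randint(80, 350)
            if rng.random() < 0.25:
                dy = -rng.randint(50, 150)  # second-guessing: scroll back up a bit
            offsets.append(dy)
            travelled += dy
        return offsets
    raise ValueError(f"Unknown scroll pattern: {pattern}")

plan = scroll_plan("jittery", rng=random.Random(7))
print(plan)

# With a live driver, each step could be applied with a pause in between:
# for dy in plan:
#     driver.execute_script("window.scrollBy(0, arguments[0]);", dy)
#     time.sleep(random.uniform(0.2, 0.8))
```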

Now that the behavior profiles are defined, this function picks one at random for each session:

```python
# Function to randomly select a behavior profile for a session
def assign_behavior_profile():
    return random.choice(BEHAVIOR_PROFILES)
```
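Picking a profile is half the job; the other half is letting it drive the timing. Here's a sketch of two hypothetical helpers: pause_for scales the profile's base_delay with random jitter, and type_like_human sends text one character at a time when slow_typing is on. The jitter range and per-key delays are our assumptions, and the fake element only lets the example run without a browser.

```python
import random
import time

def pause_for(profile: dict, rng=random, sleep=time.sleep) -> float:
    """Sleep for base_delay plus up to 60% extra, and return the delay used."""
    delay = profile["base_delay"] * (1 + rng.uniform(0, 0.6))
    sleep(delay)
    return delay

def type_like_human(element, text: str, profile: dict, rng=random, sleep=time.sleep) -> None:
    """Type via send_keys, one key at a time for slow typists."""
    if not profile["slow_typing"]:
        element.send_keys(text)
        return
    for char in text:
        element.send_keys(char)
        sleep(rng.uniform(0.08, 0.25))  # uneven gaps between keystrokes

class FakeElement:
    """Stand-in for a Selenium WebElement that records what was typed."""

    def __init__(self):
        self.keys = []

    def send_keys(self, value):
        self.keys.append(value)

profile = {"name": "Researcher", "base_delay": 2.0, "slow_typing": True}
box = FakeElement()
type_like_human(box, "switch 2", profile, sleep=lambda s: None)
delay = pause_for(profile, sleep=lambda s: None)
print(box.keys, round(delay, 2))
```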

That’s it. We’ve wired in the stealth. Your bot now looks human and acts human. At this point, your script should look like this:

#Browser Control & Stealth Automation

from selenium.webdriver.common.by import By

from selenium.webdriver.common.keys import Keys

from selenium.webdriver.common.action_chains import ActionChains

from selenium.webdriver.support.ui import WebDriverWait

from selenium.webdriver.support import expected_conditions as EC

from selenium.webdriver.chrome.options import Options

from selenium.webdriver.common.desired_capabilities import DesiredCapabilities

import undetected_chromedriver as uc

#Proxy, Fingerprint & Spoofing Logic

import time

import random

import os

import sys

import uuid

import logging

import itertools

import re

import base64

# Alerting & Output

import smtplib

from email.message import EmailMessage

from email.mime.text import MIMEText

import csv

import json

import pandas as pd

from datetime import datetime

# Configure logging

logging.basicConfig(level=logging.INFO, format='[%(asctime)s] %(message)s')

# Global Settings

HEADLESS = False # Set to True later if you spoof it correctly

MAX_RETRIES = 5

WAIT_MIN = 1.5 # base human wait

WAIT_JITTER = 2.0 # added jitter to simulate inconsistency

GOOGLE_RETRY_LIMIT = 3

GOOGLE_BACKOFF_BASE = 3 # seconds (waits 3s, 6s, 9s...)

BLOCK_BACKOFF_STEPS = [5, 10, 20, 30] # seconds

# Example: list of products to search

PRODUCTS = [

{"name": "Nintendo Switch 2", "threshold": 460.00}

]

# CSV Output setup

CSV_FILE = "price_log.csv"

#Proxy pool

PROXIES = [

"http://ultra.marsproxies.com:44443/country-us_city-acworth_session-5o10can2_lifetime-20m",

"http://ultra.marsproxies.com:44443/country-us_state-alabama_session-hk50878d_lifetime-20m",

"http://ultra.marsproxies.com:44443/country-us_state-arizona_session-0raeiekm_lifetime-20m",

"http://ultra.marsproxies.com:44443/country-us_city-berea_session-z74rc852_lifetime-20m",

"http://ultra.marsproxies.com:44443/country-us_city-camden_session-qjx1zf0r_lifetime-20m",

"http://ultra.marsproxies.com:44443/country-us_city-columbia_session-n81nr351_lifetime-20m",

"http://ultra.marsproxies.com:44443/country-us_city-daytonabeach_session-iffawas4_lifetime-20m",

"http://ultra.marsproxies.com:44443/country-us_state-delaware_session-jgvgyrmv_lifetime-20m",

"http://ultra.marsproxies.com:44443/country-us_city-denver_session-k038l41j_lifetime-20m",

"http://ultra.marsproxies.com:44443/country-us_city-fairborn_session-edkpbnq6_lifetime-20m"

]

# Minimal city/state to timezone map (expand as needed)

TIMEZONE_MAP = {

"city-acworth": "America/New_York",

"city-berea": "America/New_York",

"city-camden": "America/New_York",

"city-columbia": "America/New_York",

"city-daytonabeach": "America/New_York",

"city-denver": "America/Denver",

"city-fairborn": "America/New_York",

"state-alabama": "America/Chicago",

"state-arizona": "America/Phoenix",

"state-delaware": "America/New_York"

# Add more if needed

}

def extract_timezone(proxy_string):
    match = re.search(r"(city|state)-([a-z]+)", proxy_string)
    if not match:
        raise ValueError(f"Could not extract location from: {proxy_string}")

    loc_key = f"{match.group(1)}-{match.group(2)}"
    timezone = TIMEZONE_MAP.get(loc_key)
    if not timezone:
        raise ValueError(f"No timezone mapped for: {loc_key}")

    return timezone

# Identities to use

IDENTITY_POOL = [
    {
        "device_group": "desktop-windows",
        "timezone": "America/New_York",
        "user_agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/121.0.0.0 Safari/537.36",
        "viewport": (1366, 768),
        "platform": "Win32",
        "hardware_concurrency": 8,
        "max_touch_points": 0,
        "webgl_vendor": "Intel Inc.",
        "webgl_renderer": "Intel Iris Xe Graphics"
    },
    {
        "device_group": "desktop-windows",
        "timezone": "America/Chicago",
        "user_agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/117.0.5938.92 Safari/537.36",
        "viewport": (1600, 900),
        "platform": "Win32",
        "hardware_concurrency": 4,
        "max_touch_points": 0,
        "webgl_vendor": "NVIDIA Corporation",
        "webgl_renderer": "NVIDIA GeForce GTX 1050/PCIe/SSE2"
    },
    {
        "device_group": "desktop-mac",
        "timezone": "America/Phoenix",
        "user_agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 13_1) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.1 Safari/605.1.15",
        "viewport": (1440, 900),
        "platform": "MacIntel",
        "hardware_concurrency": 8,
        "max_touch_points": 0,
        "webgl_vendor": "Apple Inc.",
        "webgl_renderer": "Apple GPU"
    },
    {
        "device_group": "desktop-linux",
        "timezone": "America/Denver",
        "user_agent": "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/119.0.0.0 Safari/537.36",
        "viewport": (1280, 720),
        "platform": "Linux x86_64",
        "hardware_concurrency": 4,
        "max_touch_points": 0,
        "webgl_vendor": "X.Org",
        "webgl_renderer": "AMD Radeon RX 560 Series (POLARIS11, DRM 3.35.0, 5.4.0-73-generic)"
    },
    {
        "device_group": "desktop-windows",
        "timezone": "America/New_York",
        "user_agent": "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/118.0.5993.88 Safari/537.36",
        "viewport": (1360, 768),
        "platform": "Win32",
        "hardware_concurrency": 2,
        "max_touch_points": 0,
        "webgl_vendor": "Google Inc.",
        "webgl_renderer": "ANGLE (Intel, Intel HD Graphics 4600, Direct3D11 vs_5_0 ps_5_0)"
    },
    {
        "device_group": "desktop-linux",
        "timezone": "America/Chicago",
        "user_agent": "Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:109.0) Gecko/20100101 Firefox/119.0",
        "viewport": (1920, 1080),
        "platform": "Linux x86_64",
        "hardware_concurrency": 4,
        "max_touch_points": 0,
        "webgl_vendor": "Mesa/X.org",
        "webgl_renderer": "Mesa DRI Intel(R) HD Graphics 620 (KBL GT2)"
    },
    {
        "device_group": "desktop-mac",
        "timezone": "America/New_York",
        "user_agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/15.1 Safari/605.1.15",
        "viewport": (1280, 800),
        "platform": "MacIntel",
        "hardware_concurrency": 4,
        "max_touch_points": 0,
        "webgl_vendor": "Apple Inc.",
        "webgl_renderer": "AMD Radeon Pro 560X OpenGL Engine"
    },
    {
        "device_group": "desktop-windows",
        "timezone": "America/Phoenix",
        "user_agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:118.0) Gecko/20100101 Firefox/118.0",
        "viewport": (1536, 864),
        "platform": "Win32",
        "hardware_concurrency": 8,
        "max_touch_points": 0,
        "webgl_vendor": "Microsoft",
        "webgl_renderer": "Microsoft Basic Render Driver"
    },
    {
        "device_group": "desktop-linux",
        "timezone": "America/Denver",
        "user_agent": "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/115.0.0.0 Safari/537.36",
        "viewport": (1366, 768),
        "platform": "Linux x86_64",
        "hardware_concurrency": 6,
        "max_touch_points": 0,
        "webgl_vendor": "Google Inc.",
        "webgl_renderer": "ANGLE (AMD, Radeon RX 580 Series Direct3D11 vs_5_0 ps_5_0)"
    },
    {
        "device_group": "desktop-windows",
        "timezone": "America/New_York",
        "user_agent": "Mozilla/5.0 (Windows NT 6.3; Trident/7.0; rv:11.0) like Gecko",
        "viewport": (1024, 768),
        "platform": "Win32",
        "hardware_concurrency": 2,
        "max_touch_points": 0,
        "webgl_vendor": "Microsoft",
        "webgl_renderer": "Direct3D11"
    }
]

# Proxy assignment and identity logic
def assign_proxy_and_identity():
    proxy = random.choice(PROXIES)
    logging.info(f"Selected proxy: {proxy}")
    try:
        timezone = extract_timezone(proxy)
    except ValueError as e:
        logging.error(f"Failed to extract timezone: {e}")
        raise

    # Filter identities by matching timezone
    matching_identities = [idn for idn in IDENTITY_POOL if idn["timezone"] == timezone]
    if not matching_identities:
        logging.error(f"No identities found for timezone: {timezone}")
        raise Exception(f"No identities match timezone: {timezone}")

    identity = random.choice(matching_identities)
    logging.info(f"Assigned identity with user agent: {identity['user_agent']}")
    return proxy, identity

# Each profile defines timing, interaction, and navigation tendencies
BEHAVIOR_PROFILES = [
    {
        "name": "Fast Clicker",
        "base_delay": 0.5,
        "scroll_pattern": "none",
        "hover_before_click": False,
        "re_click_probability": 0.05,
        "slow_typing": False,
        "move_mouse_between_actions": True,
    },
    {
        "name": "Deliberate Reader",
        "base_delay": 2.5,
        "scroll_pattern": "linear",
        "hover_before_click": True,
        "re_click_probability": 0.02,
        "slow_typing": True,
        "move_mouse_between_actions": True,
    },
    {
        "name": "Back-and-Forth Browser",
        "base_delay": 1.2,
        "scroll_pattern": "jittery",
        "hover_before_click": True,
        "re_click_probability": 0.1,
        "slow_typing": True,
        "move_mouse_between_actions": True,
    },
    {
        "name": "Precise Shopper",
        "base_delay": 1.0,
        "scroll_pattern": "none",
        "hover_before_click": False,
        "re_click_probability": 0.01,
        "slow_typing": True,
        "move_mouse_between_actions": True,
    },
    {
        "name": "Researcher",
        "base_delay": 2.0,
        "scroll_pattern": "linear",
        "hover_before_click": True,
        "re_click_probability": 0.03,
        "slow_typing": True,
        "move_mouse_between_actions": True,
    },
    {
        "name": "Curious Clicker",
        "base_delay": 1.7,
        "scroll_pattern": "jittery",
        "hover_before_click": True,
        "re_click_probability": 0.2,
        "slow_typing": False,
        "move_mouse_between_actions": True,
    },
    {
        "name": "Hesitant User",
        "base_delay": 3.0,
        "scroll_pattern": "none",
        "hover_before_click": True,
        "re_click_probability": 0.0,
        "slow_typing": True,
        "move_mouse_between_actions": True,
    },
]

# Function to randomly select a behavior profile for a session
def assign_behavior_profile():
    return random.choice(BEHAVIOR_PROFILES)

Step 7: Launch undetected chromedriver

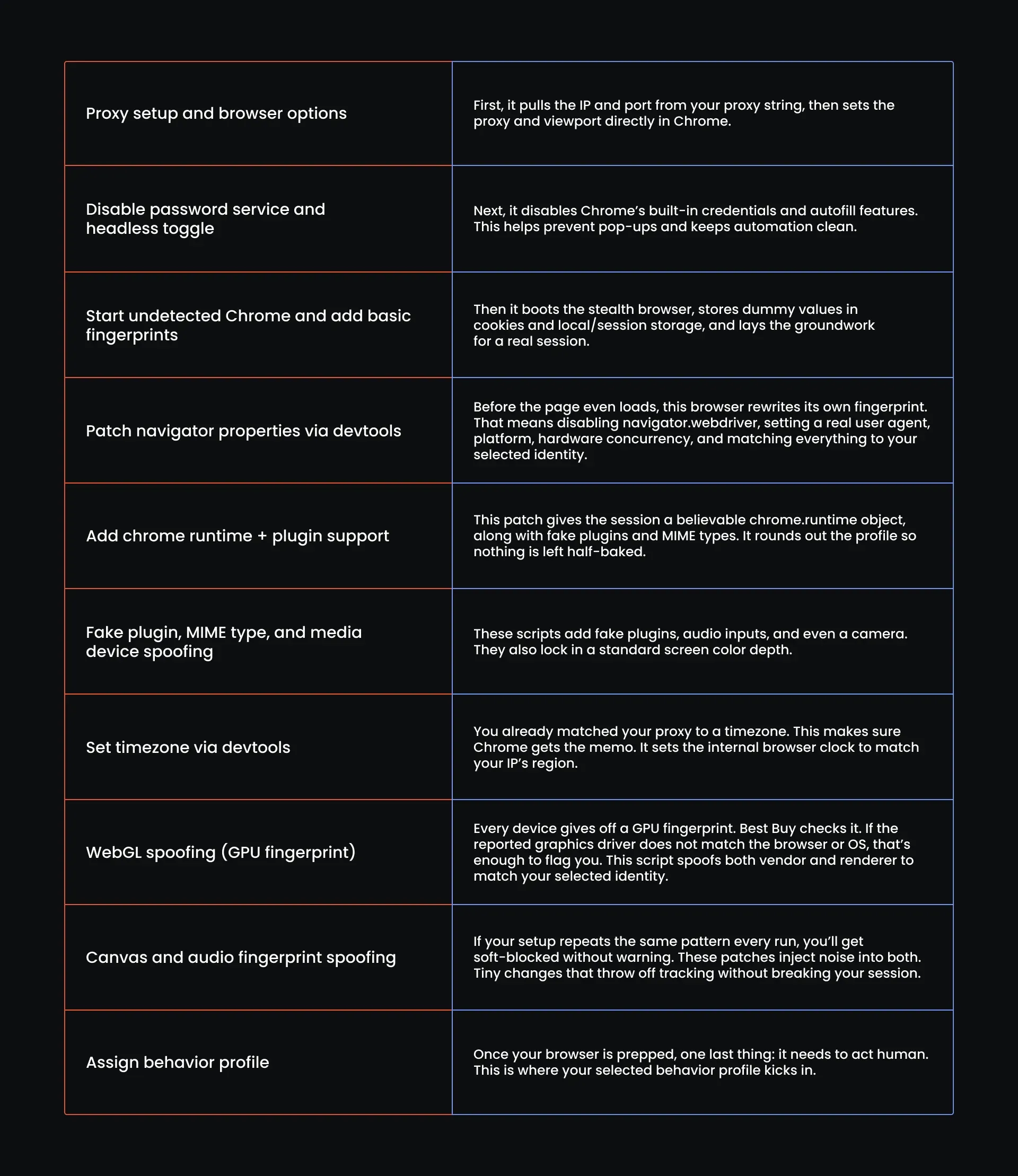

This is where it all comes together. This function ties the proxy, timezone, fingerprint overrides, and behavior logic into one stealth browser session. Drop it in right after your behavior profile function. It's long, but every part of it has a job.

def launch_stealth_browser(proxy: str, identity: dict):
    """
    Launch a stealthy undetected Chrome browser instance with proxy and identity settings applied.
    """
    # Get host:port from the IP-whitelisted proxy string (no credentials needed).
    # Only host:port reaches Chrome; the location/session path segment is not
    # part of the --proxy-server value.
    proxy_address = proxy.split("/")[2]  # e.g. 'ultra.marsproxies.com:44443'

    # Stealthy browser options
    options = uc.ChromeOptions()
    options.add_argument(f"--proxy-server=http://{proxy_address}")
    options.add_argument(f"--window-size={identity['viewport'][0]},{identity['viewport'][1]}")
    options.add_argument("--lang=en-US")
    options.add_argument("--disable-blink-features=AutomationControlled")
    options.add_experimental_option("prefs", {
        "credentials_enable_service": False,
        "profile.password_manager_enabled": False
    })
    if HEADLESS:
        options.add_argument("--headless=new")

    # Start undetected Chrome driver
    driver = uc.Chrome(options=options)

    # Seed storage and a cookie. This runs on the blank start page, so the values
    # belong to that page's origin (not bestbuy.com), and Chrome may block storage
    # access there; errors are ignored instead of aborting the launch.
    for js in (
        "window.localStorage.setItem('bb_test_key', 'value');",
        "window.sessionStorage.setItem('bb_test_key', 'value');",
        "document.cookie = 'bb_user_sim=1234567; path=/';",
    ):
        try:
            driver.execute_script(js)
        except Exception:
            pass

    # Navigator overrides, injected into every new document before page scripts run
    driver.execute_cdp_cmd("Page.addScriptToEvaluateOnNewDocument", {
        "source": f"""
            Object.defineProperty(navigator, 'webdriver', {{get: () => undefined}});
            Object.defineProperty(navigator, 'userAgent', {{get: () => "{identity['user_agent']}" }});
            Object.defineProperty(navigator, 'platform', {{get: () => "{identity['platform']}" }});
            Object.defineProperty(navigator, 'hardwareConcurrency', {{get: () => {identity['hardware_concurrency']} }});
            Object.defineProperty(navigator, 'maxTouchPoints', {{get: () => {identity['max_touch_points']} }});
            Object.defineProperty(navigator, 'languages', {{get: () => ['en-US', 'en'] }});
            Object.defineProperty(navigator, 'language', {{get: () => 'en-US' }});
        """
    })

    # Enable the DevTools Page domain
    driver.execute_cdp_cmd("Page.enable", {})

    # Add a navigator.chrome stub (affects the current page only)
    driver.execute_cdp_cmd("Runtime.evaluate", {
        "expression": "Object.defineProperty(navigator, 'chrome', { get: () => ({ runtime: {} }) });"
    })

    # Plugins, MIME types, media devices, and color depth. Registered as a
    # new-document script so the overrides survive navigation; execute_script()
    # would only patch the page that is open right now.
    driver.execute_cdp_cmd("Page.addScriptToEvaluateOnNewDocument", {"source": """
        Object.defineProperty(navigator, 'plugins', { get: () => [1, 2, 3, 4, 5] });
        Object.defineProperty(navigator, 'mimeTypes', { get: () => [1, 2, 3] });
        Object.defineProperty(navigator, 'mediaDevices', {
            get: () => ({
                enumerateDevices: () => Promise.resolve([
                    { kind: 'audioinput', label: 'Built-in Microphone' },
                    { kind: 'videoinput', label: 'Integrated Camera' }
                ])
            })
        });
        Object.defineProperty(screen, 'colorDepth', { get: () => 24 });
    """})

    # Enable the Network domain (no proxy authentication happens here;
    # the proxy is IP-whitelisted)
    driver.execute_cdp_cmd("Network.enable", {})

    # Set timezone via DevTools to match the proxy's location
    driver.execute_cdp_cmd("Emulation.setTimezoneOverride", {"timezoneId": identity["timezone"]})

    # Apply the navigator overrides to the page that is already open
    # (the new-document script above covers pages loaded later)
    driver.execute_script("""
        Object.defineProperty(navigator, 'platform', {get: () => '%s'});
        Object.defineProperty(navigator, 'hardwareConcurrency', {get: () => %d});
        Object.defineProperty(navigator, 'maxTouchPoints', {get: () => %d});
        Object.defineProperty(navigator, 'languages', {get: () => ['en-US', 'en']});
        Object.defineProperty(navigator, 'language', {get: () => 'en-US'});
        Object.defineProperty(navigator, 'webdriver', {get: () => undefined});
    """ % (
        identity['platform'],
        identity['hardware_concurrency'],
        identity['max_touch_points']
    ))

    behavior = assign_behavior_profile()

    # WebGL vendor/renderer spoofing (37445/37446 = UNMASKED_VENDOR/RENDERER_WEBGL).
    # Wrapped in a function so its const name can't clash with page globals; the
    # original getParameter is called with `this`, or Chrome throws "Illegal invocation".
    webgl_vendor = identity['webgl_vendor']
    webgl_renderer = identity['webgl_renderer']
    driver.execute_cdp_cmd("Page.addScriptToEvaluateOnNewDocument", {"source": f"""
        (() => {{
            const getParameter = WebGLRenderingContext.prototype.getParameter;
            WebGLRenderingContext.prototype.getParameter = function(parameter) {{
                if (parameter === 37445) return "{webgl_vendor}";
                if (parameter === 37446) return "{webgl_renderer}";
                return getParameter.call(this, parameter);
            }};
        }})();
    """})
    logging.info(f"WebGL spoof applied: {webgl_vendor} / {webgl_renderer}")

    # Canvas and AudioBuffer fingerprint spoofing, also injected into every new page
    driver.execute_cdp_cmd("Page.addScriptToEvaluateOnNewDocument", {"source": """
        (() => {
            HTMLCanvasElement.prototype.toDataURL = function() {
                return "data:image/png;base64,canvasfakestring==";
            };
            const origGetChannelData = AudioBuffer.prototype.getChannelData;
            AudioBuffer.prototype.getChannelData = function() {
                const results = origGetChannelData.apply(this, arguments);
                for (let i = 0; i < results.length; i++) {
                    results[i] = results[i] + Math.random() * 0.0000001;
                }
                return results;
            };
        })();
    """})

    logging.info("Stealth browser launched successfully.")
    return driver, behavior

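In short, the function builds the Chrome options (proxy, window size, language, automation flags), starts undetected Chrome, injects navigator, WebGL, canvas, and audio overrides, sets the browser's timezone to match the proxy's location, and returns the driver together with a randomly chosen behavior profile.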
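One detail worth isolating: `proxy.split("/")[2]` keeps only `host:port`, so the location and session segment after the slash never reaches Chrome's `--proxy-server` flag. If you want both pieces on hand, for logging or for sending the targeting parameters however your provider expects, the standard library can split them cleanly. This is a sketch: `split_proxy_url` is a hypothetical helper, and it assumes your proxy strings follow the `http://host:port/params` shape used in `PROXIES`.

```python
from urllib.parse import urlsplit


def split_proxy_url(proxy: str) -> tuple[str, str]:
    """Split 'http://host:port/params' into ('host:port', 'params').

    Assumes the shape used in PROXIES; 'params' is '' when there is no path.
    """
    parts = urlsplit(proxy)
    if not parts.hostname or parts.port is None:
        raise ValueError(f"Proxy string has no host:port: {proxy}")
    host_port = f"{parts.hostname}:{parts.port}"
    params = parts.path.lstrip("/")  # location/session segment, if any
    return host_port, params


host_port, params = split_proxy_url(
    "http://ultra.marsproxies.com:44443/country-us_city-denver_session-k038l41j_lifetime-20m"
)
print(host_port)  # ultra.marsproxies.com:44443
print(params)     # country-us_city-denver_session-k038l41j_lifetime-20m
```

You would still pass `host_port` to `--proxy-server` exactly as the launcher does; what to do with `params` depends on your proxy provider's documentation.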

Step 8: Verify your stealth setup is working

Instead of crossing your fingers and hoping Best Buy plays nice, do the smart thing. Run this quick check. It’ll show you whether your setup passes or fails, all before you ever hit the product page.

def verify_stealth_setup(driver, identity):
    try:
        # Go to a page that reflects fingerprint info
        driver.get("https://bot.sannysoft.com")
        time.sleep(3)

        # Step 1: Basic navigator checks using JS
        navigator_checks = driver.execute_script("""
            return {
                webdriver: navigator.webdriver === undefined,
                platform: navigator.platform,
                hardwareConcurrency: navigator.hardwareConcurrency,
                languages: navigator.languages,
                userAgent: navigator.userAgent
            };
        """)

        if not navigator_checks['webdriver']:
            logging.warning("webdriver flag detected: browser likely flagged as bot")
            return False

        if navigator_checks['platform'] != identity['platform']:
            logging.warning(f"Platform mismatch: expected {identity['platform']}, got {navigator_checks['platform']}")
            return False

        if navigator_checks['hardwareConcurrency'] != identity['hardware_concurrency']:
            logging.warning(f"CPU core mismatch: expected {identity['hardware_concurrency']}, got {navigator_checks['hardwareConcurrency']}")
            return False

        if list(navigator_checks['languages'] or []) != ['en-US', 'en']:
            logging.warning(f"Language mismatch: expected ['en-US', 'en'], got {navigator_checks['languages']}")
            return False

        if navigator_checks['userAgent'] != identity['user_agent']:
            logging.warning(f"User-Agent mismatch: expected {identity['user_agent']}, got {navigator_checks['userAgent']}")
            return False

        # Step 2: Timezone check via JS
        browser_tz = driver.execute_script("return Intl.DateTimeFormat().resolvedOptions().timeZone")
        if browser_tz != identity['timezone']:
            logging.warning(f"Timezone mismatch: expected {identity['timezone']}, got {browser_tz}")
            return False

        # Passed all checks
        logging.info("Stealth verification passed.")
        return True

    except Exception as e:
        logging.error(f"Error during stealth verification: {e}")
        return False

This part of the script is your tripwire. It loads the Sannysoft bot test page (bot.sannysoft.com), reads your browser's fingerprint values with JavaScript, and compares them against the identity profile you assigned earlier. You pass, you scrape. You fail, it tells you what broke.

It checks your webdriver status, platform, language stack, CPU core count, and user agent. Then it checks your timezone. Any mismatch gets logged, the function returns False, and the main loop throws the session away and retries with a fresh proxy and identity before you ever touch a product page.
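Stopping at the first mismatch makes debugging slower when several values are off at once. A small variation collects every problem in one pass. This is a sketch with a hypothetical `find_fingerprint_mismatches` helper; it assumes `observed` holds the dict returned by the `navigator_checks` script plus a `timezone` key read from `Intl.DateTimeFormat()`, and that `identity` is an entry from `IDENTITY_POOL`.

```python
def find_fingerprint_mismatches(observed: dict, identity: dict) -> list[str]:
    """Return every fingerprint problem instead of stopping at the first one."""
    problems = []
    # navigator_checks stores True when navigator.webdriver is undefined
    if observed.get("webdriver") is not True:
        problems.append("webdriver flag exposed")
    expected = {
        "platform": identity["platform"],
        "userAgent": identity["user_agent"],
        "hardwareConcurrency": identity["hardware_concurrency"],
        "languages": ["en-US", "en"],
        "timezone": identity["timezone"],
    }
    for key, want in expected.items():
        got = observed.get(key)
        if got != want:
            problems.append(f"{key}: expected {want!r}, got {got!r}")
    return problems


identity = {"platform": "Win32", "user_agent": "UA-1", "hardware_concurrency": 8, "timezone": "America/New_York"}
observed = {"webdriver": True, "platform": "Win32", "userAgent": "UA-1", "hardwareConcurrency": 8,
            "languages": ["en-US", "en"], "timezone": "America/Chicago"}
print(find_fingerprint_mismatches(observed, identity))
# ["timezone: expected 'America/New_York', got 'America/Chicago'"]
```

Inside `verify_stealth_setup`, you could log each entry of the returned list and return `not problems`.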

Step 9: Define a function to perform a Google search

Once your identity passes the fingerprint check, you can move forward. Most bots head straight to the product link. That's fast, but it's also an obvious pattern. Starting from Google, the way a real shopper often would, gives the session a more natural entry path, which should draw fewer flags. It costs extra time (the typing delays alone add up), but it's the safer route. Drop this in after your stealth check:

def perform_google_search(driver, behavior, query="nintendo switch 2 best buy"):
    try:
        logging.info("Navigating to Google Search...")
        driver.get("https://www.google.com")

        # Wait for the search input to appear
        search_box = WebDriverWait(driver, 10).until(
            EC.presence_of_element_located((By.NAME, "q"))
        )

        # Simulate human typing with typos and backspaces; timing follows the behavior profile
        typo_chance = 0.15 if behavior["slow_typing"] else 0.05
        base_delay = behavior["base_delay"]
        for char in query:
            if random.random() < typo_chance:
                wrong_char = random.choice("abcdefghijklmnopqrstuvwxyz")
                search_box.send_keys(wrong_char)
                time.sleep(base_delay + random.uniform(0, 0.5))
                search_box.send_keys(Keys.BACKSPACE)
                time.sleep(base_delay + random.uniform(0, 0.5))
            search_box.send_keys(char)
            time.sleep(base_delay + random.uniform(0, 0.3))
        search_box.send_keys(Keys.ENTER)

        # Wait for search results to load
        WebDriverWait(driver, 10).until(
            EC.presence_of_element_located((By.ID, "search"))
        )
        time.sleep(base_delay + random.uniform(1.5, 2.5))  # Simulate scanning results

        logging.info("Scanning for Best Buy link...")
        links = driver.find_elements(By.CSS_SELECTOR, 'div#search a')
        for link in links:
            href = link.get_attribute("href")
            if href and "bestbuy.com" in href:
                if behavior["hover_before_click"]:
                    ActionChains(driver).move_to_element(link).perform()
                    time.sleep(base_delay + random.uniform(0.5, 1.0))
                ActionChains(driver).move_to_element(link).pause(random.uniform(0.8, 2)).click().perform()
                logging.info(f"Clicked Best Buy link: {href}")

                # Let the first Best Buy load settle, then refresh so JS-injected prices get another chance to render
                time.sleep(random.uniform(6, 8))
                driver.refresh()
                logging.info("Refreshed Best Buy page to trigger JS-based price injection")
                return True

        logging.warning("No Best Buy link found in search results.")
        return False

    except Exception as e:
        logging.error(f"Error during Google search: {e}")
        return False

The function opens a clean Google homepage. It waits for the search bar, then starts typing like a human would, complete with random typos and backspaces. Every step adjusts based on the selected behavior profile.

Once the full query is entered, it presses Enter and scans the results for the first Best Buy link. When it finds one, it clicks through, waits six to eight seconds for the page to load, then triggers a full-page refresh. That last step is there to give delayed elements, like prices injected by JavaScript, a second chance to load.
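The typing loop mixes two concerns: deciding what to type and actually sending keys. Splitting out the decision makes the typo logic testable without a browser. This is a sketch rather than the script's own code: `plan_keystrokes` and `replay` are hypothetical names, and `"\b"` stands in for Selenium's `Keys.BACKSPACE`. The delays reuse the ranges from the typing loop.

```python
import random
import string

BACKSPACE = "\b"  # stand-in for Selenium's Keys.BACKSPACE in this sketch


def plan_keystrokes(query, typo_chance, base_delay, rng=None):
    """Return (key, delay_seconds) pairs using the same delay ranges as the typing loop."""
    rng = rng or random.Random()
    plan = []
    for char in query:
        if rng.random() < typo_chance:
            # Wrong letter, then a backspace to correct it
            plan.append((rng.choice(string.ascii_lowercase), base_delay + rng.uniform(0, 0.5)))
            plan.append((BACKSPACE, base_delay + rng.uniform(0, 0.5)))
        plan.append((char, base_delay + rng.uniform(0, 0.3)))
    return plan


def replay(plan):
    """Apply the keystrokes to an empty text box and return the final text."""
    text = []
    for key, _delay in plan:
        if key == BACKSPACE:
            text.pop()
        else:
            text.append(key)
    return "".join(text)


plan = plan_keystrokes("nintendo switch 2", typo_chance=0.15, base_delay=0.5, rng=random.Random(7))
print(replay(plan))  # nintendo switch 2
```

To drive the browser from a plan, you would send `Keys.BACKSPACE` whenever the key is the stand-in, otherwise send the character, then `time.sleep(delay)`.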

Step 10: Define a function to extract prices from Best Buy

This is the step everything else exists for: pulling the price off the page. If it fails, nothing before it matters. So let's write the function that finally makes it real:

def extract_price_from_bestbuy(driver, behavior):
    try:
        logging.info("Waiting for Best Buy product page to load key elements...")

        # Wait for common layout elements that appear first
        WebDriverWait(driver, 60).until(
            EC.presence_of_element_located((By.CSS_SELECTOR, "header, footer, div.shop-header"))
        )
        time.sleep(random.uniform(5.2, 10.2))

        # Simulate uneven, human-like scrolling
        for _ in range(5):
            offset = random.randint(200, 600)
            driver.execute_script(f"window.scrollBy(0, {offset});")
            time.sleep(random.uniform(5.2, 10.2))

        # Pick up to three random links/buttons and hover over them (no clicking)
        potential_clicks = driver.find_elements(By.CSS_SELECTOR, "a, button")
        interactive_targets = random.sample(potential_clicks, min(3, len(potential_clicks)))
        for el in interactive_targets:
            try:
                if el.is_displayed() and el.is_enabled():
                    ActionChains(driver).move_to_element(el).pause(random.uniform(0.6, 1.4)).perform()
                    logging.info(f"Hovered on: {el.tag_name}")
                    time.sleep(random.uniform(1.0, 2.0))
            except Exception:
                continue

        # Wait for the price container
        try:
            WebDriverWait(driver, 60).until(
                EC.visibility_of_element_located((By.CSS_SELECTOR, "div[data-testid='large-customer-price']"))
            )
            logging.info("Price element is now visible.")
        except Exception as e:
            logging.warning(f"Timed out waiting for price to become visible: {e}")
            return None

        # Simulate reading time before extraction
        time.sleep(random.uniform(5.2, 10.2))

        # Hover over the price block, as a reader might
        try:
            price_zone = driver.find_element(By.CSS_SELECTOR, "div[data-testid='large-customer-price']")
            ActionChains(driver).move_to_element(price_zone).pause(random.uniform(0.8, 1.6)).perform()
        except Exception:
            pass  # Continue even if the hover fails

        # Read the text of every matching price container
        price_elements = driver.find_elements(By.CSS_SELECTOR, "div[data-testid='large-customer-price']")
        for el in price_elements:
            text = el.text.strip()
            # Accept "$499.99" as well as comma-grouped prices such as "$1,299.99"
            if re.search(r"\$\d{1,3}(?:,\d{3})*(?:\.\d{2})?", text):
                logging.info(f"Price found: {text}")
                return text

        logging.warning("No matching price text found.")
        return None

    except Exception as e:
        import traceback
        logging.error(f"Price extraction failed: {e}")
        traceback.print_exc()
        # Bonus: capture a screenshot and the HTML for debugging
        timestamp = datetime.now().strftime("%Y%m%d_%H%M%S")
        driver.save_screenshot(f"debug_price_fail_{timestamp}.png")
        with open(f"debug_price_fail_{timestamp}.html", "w", encoding="utf-8") as f:
            f.write(driver.page_source)
        return None

This function doesn’t assume anything. It first waits for signs that the page has started to load: a header, a footer, or the shop header container. Then it slows down. It scrolls in uneven steps to wake up lazy-loaded content, the kind of scattered movement a real user makes without thinking.

Next, it picks up to three random links or buttons and hovers over them, leaving a mouse trail that looks like someone glancing around the page. Then it waits for the price container to become visible, pauses as if reading, hovers over the price, and only then extracts the text. If anything breaks, it logs the error, saves a screenshot and the page HTML for debugging, and returns None.
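A visible price container doesn't guarantee it already holds a price, especially when the number is filled in by JavaScript after the box renders. Selenium's `WebDriverWait.until()` accepts any callable that takes the driver and returns a truthy value once it is satisfied, so one option is a custom condition that waits for dollar-formatted text. The `price_text_present` name and the price pattern below are assumptions for illustration, not part of the original script.

```python
import re

# Assumed price format: "$" followed by digits with optional commas and cents
PRICE_PATTERN = re.compile(r"\$\d[\d,]*(?:\.\d{2})?")


def price_text_present(locator, pattern=PRICE_PATTERN):
    """Build a WebDriverWait condition that returns the first element text containing a price.

    `locator` is a (By, selector) tuple; the condition returns False until a match appears.
    """
    def _condition(driver):
        for el in driver.find_elements(*locator):
            try:
                text = el.text.strip()
            except Exception:
                continue  # e.g. the element went stale while the page re-rendered
            if pattern.search(text):
                return text
        return False
    return _condition
```

Used in place of the visibility wait, `WebDriverWait(driver, 60).until(price_text_present((By.CSS_SELECTOR, "div[data-testid='large-customer-price']")))` would return the matching text directly, or raise `TimeoutException` after 60 seconds.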

Step 11: Add a function to detect Best Buy soft blocks

Even with stealth, timing, and clean headers, Best Buy sometimes slips in a fake load. Looks like a page, acts like a page, but gives you nothing useful. That’s why this function exists. It spots those soft blocks early so your tracker knows when not to trust the content.

def detect_block(driver):
    try:
        html = driver.page_source.lower()

        if "unusual traffic" in html or "detected unusual traffic" in html:
            logging.warning("Block detected: Google CAPTCHA page.")
            return "captcha"

        if "are you a human" in html or "please verify you are a human" in html:
            logging.warning("Block detected: Generic bot check.")
            return "bot_check"

        if "automated queries" in html or "too many requests" in html:
            logging.warning("Block detected: Rate limit or abuse prevention.")
            return "rate_limit"

        if "access denied" in html or "403 forbidden" in html:
            logging.warning("Block detected: Forbidden page.")
            return "forbidden"

        if len(html.strip()) < 1000:
            logging.warning("Block suspected: Empty or tiny page.")
            return "empty"

        return None

    except Exception as e:
        # If the page source can't be read, report no block instead of crashing
        logging.error(f"Block detection failed: {e}")
        return None

This function looks for signs that the loaded page isn't the real thing. It scans the page source for telltale phrases: Google’s “unusual traffic” CAPTCHA notice, “are you a human” style bot checks, “automated queries” or “too many requests” rate-limit messages, and “access denied” or “403 forbidden” pages. It also treats any page with fewer than 1,000 characters of HTML as a suspected empty block. These are the tells. They show up when the site suspects you’re a bot but doesn’t want to say it out loud.

When the function returns one of those labels, the main loop treats the data as tainted: it raises an error, closes the browser, and retries with a new proxy and identity after a backoff. That keeps garbage out of your log and stops alerts on phantom price drops.
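Because `detect_block` only needs the page source, the same checks can live in a pure function you can test against saved HTML, such as the `debug_price_fail_*.html` files the extraction step writes. The sketch below mirrors the phrases above and adds one optional check that `detect_block` doesn't make: treating a product page without the price container as suspicious. `classify_page` is a hypothetical name, and the exact attribute string is an assumption about how the container appears in `page_source`.

```python
# Phrases mirror detect_block; the price-container check is an optional extra.
BLOCK_MARKERS = [
    ("captcha", ("unusual traffic",)),
    ("bot_check", ("are you a human", "please verify you are a human")),
    ("rate_limit", ("automated queries", "too many requests")),
    ("forbidden", ("access denied", "403 forbidden")),
]
# Assumed serialization of the price container in page_source
PRICE_CONTAINER_MARKER = 'data-testid="large-customer-price"'


def classify_page(html, expect_price=False):
    """Return a block label for the page HTML, or None if it looks usable."""
    lowered = html.lower()
    for label, phrases in BLOCK_MARKERS:
        if any(phrase in lowered for phrase in phrases):
            return label
    if len(lowered.strip()) < 1000:
        return "empty"
    if expect_price and PRICE_CONTAINER_MARKER not in lowered:
        return "no_price_container"
    return None
```

In the tracker you could call `classify_page(driver.page_source, expect_price=True)` after landing on the product page; offline, you can feed it the contents of a saved debug file.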

Step 12: Add functions to parse the price, send an email alert, and log to CSV

We’re adding one function to turn the scraped price text into a number, another to send an alert straight to your inbox, and a third to append each alert to a CSV file so you have a record over time.

def clean_price_string(price_str):
    """Turn scraped text such as '$1,299.99' into a float (1299.99), or None."""
    if not price_str:
        return None
    match = re.search(r"\$(\d[\d,]*(?:\.\d{2})?)", price_str)
    return float(match.group(1).replace(",", "")) if match else None

def send_price_alert(product_name, price, threshold):
    msg = MIMEText(
        f"Price Drop Alert\n\n{product_name} has dropped to ${price:.2f}, "
        f"below your threshold of ${threshold:.2f}.\nTime to buy!"
    )
    msg["Subject"] = f"Time to Buy: {product_name} is now ${price:.2f}"
    msg["From"] = "youremailaddress"
    msg["To"] = "youremailaddress"
    # Replace with your own credentials; Gmail SMTP typically requires an app password
    with smtplib.SMTP("smtp.gmail.com", 587) as server:
        server.starttls()
        server.login("youremailaddress", "yourpassword")
        server.send_message(msg)

def log_price_to_csv(product_name, price, threshold):
    with open("price_alerts.csv", mode="a", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        writer.writerow([datetime.now().isoformat(), product_name, price, threshold])
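`log_price_to_csv` appends rows with no header, in the order timestamp, product name, price, threshold. Reading that history back takes only the `csv` module. The sketch below uses a hypothetical `lowest_logged_prices` helper that returns the lowest logged price per product and when it was recorded. It accepts any iterable of lines, so an open file works, as does a plain list in a test.

```python
import csv


def lowest_logged_prices(lines):
    """Return {product_name: (lowest_price, timestamp)} from price_alerts.csv rows.

    Rows are assumed to be: ISO timestamp, product name, price, threshold (no header).
    """
    lows = {}
    for row in csv.reader(lines):
        if len(row) < 3:
            continue  # skip blank or malformed rows
        timestamp, name = row[0], row[1]
        try:
            price = float(row[2])
        except ValueError:
            continue
        if name not in lows or price < lows[name][0]:
            lows[name] = (price, timestamp)
    return lows
```

Against the real file: `with open("price_alerts.csv", newline="", encoding="utf-8") as f: print(lowest_logged_prices(f))`.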

Step 13: Write your main function

Every tool is in place. Now we write the part that runs it all. The main function launches everything: browser, behavior, search, extraction, and alerts. Here’s how it works:

def main():
    driver = None
    for attempt in range(MAX_RETRIES):
        try:
            query = "nintendo switch 2 best buy"
            proxy, identity = assign_proxy_and_identity()
            driver, behavior = launch_stealth_browser(proxy, identity)
            logging.info(f"Behavior profile: {behavior['name']}")

            if not verify_stealth_setup(driver, identity):
                raise Exception("Stealth verification failed.")

            for google_attempt in range(1, GOOGLE_RETRY_LIMIT + 1):
                logging.info(f"Google search attempt {google_attempt}")
                success = perform_google_search(driver, behavior, query=query)
                if detect_block(driver):
                    raise Exception("Blocked during Google search.")

                if success:
                    logging.info("Google search succeeded.")
                    price = extract_price_from_bestbuy(driver, behavior)
                    if detect_block(driver):
                        raise Exception("Blocked during Best Buy visit.")

                    cleaned_price = clean_price_string(price)
                    if cleaned_price:
                        logging.info(f"Parsed price: {cleaned_price:.2f}")
                        for product in PRODUCTS:
                            if product["name"].lower() in query.lower():  # crude match
                                if cleaned_price <= product["threshold"]:
                                    logging.info(f"{product['name']} is below threshold of ${product['threshold']:.2f}")
                                    send_price_alert(product["name"], cleaned_price, product["threshold"])
                                    log_price_to_csv(product["name"], cleaned_price, product["threshold"])
                                    driver.quit()  # Clean exit
                                    sys.exit(0)  # Hard exit after success
                                else:
                                    logging.info("Price is above threshold: no alert needed.")
                    else:
                        # No usable price: raise so the outer loop retries with a new proxy + identity
                        raise Exception("Failed to parse a price.")
                    break
                else:
                    logging.warning("Google search failed. Retrying...")
                    time.sleep(GOOGLE_BACKOFF_BASE * google_attempt)
            else:
                raise Exception("All Google search retries failed.")

            driver.quit()
            break  # All good

        except Exception as e:
            logging.error(f"Attempt failed due to: {e}")
            backoff = BLOCK_BACKOFF_STEPS[min(attempt, len(BLOCK_BACKOFF_STEPS) - 1)]
            logging.info(f"Waiting {backoff}s before trying new proxy + identity.")
            try:
                driver.quit()
            except Exception:
                pass
            time.sleep(backoff)
    else:
        logging.critical("All attempts failed. Exiting.")
        sys.exit(1)

if __name__ == "__main__":
    main()

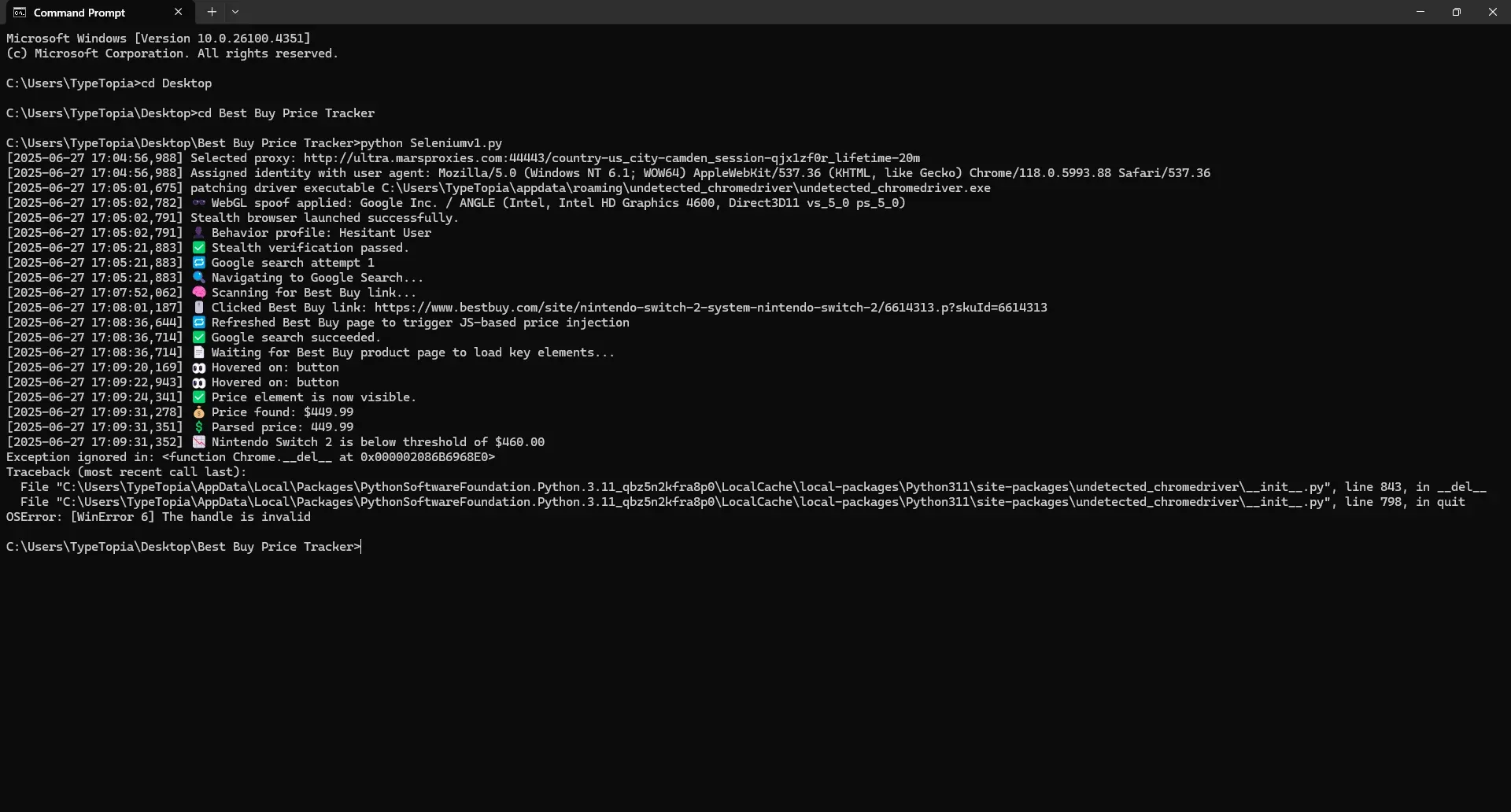

This is the main loop. It runs the entire tracking attempt from start to finish: proxy rotation, identity spoofing, fingerprint checks, page scraping, alerting, and logging.

It begins by defining the product search query. Then it pulls a fresh proxy and matching identity using assign_proxy_and_identity(), and launches a stealth browser with launch_stealth_browser(). Both of these were defined earlier to make sure each session looks like a real user from the same time zone as the proxy.

Once the browser is up, the script calls verify_stealth_setup() to make sure the session passes all fingerprint checks. If that looks good, it searches on Google using perform_google_search(), then checks for soft blocks with detect_block().

When it lands on Best Buy, it runs extract_price_from_bestbuy() to grab the current price, runs that through clean_price_string(), and compares it to the threshold set in the product list.

If the price is low enough, it fires off send_price_alert() and writes a record using log_price_to_csv(). If anything breaks along the way, the session is cleaned up, a new setup is pulled, and the process tries again.
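The retry timing is easy to misjudge when it is spread across two loops. This sketch reproduces the two formulas from the main loop, the indexed `BLOCK_BACKOFF_STEPS` lookup and the linear Google backoff, with the values from the global settings. The helper names are hypothetical, and the total counts only backoff sleeps in the worst case where every Google search fails without a detected block; the human-like delays inside each step come on top.

```python
# Values copied from the global settings
BLOCK_BACKOFF_STEPS = [5, 10, 20, 30]  # seconds
GOOGLE_BACKOFF_BASE = 3  # seconds


def block_backoff(attempt, steps=BLOCK_BACKOFF_STEPS):
    """Wait after failed main-loop attempt `attempt` (0-based); the last step repeats."""
    return steps[min(attempt, len(steps) - 1)]


def google_backoff(google_attempt, base=GOOGLE_BACKOFF_BASE):
    """Linear wait after failed Google search number `google_attempt` (1-based)."""
    return base * google_attempt


def worst_case_backoff(max_retries, google_retry_limit):
    """Total backoff sleep if every Google search fails and no block is detected."""
    per_attempt_google = sum(google_backoff(i) for i in range(1, google_retry_limit + 1))
    return sum(per_attempt_google + block_backoff(a) for a in range(max_retries))


print([block_backoff(a) for a in range(5)])  # [5, 10, 20, 30, 30]
print(worst_case_backoff(max_retries=5, google_retry_limit=3))  # 185
```

With the default settings, that worst case is 185 seconds of backoff alone: 18 seconds of Google backoff per attempt across five attempts, plus 95 seconds of block backoff.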

Step 14: Run your tracker

Here is your fully working price tracking script for Best Buy:

# Imports
import re
import base64
import pandas as pd
from selenium.webdriver.common.by import By
from selenium.webdriver.common.keys import Keys
from selenium.webdriver.common.action_chains import ActionChains
from selenium.webdriver.support.ui import WebDriverWait
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.chrome.options import Options
from selenium.webdriver.common.desired_capabilities import DesiredCapabilities
import undetected_chromedriver as uc
import smtplib
from email.message import EmailMessage
from email.mime.text import MIMEText
import time
import random
import os
import sys
import csv
import json
from datetime import datetime
import itertools
import uuid
import logging

# Configure logging
logging.basicConfig(level=logging.INFO, format='[%(asctime)s] %(message)s')

# Global settings
HEADLESS = False  # Set to True later if you spoof it correctly
MAX_RETRIES = 5
WAIT_MIN = 1.5  # base human wait
WAIT_JITTER = 2.0  # added jitter to simulate inconsistency
GOOGLE_RETRY_LIMIT = 3
GOOGLE_BACKOFF_BASE = 3  # seconds (waits 3s, 6s, 9s...)
BLOCK_BACKOFF_STEPS = [5, 10, 20, 30]  # seconds

# Example: list of products to search
PRODUCTS = [
    {"name": "Nintendo Switch 2", "threshold": 460.00}
]

# CSV output setup
CSV_FILE = "price_log.csv"

# Proxy pool
PROXIES = [
    "http://ultra.marsproxies.com:44443/country-us_city-acworth_session-5o10can2_lifetime-20m",
    "http://ultra.marsproxies.com:44443/country-us_state-alabama_session-hk50878d_lifetime-20m",
    "http://ultra.marsproxies.com:44443/country-us_state-arizona_session-0raeiekm_lifetime-20m",
    "http://ultra.marsproxies.com:44443/country-us_city-berea_session-z74rc852_lifetime-20m",
    "http://ultra.marsproxies.com:44443/country-us_city-camden_session-qjx1zf0r_lifetime-20m",
    "http://ultra.marsproxies.com:44443/country-us_city-columbia_session-n81nr351_lifetime-20m",
    "http://ultra.marsproxies.com:44443/country-us_city-daytonabeach_session-iffawas4_lifetime-20m",
    "http://ultra.marsproxies.com:44443/country-us_state-delaware_session-jgvgyrmv_lifetime-20m",
    "http://ultra.marsproxies.com:44443/country-us_city-denver_session-k038l41j_lifetime-20m",
    "http://ultra.marsproxies.com:44443/country-us_city-fairborn_session-edkpbnq6_lifetime-20m"
]

# Minimal city/state to timezone map (expand as needed)
TIMEZONE_MAP = {
    "city-acworth": "America/New_York",
    "city-berea": "America/New_York",
    "city-camden": "America/New_York",
    "city-columbia": "America/New_York",
    "city-daytonabeach": "America/New_York",
    "city-denver": "America/Denver",
    "city-fairborn": "America/New_York",
    "state-alabama": "America/Chicago",
    "state-arizona": "America/Phoenix",
    "state-delaware": "America/New_York"
    # Add more if needed
}

def extract_timezone(proxy_string):
    # Pull "city-<name>" or "state-<name>" out of the proxy string
    match = re.search(r"(city|state)-([a-z]+)", proxy_string)
    if not match:
        raise ValueError(f"Could not extract location from: {proxy_string}")
    loc_key = f"{match.group(1)}-{match.group(2)}"
    timezone = TIMEZONE_MAP.get(loc_key)
    if not timezone:
        raise ValueError(f"No timezone mapped for: {loc_key}")
    return timezone

# Identities to use

IDENTITY_POOL = [
    {
        "device_group": "desktop-windows",
        "timezone": "America/New_York",
        "user_agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/121.0.0.0 Safari/537.36",
        "viewport": (1366, 768),
        "platform": "Win32",
        "hardware_concurrency": 8,
        "max_touch_points": 0,
        "webgl_vendor": "Intel Inc.",
        "webgl_renderer": "Intel Iris Xe Graphics"
    },
    {
        "device_group": "desktop-windows",
        "timezone": "America/Chicago",
        "user_agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/117.0.5938.92 Safari/537.36",
        "viewport": (1600, 900),
        "platform": "Win32",
        "hardware_concurrency": 4,
        "max_touch_points": 0,
        "webgl_vendor": "NVIDIA Corporation",
        "webgl_renderer": "NVIDIA GeForce GTX 1050/PCIe/SSE2"
    },
    {
        "device_group": "desktop-mac",
        "timezone": "America/Phoenix",
        "user_agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 13_1) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.1 Safari/605.1.15",
        "viewport": (1440, 900),
        "platform": "MacIntel",
        "hardware_concurrency": 8,
        "max_touch_points": 0,
        "webgl_vendor": "Apple Inc.",
        "webgl_renderer": "Apple GPU"
    },
    {
        "device_group": "desktop-linux",
        "timezone": "America/Denver",
        "user_agent": "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/119.0.0.0 Safari/537.36",
        "viewport": (1280, 720),
        "platform": "Linux x86_64",
        "hardware_concurrency": 4,
        "max_touch_points": 0,
        "webgl_vendor": "X.Org",
        "webgl_renderer": "AMD Radeon RX 560 Series (POLARIS11, DRM 3.35.0, 5.4.0-73-generic)"
    },
    {
        "device_group": "desktop-windows",
        "timezone": "America/New_York",
        "user_agent": "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/118.0.5993.88 Safari/537.36",
        "viewport": (1360, 768),
        "platform": "Win32",
        "hardware_concurrency": 2,
        "max_touch_points": 0,
        "webgl_vendor": "Google Inc.",
        "webgl_renderer": "ANGLE (Intel, Intel HD Graphics 4600, Direct3D11 vs_5_0 ps_5_0)"
    },
    {
        "device_group": "desktop-linux",
        "timezone": "America/Chicago",
        "user_agent": "Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:109.0) Gecko/20100101 Firefox/119.0",
        "viewport": (1920, 1080),
        "platform": "Linux x86_64",
        "hardware_concurrency": 4,
        "max_touch_points": 0,
        "webgl_vendor": "Mesa/X.org",
        "webgl_renderer": "Mesa DRI Intel(R) HD Graphics 620 (KBL GT2)"
    },
    {
        "device_group": "desktop-mac",
        "timezone": "America/New_York",
        "user_agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/15.1 Safari/605.1.15",
        "viewport": (1280, 800),
        "platform": "MacIntel",
        "hardware_concurrency": 4,
        "max_touch_points": 0,
        "webgl_vendor": "Apple Inc.",
        "webgl_renderer": "AMD Radeon Pro 560X OpenGL Engine"
    },
    {
        "device_group": "desktop-windows",
        "timezone": "America/Phoenix",
        "user_agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:118.0) Gecko/20100101 Firefox/118.0",
        "viewport": (1536, 864),
        "platform": "Win32",
        "hardware_concurrency": 8,
        "max_touch_points": 0,
        "webgl_vendor": "Microsoft",
        "webgl_renderer": "Microsoft Basic Render Driver"
    },
    {
        "device_group": "desktop-linux",
        "timezone": "America/Denver",
        "user_agent": "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/115.0.0.0 Safari/537.36",
        "viewport": (1366, 768),
        "platform": "Linux x86_64",
        "hardware_concurrency": 6,
        "max_touch_points": 0,
        "webgl_vendor": "Google Inc.",
        "webgl_renderer": "ANGLE (AMD, Radeon RX 580 Series Direct3D11 vs_5_0 ps_5_0)"
    },
    {
        "device_group": "desktop-windows",
        "timezone": "America/New_York",
        "user_agent": "Mozilla/5.0 (Windows NT 6.3; Trident/7.0; rv:11.0) like Gecko",
        "viewport": (1024, 768),
        "platform": "Win32",
        "hardware_concurrency": 2,
        "max_touch_points": 0,
        "webgl_vendor": "Microsoft",
        "webgl_renderer": "Direct3D11"
    }
]

# Proxy assignment and identity logic
def assign_proxy_and_identity():
    proxy = random.choice(PROXIES)
    logging.info(f"Selected proxy: {proxy}")
    try:
        timezone = extract_timezone(proxy)
    except ValueError as e:
        logging.error(f"Failed to extract timezone: {e}")
        raise

    # Filter identities by matching timezone
    matching_identities = [idn for idn in IDENTITY_POOL if idn["timezone"] == timezone]
    if not matching_identities:
        logging.error(f"No identities found for timezone: {timezone}")
        raise Exception(f"No identities match timezone: {timezone}")

    identity = random.choice(matching_identities)
    logging.info(f"Assigned identity with user agent: {identity['user_agent']}")
    return proxy, identity

# Each profile defines timing, interaction, and navigation tendencies
BEHAVIOR_PROFILES = [
    {
        "name": "Fast Clicker",
        "base_delay": 0.5,
        "scroll_pattern": "none",
        "hover_before_click": False,
        "re_click_probability": 0.05,
        "slow_typing": False,
        "move_mouse_between_actions": True,
    },
    {
        "name": "Deliberate Reader",
        "base_delay": 2.5,
        "scroll_pattern": "linear",
        "hover_before_click": True,
        "re_click_probability": 0.02,
        "slow_typing": True,
        "move_mouse_between_actions": True,
    },
    {
        "name": "Back-and-Forth Browser",
        "base_delay": 1.2,
        "scroll_pattern": "jittery",
        "hover_before_click": True,
        "re_click_probability": 0.1,
        "slow_typing": True,
        "move_mouse_between_actions": True,
    },
    {
        "name": "Precise Shopper",
        "base_delay": 1.0,
        "scroll_pattern": "none",
        "hover_before_click": False,
        "re_click_probability": 0.01,
        "slow_typing": True,
        "move_mouse_between_actions": True,
    },
    {
        "name": "Researcher",
        "base_delay": 2.0,
        "scroll_pattern": "linear",
        "hover_before_click": True,
        "re_click_probability": 0.03,
        "slow_typing": True,
        "move_mouse_between_actions": True,
    },
    {
        "name": "Curious Clicker",
        "base_delay": 1.7,
        "scroll_pattern": "jittery",
        "hover_before_click": True,
        "re_click_probability": 0.2,
        "slow_typing": False,
        "move_mouse_between_actions": True,
    },
    {
        "name": "Hesitant User",
        "base_delay": 3.0,
        "scroll_pattern": "none",
        "hover_before_click": True,
        "re_click_probability": 0.0,
        "slow_typing": True,
        "move_mouse_between_actions": True,
    },
]
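
# Sketch (hypothetical helper, not in the original): one way the
# "scroll_pattern" field could become concrete scroll steps. "linear" scrolls
# in even steps, "jittery" varies each step and sometimes scrolls back up,
# and "none" returns no steps. The step sizes and probabilities are assumptions.
# Each step could be sent with driver.execute_script("window.scrollBy(0, arguments[0]);", delta).
import random

def scroll_plan(pattern, page_height, step=300, rng=random):
    if pattern == "none" or page_height <= 0:
        return []
    steps = []
    position = 0
    while position < page_height:
        if pattern == "linear":
            delta = step
        elif pattern == "jittery":
            delta = int(step * rng.uniform(0.4, 1.4))
            # Occasionally scroll back up a little, never above the top of the page
            if position > 0 and rng.random() < 0.2:
                delta = -min(position, int(step * rng.uniform(0.2, 0.5)))
        else:
            raise ValueError(f"Unknown scroll pattern: {pattern!r}")
        position += delta
        steps.append(delta)
    return steps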

# Randomly select a behavior profile for a session
def assign_behavior_profile():
    return random.choice(BEHAVIOR_PROFILES)
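
# Sketch (hypothetical helper): turn a profile's "base_delay" into a varied,
# human-looking pause instead of a fixed sleep. The log-normal spread around
# base_delay and the floor/cap values are assumptions, not tuned numbers.
# Usage idea: time.sleep(humanized_delay(behavior)) between actions.
import math
import random

def humanized_delay(profile, rng=random, spread=0.35, floor=0.2, cap_factor=3.0):
    base = profile["base_delay"]
    # lognormvariate(mu, sigma) has median exp(mu), so mu = ln(base) centers it on base
    delay = rng.lognormvariate(math.log(base), spread)
    # Clamp so a rare extreme draw never produces an instant or very long pause
    return min(max(delay, floor), base * cap_factor)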

# Launch an undetected Chrome session with the proxy and identity applied
def launch_stealth_browser(proxy: str, identity: dict):
    """
    Launch a stealthy undetected Chrome browser instance with proxy and identity settings applied.
    """
    # Extract host:port from a whitelisted proxy URL such as
    # 'http://ultra.marsproxies.com:44443'. Chrome's --proxy-server flag does
    # not accept credentials, so this assumes IP-whitelisted proxies.
    proxy_address = proxy.split("/")[2]

    # Stealthy browser options
    options = uc.ChromeOptions()
    options.add_argument(f"--proxy-server=http://{proxy_address}")
    options.add_argument(f"--window-size={identity['viewport'][0]},{identity['viewport'][1]}")
    # --lang takes a single locale; the Accept-Language list goes in prefs below
    options.add_argument("--lang=en-US")
    options.add_argument("--disable-blink-features=AutomationControlled")
    options.add_experimental_option("prefs", {
        "credentials_enable_service": False,
        "profile.password_manager_enabled": False,
        "intl.accept_languages": "en-US,en",
    })

    if HEADLESS:
        options.add_argument("--headless=new")

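    # Start undetected Chrome driver
    driver = uc.Chrome(options=options)

    # Seed a simulated returning-user cookie. The blank start page typically
    # has an opaque origin, where page-level document.cookie, localStorage,
    # and sessionStorage writes fail with a SecurityError, so the cookie is set
    # through CDP instead. The ".bestbuy.com" domain is an assumption about the
    # target site; storage items can be written after the first driver.get().
    driver.execute_cdp_cmd("Network.setCookie", {
        "name": "bb_user_sim",
        "value": "1234567",
        "domain": ".bestbuy.com",
        "path": "/",
    })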

    # Override navigator properties on every new document, before page scripts run
    driver.execute_cdp_cmd("Page.addScriptToEvaluateOnNewDocument", {
        "source": f"""
            Object.defineProperty(navigator, 'webdriver', {{get: () => undefined}});
            Object.defineProperty(navigator, 'userAgent', {{get: () => "{identity['user_agent']}" }});
            Object.defineProperty(navigator, 'platform', {{get: () => "{identity['platform']}" }});
            Object.defineProperty(navigator, 'hardwareConcurrency', {{get: () => {identity['hardware_concurrency']} }});
            Object.defineProperty(navigator, 'maxTouchPoints', {{get: () => {identity['max_touch_points']} }});
            Object.defineProperty(navigator, 'languages', {{get: () => ['en-US', 'en'] }});
            Object.defineProperty(navigator, 'language', {{get: () => 'en-US' }});
        """
    })

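    # Enable the DevTools Page domain
    driver.execute_cdp_cmd("Page.enable", {})

    # Fake chrome.runtime support. Real Chrome exposes window.chrome (not
    # navigator.chrome), and Runtime.evaluate only touches the current page,
    # so this is registered as a new-document script instead.
    driver.execute_cdp_cmd("Page.addScriptToEvaluateOnNewDocument", {
        "source": "window.chrome = window.chrome || {}; window.chrome.runtime = window.chrome.runtime || {};"
    })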

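    # Plugin, MIME type, media device, and screen overrides. Registered as a
    # new-document script because execute_script() only patches the page that
    # is currently loaded, and the patch is lost on the next navigation.
    driver.execute_cdp_cmd("Page.addScriptToEvaluateOnNewDocument", {
        "source": """
            Object.defineProperty(navigator, 'plugins', {
                get: () => [1, 2, 3, 4, 5]
            });
            Object.defineProperty(navigator, 'mimeTypes', {
                get: () => [1, 2, 3]
            });
            // navigator.mediaDevices is a getter-only property, so plain
            // assignment is silently ignored; define it instead
            Object.defineProperty(navigator, 'mediaDevices', {
                get: () => ({
                    enumerateDevices: () => Promise.resolve([
                        { kind: 'audioinput', label: 'Built-in Microphone' },
                        { kind: 'videoinput', label: 'Integrated Camera' }
                    ])
                })
            });
            Object.defineProperty(screen, 'colorDepth', {
                get: () => 24
            });
        """
    })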

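    # Enable the DevTools Network domain. This does not authenticate the proxy;
    # the proxy is assumed to be IP-whitelisted.
    driver.execute_cdp_cmd("Network.enable", {})

    # Match the HTTP User-Agent header to the spoofed navigator.userAgent;
    # the JavaScript override alone leaves the real UA in request headers
    driver.execute_cdp_cmd("Network.setUserAgentOverride", {
        "userAgent": identity["user_agent"],
        "platform": identity["platform"],
    })

    # Set timezone via DevTools
    driver.execute_cdp_cmd("Emulation.setTimezoneOverride", {"timezoneId": identity["timezone"]})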

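    behavior = assign_behavior_profile()

    # Spoof the unmasked WebGL vendor/renderer (37445 = UNMASKED_VENDOR_WEBGL,
    # 37446 = UNMASKED_RENDERER_WEBGL) for WebGL 1 and 2. The original getter
    # is invoked with .call(this, ...) because calling it unbound throws
    # "Illegal invocation".
    webgl_vendor = identity['webgl_vendor']
    webgl_renderer = identity['webgl_renderer']
    driver.execute_cdp_cmd("Page.addScriptToEvaluateOnNewDocument", {
        "source": f'''
            for (const ctx of [WebGLRenderingContext, WebGL2RenderingContext]) {{
                const getParameter = ctx.prototype.getParameter;
                ctx.prototype.getParameter = function(parameter) {{
                    if (parameter === 37445) return "{webgl_vendor}";
                    if (parameter === 37446) return "{webgl_renderer}";
                    return getParameter.call(this, parameter);
                }};
            }}
        '''
    })
    logging.info(f"WebGL spoof applied: {webgl_vendor} / {webgl_renderer}")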

    # Canvas and AudioBuffer fingerprint spoofing, applied to every new document
    driver.execute_cdp_cmd("Page.addScriptToEvaluateOnNewDocument", {
        "source": '''
            HTMLCanvasElement.prototype.toDataURL = function() {
                return "data:image/png;base64,canvasfakestring==";
            };
            const origGetChannelData = AudioBuffer.prototype.getChannelData;
            AudioBuffer.prototype.getChannelData = function() {
                const results = origGetChannelData.apply(this, arguments);
                for (let i = 0; i < results.length; i++) {
                    results[i] = results[i] + Math.random() * 0.0000001;
                }
                return results;
            };
        '''
    })

    logging.info("Stealth browser launched successfully.")
    return driver, behavior
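
# Sketch (hypothetical helper, not the original method): build the navigator
# override script with json.dumps() so identity values become valid JavaScript
# literals even if a string contains quotes or backslashes. The returned text
# could be passed as "source" to Page.addScriptToEvaluateOnNewDocument in place
# of the hand-quoted f-string used in launch_stealth_browser().
import json

def build_navigator_script(identity: dict) -> str:
    overrides = {
        "userAgent": identity["user_agent"],
        "platform": identity["platform"],
        "hardwareConcurrency": identity["hardware_concurrency"],
        "maxTouchPoints": identity["max_touch_points"],
        "languages": ["en-US", "en"],
        "language": "en-US",
    }
    # One getter override per property; json.dumps handles quoting and arrays
    lines = [
        f"Object.defineProperty(navigator, {json.dumps(name)}, {{get: () => {json.dumps(value)}}});"
        for name, value in overrides.items()
    ]
    lines.append("Object.defineProperty(navigator, 'webdriver', {get: () => undefined});")
    return "\n".join(lines)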

def verify_stealth_setup(driver, identity):
    try:
        # Go to a tool that reflects fingerprint info
        driver.get("https://bot.sannysoft.com")
        time.sleep(3)

        # Step 1: Basic navigator checks using JS.
        # webdriverHidden is true when navigator.webdriver is undefined or false
        # (a normal, non-automated Chrome reports false).
        navigator_checks = driver.execute_script("""
            return {
                webdriverHidden: !navigator.webdriver,
                platform: navigator.platform,
                hardwareConcurrency: navigator.hardwareConcurrency,
                languages: navigator.languages,
                userAgent: navigator.userAgent
            };
        """)

        if not navigator_checks['webdriverHidden']:
            logging.warning("webdriver flag detected; browser likely flagged as bot")
            return False

        if navigator_checks['platform'] != identity['platform']:
            logging.warning(f"Platform mismatch: expected {identity['platform']}, got {navigator_checks['platform']}")
            return False

        if navigator_checks['userAgent'] != identity['user_agent']:
            logging.warning(f"User-Agent mismatch: expected {identity['user_agent']}, got {navigator_checks['userAgent']}")
            return False

        # Step 2: Timezone check via JS
        browser_tz = driver.execute_script("return Intl.DateTimeFormat().resolvedOptions().timeZone")
        if browser_tz != identity['timezone']:
            logging.warning(f"Timezone mismatch: expected {identity['timezone']}, got {browser_tz}")
            return False

        # Passed all checks
        logging.info("Stealth verification passed.")
        return True

    except Exception as e:
        logging.error(f"Error during stealth verification: {e}")
        return False
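
# Sketch (hypothetical helper): the same comparisons as verify_stealth_setup(),
# but collecting every mismatch instead of stopping at the first one, which
# makes a failing identity easier to debug. `observed` is assumed to be a dict
# gathered in the browser, e.g. the navigator values above plus
# Intl.DateTimeFormat().resolvedOptions().timeZone stored under "timeZone".
def stealth_mismatches(observed: dict, identity: dict) -> list:
    expected = {
        "platform": identity["platform"],
        "userAgent": identity["user_agent"],
        "timeZone": identity["timezone"],
    }
    problems = []
    if observed.get("webdriver"):
        problems.append("navigator.webdriver is truthy")
    for key, want in expected.items():
        got = observed.get(key)
        if got != want:
            problems.append(f"{key}: expected {want!r}, got {got!r}")
    return problems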

def perform_google_search(driver, behavior, query="nintendo switch 2 best buy"):
    try:
        logging.info("Navigating to Google Search...")
        driver.get("https://www.google.com")

        # Wait for the search input to appear
        search_box = WebDriverWait(driver, 10).until(
            EC.presence_of_element_located((By.NAME, "q"))
        )

        # Simulate human typing with typos and backspaces
        typed = ""