What Is a Headless Browser? Benefits, Use Cases & Tools

You may have used a tool that runs on a headless browser: maybe Lighthouse, Screaming Frog, or some internal crawler someone else wrote.

But what actually makes these tools tick? What does it mean to run a browser “headless”? And why is this setup so popular with devs who care about speed, stealth, or scaling?

This guide is here to answer those questions. We’ll walk you through what a headless web browser is, why it matters for everything from automated tests to web scraping, and how it compares across tools like Selenium, headless Chrome, and more. We’ll also cover the benefits, smart proxy usage, and how to get everything running without drowning in config options.

If you’ve got five minutes, we’ll make it worth your time.

What is a headless browser?

When most people hear the word “browser,” they picture Chrome, Firefox, Safari, maybe even Brave or Opera. You click a tab, type in a URL, and something appears on screen. That’s the everyday experience.

But it’s not the only way to browse.

There’s another kind of browsing: it's quiet, controlled, and designed for people who want more from the web. You might be scraping product listings, testing how a site renders for users across different regions, or running UI checks in a CI/CD pipeline automatically after every deployment.

For tasks like those, there’s something called a headless browser.

How a headless browser works

It’s still a full browser under the hood. Same engine, same execution, same ability to request pages and interact with them. But it runs without a graphical user interface. No tabs. No windows. Nothing you see on screen.

Instead, a headless browser works entirely through code. You control it from your terminal or script, often using tools like Puppeteer, Playwright, or Selenium. It opens pages, clicks buttons, runs JavaScript, and you never see it happen.

That’s why headless browsers are so powerful for browser automation. No visual overhead. No extra memory eaten up by rendering tabs - just speed and control.

And despite being “invisible,” a headless browser still does everything a regular browser does. It downloads assets, parses HTML and CSS, executes JavaScript, makes AJAX requests, and builds a complete DOM.

The difference? A headless browser doesn’t bother to render anything visually, unless you ask it to, like when you want to capture a screenshot or generate a PDF.

It also makes automation more portable. No GUI means no display server or desktop session to set up, which makes headless browsers a natural fit for Docker containers, remote environments, or large-scale bot deployments.

Why use headless browsers?

Why someone turns to a headless browser depends on what they're trying to get done. An automation engineer might be chasing speed. A QA tester? Repeatability. An SEO specialist? A view of the rendered page the way Googlebot sees it, which isn’t always what humans see.

But no matter who you are, the reason you’re here is simple: you want something that regular browsers just can’t give you. So let’s unpack what that something is.

Faster execution

When you're browsing, you want the full visual experience. But with headless browsers, that’s the first thing to toss. No visual animations or screen rendering. Just code doing its job, silently.

In headless mode, the browser skips the fluff and gets straight to business. Whether you're running Selenium or Puppeteer, your scripts often run faster. How much faster depends on the page and how heavy it is to load.

You can queue up headless browser testing across thousands of URLs or deploy browser automation in the cloud without the GPU and memory demands of a full graphical desktop environment, though resource use still scales with workload and concurrency.

Automation

In headless browser mode, scripts load pages, fill forms, click buttons, and run JavaScript without wasting resources drawing graphics.

This leaner approach makes browser automation faster and also cleaner. Fewer dependencies mean fewer chances for graphical bugs or timing issues.

That’s why automation engineers, cybersecurity specialists, and DevOps teams prefer using a headless browser library when setting up automated tests or deploying bots inside CI/CD pipelines.

Lower resource usage

Ever wondered why browsers feel heavy when you open dozens of tabs? It’s because no open page is just sitting there. Each one is rendering visuals, eating RAM, and chewing up CPU time.

A headless browser skips that overhead.

With headless browsers, scripts load and interact with pages just like a normal browser would, but without the cost of visual rendering. That means less memory consumed, less CPU stress, and fewer GPU cycles wasted.

For web scraping, browser automation, or headless testing, this efficiency is gold. Headless browser tools make it practical to run many automated tests in parallel on modest hardware, though each browser instance still needs its own share of memory and CPU.

Common headless browser use cases

When used in the right context, a headless browser is a far more efficient tool than a regular one. No wasted rendering, no GUI slowing things down. But what does that actually look like in practice? How does that efficiency translate into real-world results?

Automated headless testing

Every time you click a button on a website, there’s a lot happening behind the scenes: APIs firing, JavaScript running, and DOM elements reacting. For developers, it’s not enough to build these functions; they need to test them. And when you’re on a tight release cycle, hiring people to manually test every interaction isn’t scalable.

That’s where automated headless testing comes in. Using headless browser tools like Selenium, Playwright, or Puppeteer, you can script test flows that simulate real user behavior like logging in, submitting forms, clicking nav items, and verifying that everything works as intended.

Since these tests don’t need to “see” the interface, they can run in headless mode, skipping on-screen display and focusing purely on logic. This speeds up automated tests, reduces memory use, and avoids flaky results caused by GUI-related hiccups.

Headless browser testing has become a core part of modern CI/CD pipelines, especially in agile teams pushing code weekly or even daily. This setup gives you a fast, lightweight testing layer that just works.
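To make the login flow concrete, here is a minimal Puppeteer sketch of one scripted test. It is not a complete test suite. The selectors (#username, #password, the submit button, and the logout link used as a success marker) are hypothetical placeholders for whatever your login page uses. The function takes an already-open Puppeteer page, so it can slot into any test runner.

```javascript
// Scripted login check for a Puppeteer `page`.
// All selectors here are hypothetical placeholders; adapt them to your site.
async function checkLogin(page, { url, username, password }) {
  await page.goto(url);

  // Type into the form fields the way a user would.
  await page.type('#username', username);
  await page.type('#password', password);

  // Start listening for the navigation before clicking, so a fast
  // redirect after submit can't slip past unnoticed.
  await Promise.all([
    page.waitForNavigation({ waitUntil: 'networkidle0' }),
    page.click('button[type="submit"]'),
  ]);

  // Treat the presence of a logout link as proof that login succeeded.
  const logoutLink = await page.$('a[href="/logout"]');
  return logoutLink !== null;
}
```

In a real run you would create the page with puppeteer.launch() and browser.newPage(). Read the credentials from environment variables instead of hard-coding them, and fail the CI job if checkLogin() returns false.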

Web scraping

Most businesses today know the data they need lives online. But getting to it is the hard part.

You might be staring at the perfect product listing, pricing grid, or legal filing, only to realize there’s no API, no export button, and no structured data. That’s where web scraping enters the picture.

And to do it right, you often need a headless browser.

Why? Because modern sites use JavaScript-heavy frameworks. The data you want probably doesn’t exist in the raw HTML. It shows up only after JavaScript runs in the browser, so scrapers that only download the raw HTML can’t see it.

But headless browser tools like Puppeteer can. In headless mode, these tools load the page like a user would, wait for the data to appear, and then extract it. This makes it possible to:

- Train AI models on real, current data from public sources

- Run competitor monitoring campaigns without raising flags

- Collect market intelligence that’s fresh, relevant, and custom-tailored

Whether you’re working with structured listings or scraping dynamic content behind login walls, a headless web browser gives you the realism and control you need without any of the visual overhead.
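Here is a minimal sketch of that load, wait, extract pattern with a Puppeteer page. The function name and the example selector are illustrative and not part of Puppeteer. The work is done by the real Puppeteer calls page.goto(), page.waitForSelector(), and page.$$eval().

```javascript
// Load a page, wait for JavaScript-rendered content, then extract its text.
// Expects a Puppeteer `page`; the selector you pass is site-specific.
async function scrapeRenderedText(page, url, selector, timeoutMs = 15000) {
  // Navigate and wait until the HTML document has been parsed.
  await page.goto(url, { waitUntil: 'domcontentloaded' });

  // The raw HTML may not contain the data yet, so wait for the element
  // that client-side JavaScript inserts.
  await page.waitForSelector(selector, { timeout: timeoutMs });

  // Read the trimmed text of every matching element inside the page.
  return page.$$eval(selector, (nodes) =>
    nodes.map((node) => node.textContent.trim())
  );
}
```

For example, once you have a page from browser.newPage(), calling scrapeRenderedText(page, url, '.price') returns the text of every element matching the hypothetical .price selector after it renders.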

Performance monitoring

If you're a technical SEO specialist or just someone who wants to truly understand your site’s performance, one of the most effective ways to do it is by running your own tests. Not just looking at frontend load times, but digging into real performance metrics using headless tools like Puppeteer or Playwright.

Say you want to test things like:

- Time to first byte (server speed)

- First contentful paint (when something actually shows up)

- Time to interactive (when the page can respond to clicks)

- Largest contentful paint (when the largest image or text block in the viewport finishes rendering)

- Third-party script impact (ads, analytics, chat widgets)

- Core Web Vitals overall

You don’t need a GUI to test these. You can spin up headless Chrome with tools like Puppeteer.

Headless browsers allow you to load the full page in headless mode, including all the JavaScript, third-party assets, and dynamic content, just as a real user would experience it, without ever launching a visible browser window. That means you can benchmark your site’s performance with precision while keeping the process lightweight and fully automated.
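As a starting point, the sketch below reads a few of those numbers straight from the browser's own Performance API after a headless page load. It covers only time to first byte, DOMContentLoaded, and first contentful paint. Largest Contentful Paint and the other Core Web Vitals need a PerformanceObserver or a tool like Lighthouse, which this sketch doesn't attempt. The helper names are hypothetical, and measurePage() expects a Puppeteer or Playwright page object.

```javascript
// Convert raw Performance API entries into headline metrics (milliseconds).
function summarizeTimings(navEntry, paintEntries) {
  const fcp = paintEntries.find((entry) => entry.name === 'first-contentful-paint');
  return {
    // Time to first byte: from navigation start to the first response byte.
    ttfbMs: navEntry.responseStart - navEntry.startTime,
    // When the HTML was parsed and DOMContentLoaded handlers finished.
    domContentLoadedMs: navEntry.domContentLoadedEventEnd - navEntry.startTime,
    // First contentful paint, or null if the browser didn't record one.
    fcpMs: fcp ? fcp.startTime : null,
  };
}

// Load a page headlessly and read the timings the browser recorded.
async function measurePage(page, url) {
  await page.goto(url, { waitUntil: 'load' });
  const { nav, paints } = await page.evaluate(() => ({
    nav: performance.getEntriesByType('navigation')[0].toJSON(),
    paints: performance
      .getEntriesByType('paint')
      .map((entry) => ({ name: entry.name, startTime: entry.startTime })),
  }));
  return summarizeTimings(nav, paints);
}
```

Running measurePage() over a list of URLs in a loop gives you a simple, repeatable benchmark you can track between deployments.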

Screenshot generation

Need to take a screenshot? If it’s a one-off, manual tools work fine. But when you need to take hundreds across multiple devices or regions and do it automatically, there’s no better solution than a headless browser.

Headless browser tools like Puppeteer and Playwright let you script everything: when to open a page, what to wait for, what part to capture, and where to save the image - all in headless mode, without ever launching a visual browser.

That’s why dev teams use headless browser setups to:

- Capture full-page screenshots across mobile, tablet, and desktop viewports

- Monitor how their landing pages render around the world using proxies

- Archive product pages as part of compliance, audits, or content verification

- Auto-generate screenshots after every code push

Unlike manual screenshot tools, headless browsers let you control exactly when the capture happens, so you get an accurate snapshot of what a real user would see, even if the page is JavaScript-heavy or slow to load. You can wait for specific DOM elements to appear, strip out banners or modals, or scroll down before capturing.
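A minimal Puppeteer sketch of the multi-viewport case might look like this. The three viewport sizes, the output file names, and the optional readySelector parameter are assumptions for illustration. The output folder must already exist.

```javascript
const path = require('node:path');

// Hypothetical device sizes; adjust them to the breakpoints your site uses.
const VIEWPORTS = [
  { name: 'mobile', width: 390, height: 844 },
  { name: 'tablet', width: 820, height: 1180 },
  { name: 'desktop', width: 1440, height: 900 },
];

// Capture one full-page screenshot per viewport with a Puppeteer `page`.
async function captureViewports(page, url, outDir, readySelector) {
  const files = [];
  for (const vp of VIEWPORTS) {
    await page.setViewport({ width: vp.width, height: vp.height });
    // networkidle2: at most two network connections for at least 500 ms.
    await page.goto(url, { waitUntil: 'networkidle2' });
    // Optionally wait for a specific element so late content is in the shot.
    if (readySelector) await page.waitForSelector(readySelector);
    const file = path.join(outDir, `${vp.name}.png`);
    await page.screenshot({ path: file, fullPage: true });
    files.push(file);
  }
  return files;
}
```

Hook this into a post-deploy step and you get a fresh set of screenshots after every code push.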

One thing all these headless browser use cases have in common is that they involve sending lots of automated requests. And if you send too many requests from the same IP, websites notice. You’ll start getting CAPTCHAs, blocks, or worse, bans.

That’s why a rotating residential proxy pool matters. It lets you assign a different IP address to each batch of requests, making your headless browser traffic look more human and far less predictable.
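If your provider gives you a list of proxy endpoints, per-batch rotation can be as simple as the sketch below. Many rotating residential services instead rotate IPs behind a single gateway address, and in that case you don't need client-side rotation at all. The function names are hypothetical. runBatch() takes the puppeteer module as an argument so the batching logic stays easy to test.

```javascript
// Split a URL list into fixed-size batches.
function toBatches(items, size) {
  const batches = [];
  for (let i = 0; i < items.length; i += size) {
    batches.push(items.slice(i, i + size));
  }
  return batches;
}

// Round-robin over a list of proxy endpoints.
function createProxyRotator(proxies) {
  let index = 0;
  return () => {
    const proxy = proxies[index % proxies.length];
    index += 1;
    return proxy;
  };
}

// Pair each batch of URLs with the proxy it should use.
function planBatches(urls, proxies, batchSize) {
  const nextProxy = createProxyRotator(proxies);
  return toBatches(urls, batchSize).map((batch) => ({ proxy: nextProxy(), urls: batch }));
}

// Launch one headless browser per batch, routed through that batch's proxy.
// `puppeteer` is the module from require('puppeteer').
async function runBatch(puppeteer, { proxy, urls }, handlePage) {
  const browser = await puppeteer.launch({ args: [`--proxy-server=${proxy}`] });
  try {
    const page = await browser.newPage();
    for (const url of urls) {
      await page.goto(url);
      await handlePage(page, url);
    }
  } finally {
    await browser.close();
  }
}
```

In use, you would loop over planBatches(urls, proxyList, 50) and await runBatch(require('puppeteer'), batch, handlePage) for each batch. Here handlePage is your own extraction function, and proxyList and the batch size of 50 are placeholders. Proxies that need a username and password also need page.authenticate().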

This is essential if you’re running headless browser jobs that hit hundreds or thousands of pages in a short window. A smart proxy setup keeps things smooth, fast, and under the radar.

Best headless browser tools

Let’s look at the most reliable headless browser tools used by developers, QA testers, and automation engineers.

Puppeteer

Puppeteer is a headless browser library for Node.js that gives you full control over Chrome or Chromium. You don’t have to launch a visible window or manually click anything. Instead, you write JavaScript or TypeScript scripts, and Puppeteer runs the show behind the scenes.

Puppeteer connects to the browser using the Chrome DevTools Protocol (CDP), meaning you can automate almost anything: clicking links, capturing screenshots, scraping data, generating PDFs, or running automated scripts.

Because it operates at such a deep level, Puppeteer is incredibly reliable for headless browser operations. You can run web scraping jobs, automate browsers, and push clean builds without missing a beat.

It’s open-source, actively maintained, and one of the best headless browser tools if you want real precision without unnecessary complexity. Here are the key features of Puppeteer:

- Full control over Chrome or Chromium

Puppeteer taps directly into Chrome’s engine by using the Chrome DevTools Protocol, the same API Chrome’s own Inspect tools use. That gives you real, granular control: you can capture console logs, simulate slow connections, throttle CPU performance, tweak network conditions, or dive deep into page structure.

- Automatic headless mode (with a toggle)

By default, Puppeteer launches Chrome in headless mode to maximize speed and minimize resource consumption. But if you need to debug your automation, flipping a simple switch lets you revert to a visible browser window. This gives you the flexibility to troubleshoot and visualize your script in real time, all without reworking your code.
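One way to wire up that toggle is to derive the launch options from an environment variable. The same script then runs headless in CI and visibly on your laptop. HEADFUL and SLOWMO are variable names invented for this sketch. headless and slowMo are real Puppeteer launch options.

```javascript
// Build Puppeteer launch options from environment variables so one script
// runs headless in CI and visibly while you debug.
// HEADFUL and SLOWMO are hypothetical variable names chosen for this sketch.
function buildLaunchOptions(env = process.env) {
  const headful = env.HEADFUL === '1';
  return {
    // true keeps Chrome windowless (Puppeteer's default); false shows the window.
    headless: !headful,
    // slowMo delays each Puppeteer operation by this many ms so you can follow along.
    slowMo: headful ? Number(env.SLOWMO || 100) : 0,
  };
}
```

You would then call puppeteer.launch(buildLaunchOptions()) and run the script with HEADFUL=1 set whenever you want to watch the browser work.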

- Advanced page interactions

Even though Puppeteer runs in headless mode, its interactions can feel remarkably human. It handles form inputs, button clicks, hover events, and even intricate dropdown selections. Whether you're automating web scraping tasks or running headless browser testing, you can script interactions that closely resemble user actions and tune their timing to match real-world patterns. Puppeteer won’t scroll on its own, but you can script it to scroll dynamically loaded pages the way a human would, so lazy-loaded content has a chance to appear before you extract it.
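On infinite-scroll pages you script the scrolling yourself, usually with a small loop like this sketch. It scrolls to the bottom, pauses, and stops once the page height stops growing. The maxRounds and pauseMs defaults are arbitrary assumptions, so tune them for the site you're working with.

```javascript
// Scroll to the bottom repeatedly until the page stops growing, giving
// lazy-loaded content a chance to render. Works with a Puppeteer `page`.
async function autoScroll(page, { maxRounds = 20, pauseMs = 800 } = {}) {
  let previousHeight = 0;
  for (let round = 0; round < maxRounds; round += 1) {
    const height = await page.evaluate(() => document.body.scrollHeight);
    if (height === previousHeight) break; // Nothing new loaded since the last scroll
    previousHeight = height;
    await page.evaluate(() => window.scrollTo(0, document.body.scrollHeight));
    // Give the site time to fetch and render the next batch of items.
    await new Promise((resolve) => setTimeout(resolve, pauseMs));
  }
  return previousHeight;
}
```

The maxRounds cap keeps the loop from running forever on pages that keep loading content indefinitely.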

- Network control (request/response interception)

Network interception is a powerful Puppeteer headless browser feature that lets you take total control of your network activity. Instead of blindly loading everything, you can filter out unnecessary resources like images or tracking scripts during browser automation or web scraping. This trims bandwidth usage and boosts speed, making your automated scripts lighter and quicker.
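Here is a minimal sketch of that filtering with Puppeteer's request interception. The set of resource types to drop is a judgment call. Blocking images, media, and fonts is an assumption that suits text scraping but not visual testing.

```javascript
// Resource types to drop; tune this list for your target pages.
const BLOCKED_TYPES = new Set(['image', 'media', 'font']);

// Enable Puppeteer request interception and abort heavy resources.
async function blockHeavyResources(page, blocked = BLOCKED_TYPES) {
  await page.setRequestInterception(true);
  page.on('request', (request) => {
    // With interception on, every request must be aborted or continued,
    // otherwise the page stalls waiting for it.
    if (blocked.has(request.resourceType())) {
      request.abort();
    } else {
      request.continue();
    }
  });
}
```

Call blockHeavyResources(page) right after browser.newPage() and before page.goto(), so the rules apply from the first request.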

- Built-in screenshot and PDF generation

When running headless browser testing, manually verifying layouts can drain your team's time. Puppeteer’s built-in page.screenshot() method lets you capture visual snapshots at any point in a script, for example after each test step. Its page.pdf() method also lets you quickly archive pages as PDFs for compliance or audits.
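Here is a small sketch of the archiving case, where one call saves a full-page PNG with page.screenshot() and a PDF with page.pdf(). The archivePage name, the A4 format, and the file naming scheme are assumptions.

```javascript
// Save a full-page PNG and a PDF of the same page for archiving.
// `baseName` is a path prefix such as 'archive/pricing'; the folder must exist.
async function archivePage(page, url, baseName) {
  // networkidle0 waits until there have been no network connections for 500 ms.
  await page.goto(url, { waitUntil: 'networkidle0' });

  const pngPath = `${baseName}.png`;
  const pdfPath = `${baseName}.pdf`;

  await page.screenshot({ path: pngPath, fullPage: true });
  // page.pdf() uses Chrome's print-to-PDF; printBackground keeps CSS backgrounds.
  await page.pdf({ path: pdfPath, format: 'A4', printBackground: true });

  return { pngPath, pdfPath };
}
```

Adding a date to baseName (for example archive/pricing-2024-06-01, a hypothetical name) gives you a simple dated audit trail.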

Playwright

Playwright is an open-source headless browser library created by Microsoft, designed specifically for developers who need streamlined browser automation. Unlike traditional headless browser solutions, Playwright supports multiple browser engines right out of the box: Chromium (powering Chrome and Edge), Firefox, and WebKit (the engine behind Safari), making it one of the best headless browsers out there.

Here’s why that matters in your day-to-day workflow: Imagine you're responsible for running automated headless browser scripts, ensuring your website renders perfectly everywhere. Playwright eliminates the headache of juggling multiple scripts or handling browser inconsistencies manually. Instead, you can set up automated scripts once and reliably execute them across every major browser.

This cross-browser flexibility enhances the accuracy of your headless testing, reduces the complexity of maintaining multiple scripts, and significantly streamlines your headless browser automation workflows.

Here are some of the key features of Playwright:

- Multi-browser support

One of the smartest things about Playwright is how it handles cross-browser automation in headless browsers. You don’t have to duplicate your scripts for Chromium, Firefox, and WebKit (the engine behind Safari). You build once, and Playwright runs it across all three engines cleanly.
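To show the build-once idea, this sketch runs the same page check in all three engines. It takes the object exported by require('playwright') as a parameter, an assumption made so the loop is easy to test. It also needs the browsers installed first with npx playwright install.

```javascript
// Run the same check in Chromium, Firefox, and WebKit with Playwright.
// `engines` is the object from require('playwright'): { chromium, firefox, webkit }.
async function titleInEveryEngine(engines, url) {
  const titles = {};
  for (const name of ['chromium', 'firefox', 'webkit']) {
    const browser = await engines[name].launch(); // Headless by default
    try {
      const page = await browser.newPage();
      await page.goto(url);
      titles[name] = await page.title();
    } finally {
      await browser.close();
    }
  }
  return titles;
}
```

Calling titleInEveryEngine(require('playwright'), 'https://www.wikipedia.org/') should resolve to an object with one title per engine. If the engines disagree, you've found a cross-browser issue.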

- Automatic waiting

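Instead of manually coding pauses or guesswork delays, Playwright waits for you. Actions like click() and fill() wait until the target element is visible, stable, and enabled, navigations wait for the page's load event by default, and web-first assertions in @playwright/test retry until they pass or time out. That makes your automated scripts much more stable, especially when you're integrating them into fast-moving CI/CD pipelines.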

- Advanced network control

Playwright’s advanced network control gives you serious power over every request and response during headless browser automation. You can block ads, simulate slow networks, modify API responses, or mock endpoints, all directly inside your headless browser scripts. It’s a huge advantage when you’re testing edge cases or streamlining web scraping workflows.
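Here is a short sketch of two of those tricks with Playwright's page.route(). It aborts image requests and answers one API call with canned JSON. The **/api/prices pattern and the payload are hypothetical. Playwright runs matching route handlers in the reverse order of registration, so the more specific mock, registered second, takes precedence over the catch-all.

```javascript
// Playwright sketch: block images and mock one JSON endpoint.
// The '**/api/prices' pattern and the payload are hypothetical.
async function installNetworkRules(page) {
  // Catch-all rule: abort image requests, let everything else through.
  await page.route('**/*', (route) => {
    if (route.request().resourceType() === 'image') {
      return route.abort();
    }
    return route.continue();
  });

  // Registered last, so Playwright tries it first for matching URLs:
  // answer the API call with canned data instead of hitting the server.
  await page.route('**/api/prices', (route) =>
    route.fulfill({
      status: 200,
      contentType: 'application/json',
      body: JSON.stringify([{ sku: 'demo', price: 9.99 }]),
    })
  );
}
```

Call installNetworkRules(page) before page.goto() so the rules are in place for the first request.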

- Native parallelism

With native parallelism built in, you can run multiple automated scripts at the same time across isolated headless browser contexts. One script tests login, another checks checkout, and a third monitors analytics tags - all running in parallel without collisions. It’s the kind of clean browser automation that keeps workflows fast and predictable, even inside high-pressure CI/CD pipelines.
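At the library level, that isolation comes from browser contexts. Each context has its own cookies, storage, and cache while sharing a single browser process. The sketch below runs several task functions concurrently, one context each. The tasks are whatever checks you supply. Inside @playwright/test, worker processes handle parallelism for you, so you'd normally configure workers rather than write this yourself.

```javascript
// Run independent checks concurrently, each in its own isolated browser
// context (separate cookies, storage, and cache) inside one Playwright browser.
async function runInParallel(browser, tasks) {
  return Promise.all(
    tasks.map(async (task) => {
      const context = await browser.newContext();
      try {
        const page = await context.newPage();
        return await task(page);
      } finally {
        // Close the context even if the task throws.
        await context.close();
      }
    })
  );
}
```

With a real browser from chromium.launch(), you might pass three task functions, such as hypothetical loginCheck, checkoutCheck, and tagCheck, and get their results back in the same order.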

- Built-in test runner

Most headless browser testing setups need you to bolt together a runner, an assertion library, a reporter, and a debug tool. Playwright skips the mess. Its built-in @playwright/test handles all of it out of the box: running tests, capturing snapshots, tracking results, and helping you debug fast. That saves hours you’d otherwise lose gluing half a dozen packages together, and it keeps your browser automation ready for real CI/CD pressure.

Selenium

Built originally as a full-on web testing framework, Selenium is battle-tested and flexible. But it’s also showing its age. Compared to lightweight headless browser options like Puppeteer and Playwright, Selenium can feel sluggish and heavy.

It requires more setup and is more prone to flaky tests because it doesn’t wait for elements automatically. Even in headless mode, it’s not exactly built for speed runs.

If you’re building complex cross-browser automation scripts, the kind that need to simulate a real person clicking through a real UI, Selenium still earns its place. But if you’re chasing pure efficiency for things like web scraping, headless browser testing, or tight CI/CD workflows, modern headless browser libraries are a better fit.

Here are some of the key features of Selenium:

- Cross-browser automation

One reason Selenium became the gold standard in browser testing is its deep support for cross-browser automation. It doesn’t just work with Chrome or Firefox. It can also drive Safari, Edge, and even legacy Internet Explorer through browser-specific drivers like ChromeDriver, GeckoDriver, and SafariDriver. Headless mode depends on the browser: Chrome, Firefox, and Edge support it, while Safari does not. This wide compatibility made Selenium a top choice for enterprises running automated tests across dozens of environments.

- Multi-language support

Not every team codes in JavaScript. That’s why Selenium’s multi-language support is a game-changer. You can write your headless browser scripts in Java, Python, C#, Ruby, or JavaScript, the officially supported bindings, and Kotlin teams typically use the Java bindings. This flexibility made Selenium a natural choice for companies with big, mixed tech stacks. It still matters today, especially when you’re weaving automation or headless testing into broader CI/CD pipelines that rely on multiple languages.

- WebDriver Protocol

At the heart of Selenium is the WebDriver Protocol, a W3C standard that defines how a browser should be remotely controlled by code. This separation between your test scripts and the browser makes Selenium incredibly flexible across environments. It’s also part of why Selenium can feel slower than newer libraries like Playwright. Each command travels to the driver and back as a separate HTTP request, while Playwright and Puppeteer keep a persistent connection open to the browser.

- Grid support for parallel headless browser testing

Selenium Grid is what you turn to when a single headless browser instance isn’t enough. It lets you distribute your automated scripts across multiple devices and operating systems at once, slashing your total run time. Sure, setting up a full Grid can feel clunky compared to the smooth parallelism you get out of the box with Playwright. But for heavyweight headless testing across lots of browsers, it’s still a workhorse that gets the job done.

HTMLUnit

HTMLUnit is a veteran, older than Selenium, even. But here’s the catch: it isn’t a real browser like the Chrome, Firefox, or WebKit builds that Playwright and Selenium WebDriver control. It’s a Java-based browser simulation that parses HTML and executes JavaScript with its own built-in engine in a lightweight environment.

That makes HTMLUnit incredibly fast for simple tasks like loading static pages, submitting forms, and crawling basic websites. If you just need to verify that a login form works or scrape a no-JavaScript page, it’s fine.

But if you throw modern web apps at it, like sites built with React, Angular, or Vue, HTMLUnit starts to crack. Its JavaScript support often struggles with heavy dynamic rendering, complex client-side frameworks, and newer browser APIs. For serious headless browser testing, automation, or web scraping today, tools like Playwright and Puppeteer leave HTMLUnit far behind.

Here are the key features of HTMLUnit:

- Lightweight and fast

HTMLUnit moves fast because it doesn’t pretend to be a real browser. It doesn’t render images or build visual layouts. Instead, it focuses on parsing the underlying HTML and handling basic JavaScript. That makes it ideal for quick tasks like verifying form submissions, crawling simple web structures, or running rapid smoke tests in CI/CD pipelines.

- Cookie and header management

It lets you fully manage cookies, custom headers, and authentication states during automation. This means you can simulate logged-in sessions, pass API keys, tweak user agents, or carry cookies across multiple requests without needing a full headless browser like Chrome.

- Simulates different browsers

You can configure it to pretend to be Chrome, Firefox, or Internet Explorer by adjusting the user agent and tweaking how it handles certain DOM behaviors. This is helpful when you're doing basic headless automation or web scraping against sites that gate access based on detected browser type. But keep in mind, this is a simulation, not true emulation. Unlike headless Playwright, HTMLUnit doesn’t use a real rendering engine, so it can’t fully replicate the quirks and behaviors of actual browsers.

How to implement headless browsing

Enough theory. It’s time to get your hands dirty. If you’re serious about headless browsing, the next step is knowing which tool fits your workflow.

Want full control over Chrome and simple scripting? We’ll walk you through Puppeteer. Need multi-browser flexibility for headless testing across Chrome, Firefox, and Safari? We’ll show you how Playwright handles it cleanly. Prefer a battle-tested tool that supports lots of languages and complex headless automation? We’ve got Selenium lined up too.

Setting up Puppeteer

Follow these simple steps to configure Puppeteer and run a basic script:

Step 1: Install Puppeteer

You don’t have to manually install Chrome to start headless browsing. When you install Puppeteer, it downloads a compatible browser build for you: Chrome for Testing in recent releases, Chromium in older ones. All you need to do is open your terminal and run one command. Here it is:

```bash
npm install puppeteer
```

If PowerShell or your terminal throws an error saying 'npm' is not recognized, don’t worry, it just means you need Node.js installed first. Download Node.js from the official website, install it, and try the command again.

Once Node and npm are installed, you’ll be ready to spin up Chrome in headless mode for web scraping, automated scripts, or clean CI/CD pipeline runs.

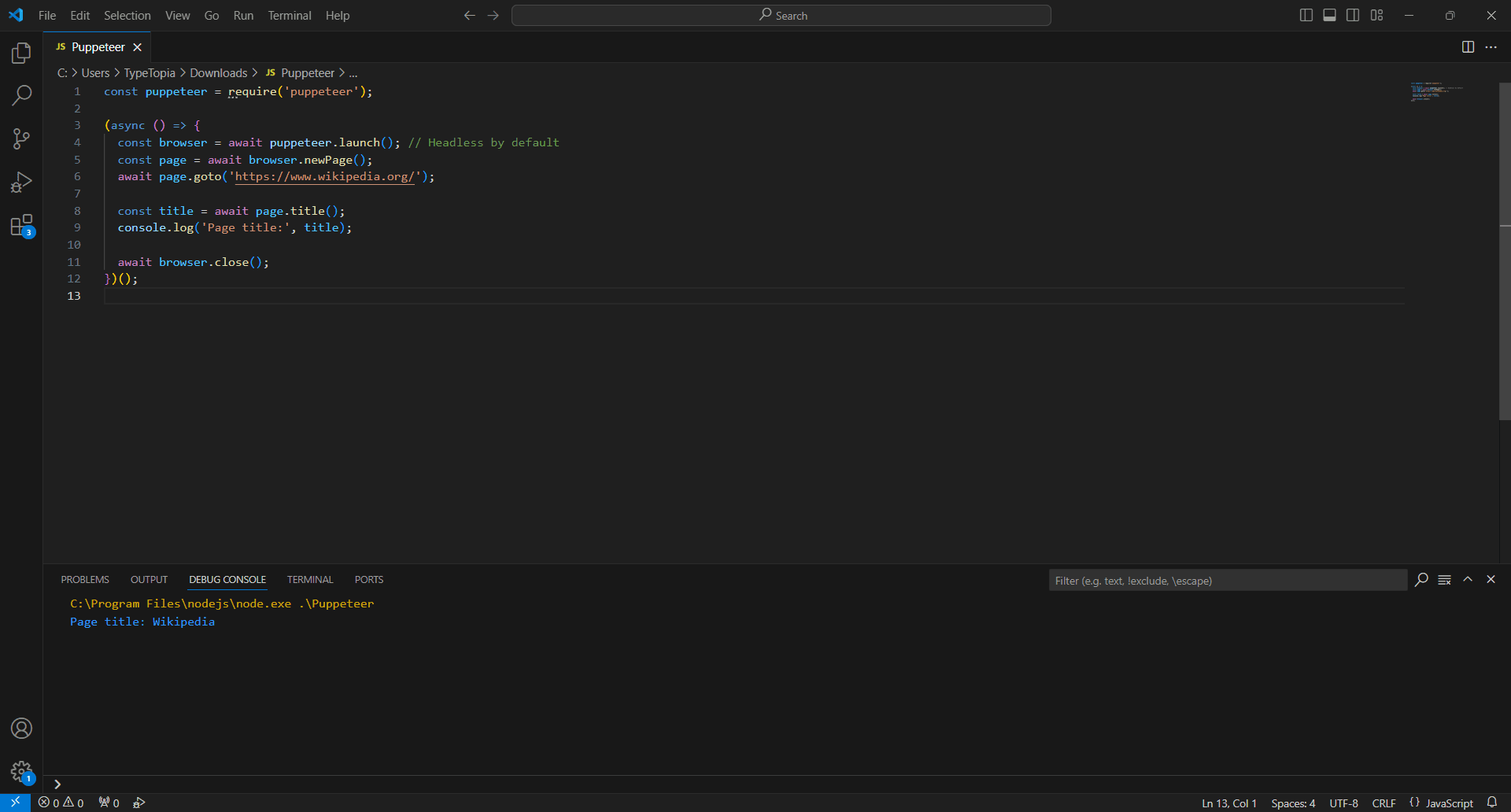

Step 2: Create a basic script

We'll start small and practical: launch Chrome in headless mode, visit the Wikipedia homepage, and print the page title. It’s the first real-world taste of headless automation and headless browsing.

Open up your favorite code editor (we’re rolling with Visual Studio Code for this) and create a new JavaScript file, such as index.js. Paste in the following code, then run it from your terminal with node index.js:

```javascript
const puppeteer = require('puppeteer');

(async () => {
  const browser = await puppeteer.launch(); // Headless by default
  const page = await browser.newPage();

  await page.goto('https://www.wikipedia.org/');
  const title = await page.title();
  console.log('Page title:', title);

  await browser.close();
})();
```

And just like that, you’ve built the foundation for real automation. From here, you can start steering the script anywhere you need it to go.

Setting up Playwright

Now that you’ve seen how easy it is to work with Puppeteer, let’s raise the bar. Playwright gives you even more control across browsers, and setting it up is just as simple. Here’s how to do it:

Step 1: Install Playwright

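Open your terminal and run this command:

```bash
npm install -D playwright
```

This installs the playwright library package, which is what a standalone Node.js script like the one below imports. The @playwright/test package adds Playwright’s built-in test runner on top, so install that instead if you plan to write spec files. Playwright is a Node.js library, which means it runs inside a Node.js environment. If Node.js isn't installed on your machine, the install command won’t work, because npm (Node Package Manager) comes bundled with Node.js.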

You can grab it easily at nodejs.org. Once Node and npm are set up, the Playwright installation will work smoothly.

Step 2: Install browsers

Because Playwright drives three browser engines (Chromium, Firefox, and WebKit), it downloads its own browser builds separately from the npm package. One command fetches all three. Just pop this into your terminal:

```bash
npx playwright install
```

Step 3: Create your first script

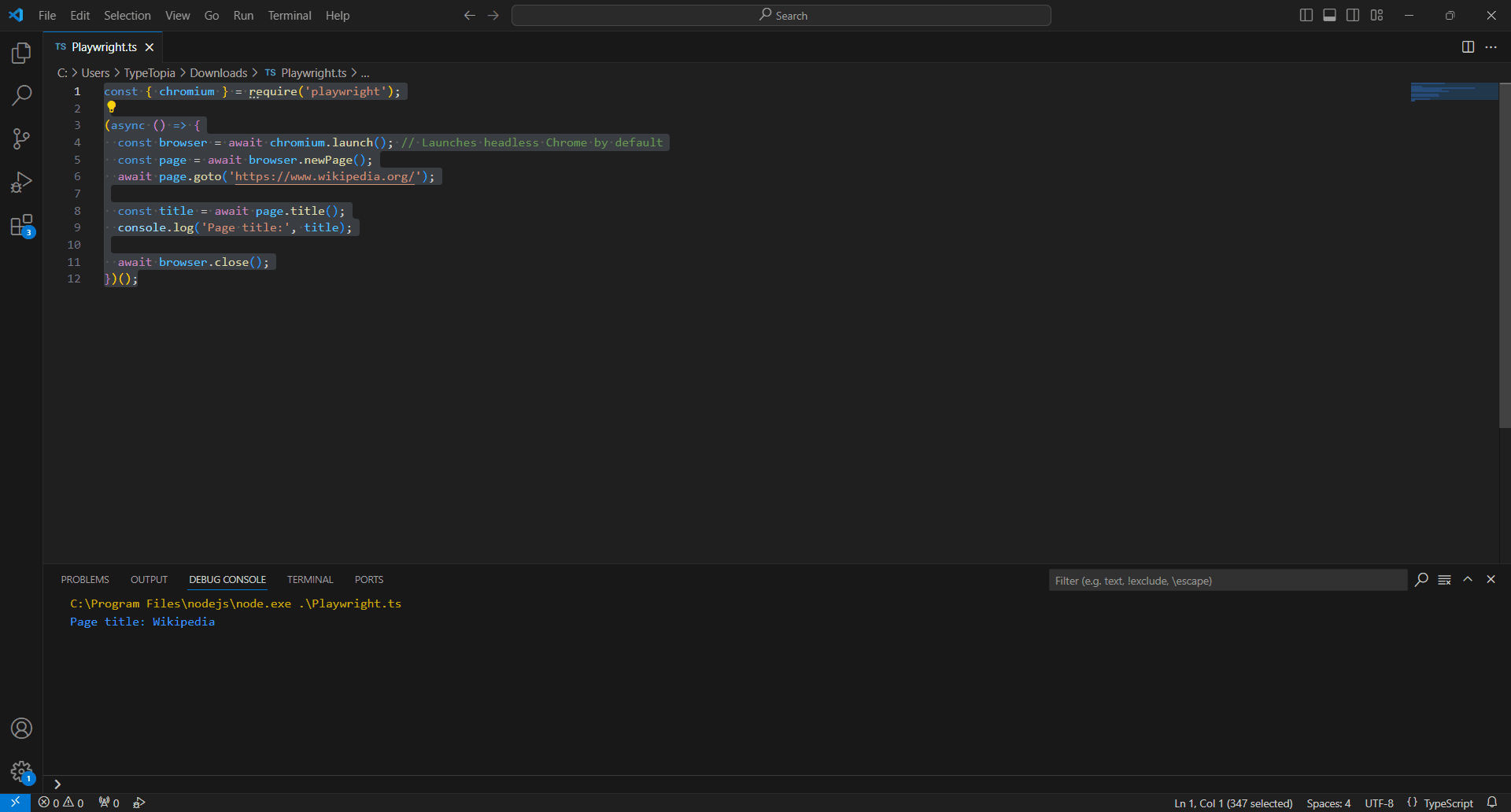

Let’s keep things simple for your first Playwright script, just like we did with Puppeteer. We’ll open a headless browser, visit Wikipedia, and print out the page title.

Open your favorite code editor (Visual Studio Code is a great option), create a new JavaScript file, and paste this in:

```javascript
const { chromium } = require('playwright');

(async () => {
  const browser = await chromium.launch(); // Launches headless Chromium by default
  const page = await browser.newPage();

  await page.goto('https://www.wikipedia.org/');
  const title = await page.title();
  console.log('Page title:', title);

  await browser.close();
})();
```

With the groundwork in place, you can now customize the script to fit your workflow and use case.

Setting up Selenium

While it's not always the lightest option, Selenium is still a trusted choice when your headless testing or automation needs to span multiple browsers, environments, and programming languages.

Here’s the straightforward way to get Selenium ready for headless browser testing:

Step 1: Install Selenium

The process of installing Selenium shifts depending on the language you're coding in. Java, Python, C#, and Ruby all have slightly different paths. For simplicity and consistency, we’ll stick with JavaScript.

It keeps things straightforward and ties in perfectly with the examples we’re building here. To install Selenium for JavaScript, pop this into your terminal:

```bash
npm install selenium-webdriver
```

Once that’s done, your Selenium toolkit will be ready to power headless browser testing across real websites.

Step 2: Download a browser driver

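Here’s something Selenium traditionally hasn’t done: hand you a browser and driver out of the box.

That’s a major contrast with tools like Puppeteer or Playwright, which download a compatible browser for you. Selenium drives a browser through a separate driver that has to match your installed browser version. Since Selenium 4.6, a built-in tool called Selenium Manager can find or download a matching driver automatically if none is on your PATH. On older versions, or if you prefer to manage the driver yourself, download it manually.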

Since we’re using Chrome for our automation setup, we’ll go with ChromeDriver.

So head over to the official ChromeDriver download page and pick a version that matches your system’s Chrome install. If you’re unsure which version of Chrome you’re running, just open Chrome and type:

chrome://settings/help

After downloading the right driver, unzip the folder. You’ll see a file called chromedriver.exe (on macOS and Linux, it’s just chromedriver).

You don’t need to add it to your system PATH unless you plan on reusing it across many projects. For simplicity, drop the driver file into the same folder as your Selenium test script and run the script from that folder. If Selenium still can’t find it, you can pass the path explicitly with chrome.ServiceBuilder.

Keeping the driver next to the script keeps the setup self-contained. Remember, though, that ChromeDriver has to match your Chrome version, so you’ll need to replace it whenever Chrome moves to a new major version.

Step 3: Point Selenium to the ChromeDriver executable

Let’s tie it all together. Once you’ve installed Selenium and downloaded ChromeDriver, you’re ready to test.

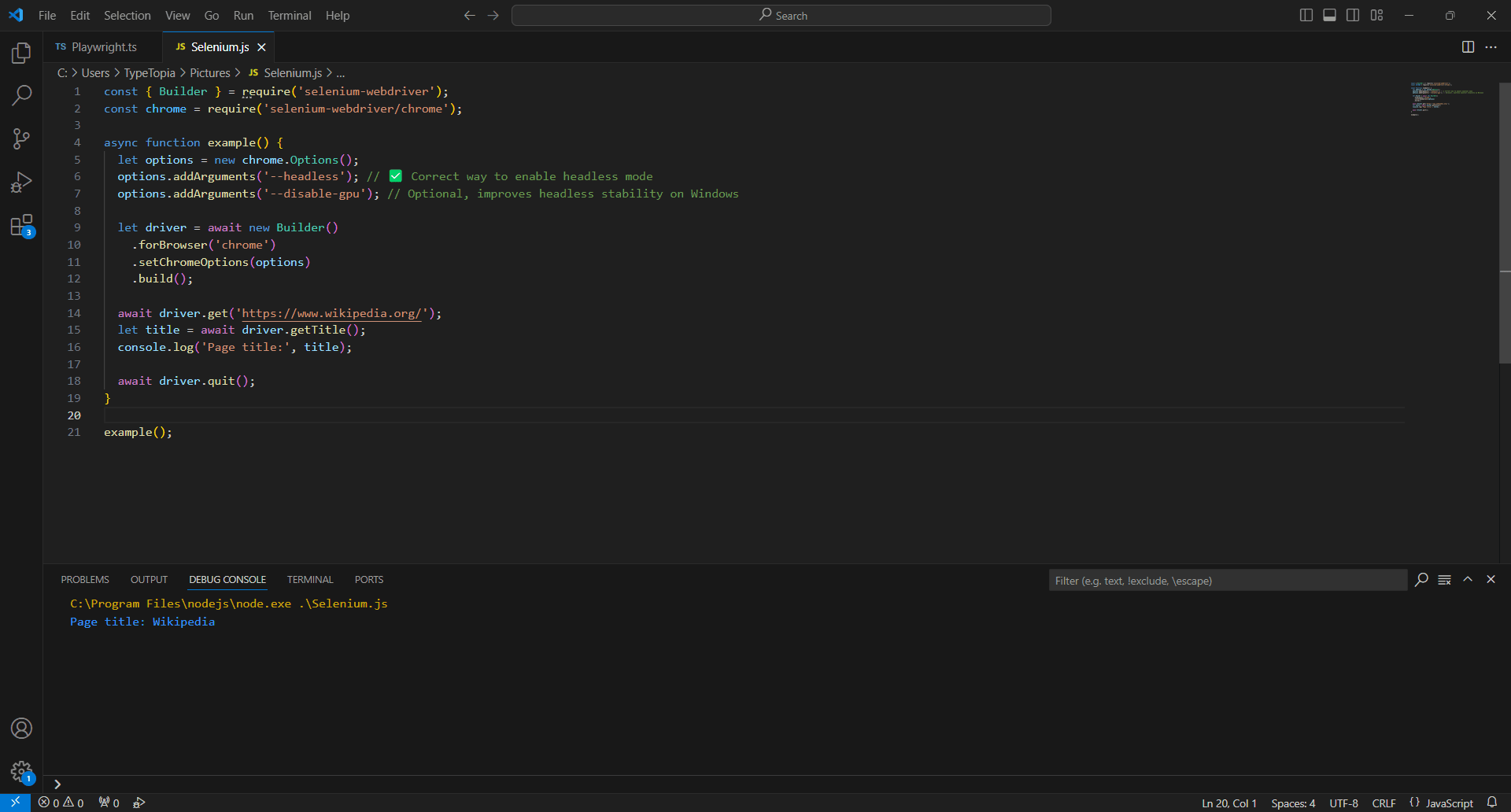

Here’s a simple script to run headless Chrome using Selenium in JavaScript:

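```javascript
const fs = require('node:fs');
const { Builder } = require('selenium-webdriver');
const chrome = require('selenium-webdriver/chrome');

async function example() {
  const options = new chrome.Options();
  options.addArguments('--headless'); // Run Chrome without a visible window
  options.addArguments('--disable-gpu'); // Historically advised on Windows; usually unnecessary on recent Chrome

  const builder = new Builder()
    .forBrowser('chrome')
    .setChromeOptions(options);

  // Use the ChromeDriver file in the current folder if it exists; otherwise
  // let Selenium (Selenium Manager in 4.6+) find a matching driver itself.
  const driverPath = process.platform === 'win32' ? './chromedriver.exe' : './chromedriver';
  if (fs.existsSync(driverPath)) {
    builder.setChromeService(new chrome.ServiceBuilder(driverPath));
  }

  const driver = await builder.build();

  try {
    await driver.get('https://www.wikipedia.org/');
    const title = await driver.getTitle();
    console.log('Page title:', title);
  } finally {
    await driver.quit(); // Close the browser even if something above fails
  }
}

example().catch(console.error);
```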

Best headless browser practices

Hidden pitfalls can derail your headless browsing workflow fast. These best practices will help you sidestep common traps and get the most out of your headless browser library.

Handle JavaScript rendering carefully

If you’ve ever scraped a page and gotten… well, nothing, just a bare shell with no data, that’s not your script misfiring. That’s JavaScript.

Modern websites don’t serve content all at once anymore. What loads first is often just a scaffold. The real stuff comes in after the fact, triggered by JavaScript. So if your headless browser dives in too early, you’ll miss the point entirely.

Here's how you can avoid this problem, depending on the browser you use:

Puppeteer

Puppeteer doesn’t “know” when a page is done rendering your data. It knows when the page has technically loaded (load, domcontentloaded), but not when that async JavaScript has finished painting the stuff you actually care about.

If you’re doing browser automation for scraping, testing, or CI/CD workflows, that gap matters.

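Luckily, Puppeteer gives you full control over that wait. You can use waitForSelector() to pause until a specific element appears, or waitForNetworkIdle() to sit tight until the browser stops making requests. During navigation, goto() with waitUntil: 'networkidle0' does the same job. Older guides also mention waitForTimeout() for a fixed delay, but it was deprecated and then removed in recent Puppeteer versions. A plain setTimeout wrapped in a Promise now fills that role.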

It’s not “smart” waiting, but it’s precise. You decide when the page is ready, and Puppeteer listens.

Playwright

Unlike Puppeteer, which makes you define what to wait for, Playwright waits automatically whenever you act on an element through a locator, so you rarely need explicit waits. It won’t know on its own when all of your async data has arrived. But if you target the element that holds that data, the wait is built in. Unless you manually override it, Playwright is already doing the smart waiting for you.
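For example, in this Playwright sketch the fill() call waits for the search box without any explicit sleep. There is one gap: allTextContents() returns immediately, so the code waits for the first result before reading them all. The Search placeholder text and the data-testid attribute are hypothetical. The sketch also assumes result items only appear after the search runs.

```javascript
// Playwright sketch: rely on locator auto-waiting instead of fixed sleeps.
// The placeholder text and data-testid below are hypothetical.
async function searchAndReadResults(page, url, query) {
  // goto() resolves once the page's load event fires (the default waitUntil).
  await page.goto(url);

  // fill() waits until the input is visible, enabled, and editable.
  const box = page.getByPlaceholder('Search');
  await box.fill(query);
  await box.press('Enter');

  // allTextContents() does not wait, so first wait for one result to be visible.
  const results = page.locator('[data-testid="result"]');
  await results.first().waitFor();
  return results.allTextContents();
}
```

If results already exist on the page before the search, waiting on the first one isn't enough. In that case, wait for something that only changes after the search completes.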

Selenium

With Selenium, timing becomes your responsibility. Unlike Playwright, which waits automatically before acting, or Puppeteer, where you can slot in clean, readable waits like waitForSelector(), Selenium expects you to know when the content appears and to build your own waiting logic around it.

In practice, that means choosing between implicit waits (a global timeout for element lookups) and explicit waits (in JavaScript, driver.wait() with conditions from the until module). Sometimes it also means wrapping lookups in try/catch blocks to handle stale or missing elements. Mixing implicit and explicit waits is a well-known source of unpredictable timeouts, and if you’re doing browser automation at scale, it’s easy to miss a beat.
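Here is a minimal sketch of an explicit wait in the JavaScript bindings. It takes the selenium-webdriver module as a parameter, an assumption made to keep the sketch testable. Normally you'd import By and until at the top of the file instead. The real helpers doing the work are driver.wait(), until.elementLocated(), and until.elementIsVisible().

```javascript
// Explicit wait with selenium-webdriver: wait for an element to exist,
// then for it to be visible, then read its text.
// `webdriver` is the module from require('selenium-webdriver').
async function waitForText(webdriver, driver, cssSelector, timeoutMs = 10000) {
  const { By, until } = webdriver;
  // Polls the DOM until a matching element exists or the timeout expires.
  const element = await driver.wait(until.elementLocated(By.css(cssSelector)), timeoutMs);
  // Being in the DOM isn't the same as being rendered, so wait for visibility too.
  await driver.wait(until.elementIsVisible(element), timeoutMs);
  return element.getText();
}
```

With a real driver, waitForText(require('selenium-webdriver'), driver, '#status') would resolve with the element's visible text. It rejects with a timeout error if the hypothetical #status element never shows up.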

Rotate proxies to avoid detection

Even the best headless browser library can’t outrun an IP ban.

The moment you start using browser automation at scale, especially for web scraping or automated scripts, you’re putting pressure on a site’s defenses. And most of them are ready. Flood them with requests from a single IP, and you’ll trigger rate limits, get flagged as a bot, or worse, blacklisted completely.

Proxies solve this neatly. With a rotating residential proxy pool, your headless browsing doesn’t stick out. Every few requests, the IP changes. From the outside, it looks like traffic from a normal mix of users, not a bot farm.

Let's look at how you can configure proxies across the different tools we've talked about:

Puppeteer

Setting up a proxy in Puppeteer is simple. You just launch headless Chrome with a proxy server argument, then handle the credentials using page.authenticate().

Here is the code:

```javascript
const puppeteer = require('puppeteer');

(async () => {
  // Route all browser traffic through the proxy.
  const browser = await puppeteer.launch({
    headless: true,
    args: ['--proxy-server=http://ultra.marsproxies.com:44443']
  });

  const page = await browser.newPage();

  // Supply the proxy credentials before the first navigation.
  await page.authenticate({
    username: 'mr45184psO8',
    password: 'MKRYlpZgSa_country-us_city-miami_session-iuzoneff_lifetime-30m'
  });

  await page.goto('https://httpbin.org/ip'); // Echoes the IP address the site sees
  console.log(await page.content());

  await browser.close();
})();
```

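Use the 'http://' scheme for the proxy address even when you visit HTTPS sites, assuming your provider exposes a standard HTTP proxy. Chrome tunnels HTTPS traffic through it with CONNECT, so the encrypted connection still runs end to end. Also call page.authenticate() right after newPage() and before the first goto(), so the proxy's credential challenge is answered instead of failing with a 407 error.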

Playwright

With the launch-argument approach above, Puppeteer applies one proxy to the whole browser instance. Playwright lets you define proxy settings at launch or per browser context. That’s a win if you're juggling multiple proxy identities in a single headless browser session.

Here’s how it looks in action:

const { chromium } = require('playwright');

(async () => {
  const browser = await chromium.launch({
    proxy: {
      server: 'http://ultra.marsproxies.com:44443',
      username: 'mr45184psO8',
      password: 'MKRYlpZgSa_country-us_city-miami_session-iuzoneff_lifetime-30m'
    }
  });
  const page = await browser.newPage();
  await page.goto('https://httpbin.org/ip');
  console.log(await page.content());
  await browser.close();
})();
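Because Playwright accepts a proxy per context, you can give each context its own sticky session. The sketch below builds one proxy object per context by swapping a random ID into a password template. The {session} placeholder, the buildContextProxies() helper, and the YOUR_USERNAME/YOUR_PASSWORD values are hypothetical. The template is only modeled on the session parameters visible in the example password above, so check your provider’s documentation for the exact syntax.

```javascript
const { randomBytes } = require('crypto');

// Builds one Playwright-style proxy object per browser context.
// ASSUMPTION: the provider selects a sticky session from a "session-<id>"
// segment in the password, as the example password suggests.
function buildContextProxies(count, { server, username, passwordTemplate }) {
  return Array.from({ length: count }, () => ({
    server,
    username,
    // A fresh random ID per context so each one gets its own session.
    password: passwordTemplate.replace('{session}', randomBytes(4).toString('hex')),
  }));
}

const proxies = buildContextProxies(3, {
  server: 'http://ultra.marsproxies.com:44443',
  username: 'YOUR_USERNAME',
  passwordTemplate: 'YOUR_PASSWORD_country-us_session-{session}_lifetime-30m',
});
console.log(proxies.map((p) => p.password));
```

Each object could then be passed as browser.newContext({ proxy: proxies[i] }). Depending on your Playwright version and platform, Chromium may also expect a proxy at launch for per-context proxies to take effect, so check the docs for your release.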

Selenium

Selenium doesn’t make proxy authentication easy. It handles basic proxy routing through Chrome flags just fine, but Chrome’s --proxy-server flag has no way to carry a username and password, and Selenium offers no simple built-in option for authenticated proxies.

Here’s the workaround: whitelist your IP at the proxy level. Once that’s done, you can drop the credentials and pass the proxy address straight into your headless Chrome launch. Like this:

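const { Builder } = require('selenium-webdriver');
const chrome = require('selenium-webdriver/chrome');

(async () => {
  // No credentials: the proxy authorizes requests from your whitelisted IP
  const proxy = 'http://ultra.marsproxies.com:44443';
  const options = new chrome.Options();
  // Run Chrome headless and route all traffic through the proxy
  options.addArguments('--headless=new', `--proxy-server=${proxy}`);
  const driver = await new Builder()
    .forBrowser('chrome')
    .setChromeOptions(options)
    .build();
  try {
    await driver.get('https://httpbin.org/ip');
    console.log(await driver.getPageSource());
  } finally {
    // Always shut the browser down, even if navigation fails
    await driver.quit();
  }
})();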
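Since the whitelisting workaround only works if no credentials are expected, it can help to fail loudly when a URL with a username and password slips into the Chrome flags. This is a minimal sketch; chromeProxyArgs() is a hypothetical helper, and the choice to throw rather than strip the credentials is a design assumption.

```javascript
// Builds Chrome arguments for an IP-whitelisted proxy. Chrome's
// --proxy-server flag cannot carry a username or password, so this
// rejects URLs that embed credentials instead of silently dropping them.
function chromeProxyArgs(proxyUrl, { headless = true } = {}) {
  const url = new URL(proxyUrl);
  if (url.username || url.password) {
    throw new Error(
      'Chrome cannot use proxy credentials from flags; whitelist your IP and pass host:port only'
    );
  }
  const args = [`--proxy-server=${url.protocol}//${url.host}`];
  if (headless) {
    args.push('--headless=new');
  }
  return args;
}

console.log(chromeProxyArgs('http://ultra.marsproxies.com:44443'));
```

In the Selenium script, options.addArguments(...chromeProxyArgs(proxy)) could replace the hand-written flags.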

Manage timeouts and delays

If your web scraping scripts click, scroll, and submit at machine speed, they’ll stick out like a sore thumb. That’s why adding short, natural-looking delays is a smart move.
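A fixed pause between every action is itself a regular pattern. One common alternative is to draw each delay at random from a range. The sketch below is illustrative: jitter() and humanDelay() are hypothetical helpers, and the 800 to 2500 ms defaults are arbitrary assumptions, not values recommended by any tool.

```javascript
// Returns a random whole number of milliseconds in [minMs, maxMs].
// `random` defaults to Math.random but can be swapped for a seeded
// generator in tests.
function jitter(minMs, maxMs, random = Math.random) {
  if (maxMs < minMs) {
    throw new RangeError('maxMs must be >= minMs');
  }
  return minMs + Math.floor(random() * (maxMs - minMs + 1));
}

// Sleeps for a randomized interval instead of a fixed one.
function humanDelay(minMs = 800, maxMs = 2500) {
  const ms = jitter(minMs, maxMs);
  return new Promise((resolve) => setTimeout(resolve, ms));
}

// Demo: a short, varying pause before each simulated action.
(async () => {
  for (const step of ['scroll', 'click', 'submit']) {
    const started = Date.now();
    await humanDelay(200, 600);
    console.log(`${step} after ${Date.now() - started} ms`);
  }
})();
```

In a Puppeteer or Playwright script, await humanDelay() would sit between actions such as page.click() calls.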

Here's how you can manage delays and timeouts in conjunction with proxies to avoid detection:

Puppeteer

This code shows how to combine timeouts and delays with a proxy:

const puppeteer = require('puppeteer');

// Helper that resolves after `time` milliseconds
function delay(time) {
  return new Promise((resolve) => setTimeout(resolve, time));
}

(async () => {
  const browser = await puppeteer.launch({
    headless: true,
    args: ['--proxy-server=http://ultra.marsproxies.com:44443']
  });
  const page = await browser.newPage();

  // Authenticate to the proxy server before the first navigation
  await page.authenticate({
    username: 'mr45184psO8',
    password: 'MKRYlpZgSa_country-us_city-miami_session-iuzoneff_lifetime-30m'
  });

  // Go to the target page
  await page.goto('https://httpbin.org/ip', {
    waitUntil: 'networkidle2', // Wait until at most 2 connections stay open for 500 ms
    timeout: 30000 // Reject with a TimeoutError if loading takes over 30 seconds
  });

  // Introduce a delay to mimic human-like behavior
  await delay(2000); // Wait 2 seconds

  // Extract and print page content
  const content = await page.content();
  console.log(content);

  await browser.close();
})();

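The timeout option inside page.goto() ensures the script doesn’t wait forever. If the page doesn’t load within 30 seconds, page.goto() rejects with a TimeoutError, and since this script doesn’t catch it, the script stops with an error. (30 seconds is also Puppeteer’s default navigation timeout, so setting it here mainly makes the limit explicit.)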

The waitUntil: 'networkidle2' condition tells Puppeteer to treat navigation as finished once there have been no more than 2 network connections for at least 500 ms, which is especially useful for dynamic sites that keep a few long-lived connections open.

Then, the custom delay() function adds a 2-second pause before interacting with the content. This pause mimics natural user behavior, which can help you avoid detection during web scraping or headless browser testing.
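Since a slow page makes page.goto() reject, one common refinement is to retry the navigation a few times with growing pauses instead of stopping on the first timeout. The sketch below shows generic exponential backoff; withRetry() and backoffSchedule() are hypothetical helpers, and the demo uses a stand-in task rather than a real browser.

```javascript
// Exponential backoff waits between attempts: baseMs, 2*baseMs, 4*baseMs, ...
function backoffSchedule(attempts, baseMs = 1000) {
  return Array.from({ length: Math.max(attempts - 1, 0) }, (_, i) => baseMs * 2 ** i);
}

// Runs `task` up to `attempts` times. Errors for which `shouldRetry`
// returns false are rethrown immediately; otherwise the last error is
// rethrown once all attempts are used up.
async function withRetry(task, { attempts = 3, baseMs = 1000, shouldRetry = () => true } = {}) {
  const waits = backoffSchedule(attempts, baseMs);
  let lastError;
  for (let i = 0; i < attempts; i++) {
    try {
      return await task(i + 1);
    } catch (err) {
      lastError = err;
      if (!shouldRetry(err) || i === attempts - 1) break;
      await new Promise((resolve) => setTimeout(resolve, waits[i]));
    }
  }
  throw lastError;
}

// Demo with a stand-in task that times out twice, then succeeds.
(async () => {
  const result = await withRetry(
    async (attempt) => {
      if (attempt < 3) throw new Error(`attempt ${attempt} timed out`);
      return 'loaded';
    },
    { attempts: 3, baseMs: 100 }
  );
  console.log(result); // loaded
})();
```

With Puppeteer, you might wrap the navigation as withRetry(() => page.goto(url, { waitUntil: 'networkidle2', timeout: 30000 }), { shouldRetry: (err) => err instanceof TimeoutError }), using the TimeoutError class that Puppeteer exports, so only timeouts are retried.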

Playwright

Use this script to implement timeouts with Playwright:

const { chromium } = require('playwright');

// Helper that resolves after `time` milliseconds
function delay(time) {
  return new Promise((resolve) => setTimeout(resolve, time));
}

(async () => {
  const browser = await chromium.launch({
    proxy: {
      server: 'http://ultra.marsproxies.com:44443',
      username: 'mr45184psO8',
      password: 'MKRYlpZgSa_country-us_city-miami_session-iuzoneff_lifetime-30m'
    }
  });
  const context = await browser.newContext();
  const page = await context.newPage();

  // Set navigation timeout to 30 seconds
  page.setDefaultNavigationTimeout(30000);

  // Go to the target page
  await page.goto('https://httpbin.org/ip', {
    waitUntil: 'networkidle' // Wait until there are no connections for 500 ms
  });

  // Introduce a short delay to mimic human behavior
  await delay(2000); // 2-second pause

  const content = await page.content();
  console.log(content);

  await browser.close();
})();

This script combines proxy authentication, timeout settings, and delay injection to control the pace and behavior of browser automation. The setDefaultNavigationTimeout(30000) call makes any navigation (like page.goto()) that takes longer than 30 seconds throw a TimeoutError instead of waiting indefinitely.

This protects your script from hanging on slow pages. The waitUntil: 'networkidle' condition waits until there have been no network connections for at least 500 ms, which helps with pages that load content asynchronously. Note that Playwright’s documentation discourages networkidle for tests and recommends web assertions there instead.

Finally, the delay() function introduces a 2-second pause to simulate the break a real user takes between actions. This kind of pacing is especially helpful when running web scraping or other automated scripts on sites with bot protection, as it makes your traffic look more natural.
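setDefaultNavigationTimeout() bounds each navigation, and Playwright’s page.setDefaultTimeout() does the same for actions, but neither caps a whole sequence of steps that includes your own delays. If you want an overall deadline for a scraping job, one option is a generic Promise.race() wrapper. withTimeout() below is a hypothetical helper, not a Playwright API, and it only stops waiting: the underlying work keeps running, so close the browser when it fires.

```javascript
// Rejects if `promise` hasn't settled within `ms` milliseconds.
function withTimeout(promise, ms, label = 'operation') {
  let timer;
  const timeout = new Promise((_, reject) => {
    timer = setTimeout(
      () => reject(new Error(`${label} timed out after ${ms} ms`)),
      ms
    );
  });
  // Clear the timer either way so it doesn't keep the process alive.
  return Promise.race([promise, timeout]).finally(() => clearTimeout(timer));
}

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

(async () => {
  // Finishes in time.
  console.log(await withTimeout(sleep(50).then(() => 'done'), 1000)); // done
  // Exceeds the deadline.
  try {
    await withTimeout(sleep(1000), 100, 'scrape job');
  } catch (err) {
    console.log(err.message); // scrape job timed out after 100 ms
  }
})();
```

In the Playwright script, you could wrap the navigation, delay, and extraction in one async function and await withTimeout() on it with a budget such as 60 seconds.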

Selenium

Selenium doesn’t handle timeouts and waits as gracefully as headless browser libraries like Puppeteer or Playwright. In Selenium, you have to manage several types of timeouts, such as pageLoad, script, and implicit, separately, and mixing implicit and explicit waits can produce unpredictable wait times, as Selenium’s own documentation warns.

Here is how the script looks in JavaScript:

const { Builder, By, until } = require('selenium-webdriver');
const chrome = require('selenium-webdriver/chrome');

// Helper that resolves after `time` milliseconds
function delay(time) {
  return new Promise((resolve) => setTimeout(resolve, time));
}

(async () => {
  const proxy = 'http://ultra.marsproxies.com:44443';
  const options = new chrome.Options();
  // Run Chrome headless and route all traffic through the proxy
  options.addArguments('--headless=new', `--proxy-server=${proxy}`);

  const driver = await new Builder()
    .forBrowser('chrome')
    .setChromeOptions(options)
    .build();

  try {
    // Set a 30-second timeout for page loads
    await driver.manage().setTimeouts({ pageLoad: 30000 });

    // Navigate to the page
    await driver.get('https://httpbin.org/ip');

    // Optional: wait up to 10 seconds for a specific element to appear
    await driver.wait(until.elementLocated(By.css('body')), 10000);

    // Wait 2 seconds to mimic human behavior
    await delay(2000);

    const content = await driver.getPageSource();
    console.log(content);
  } finally {
    await driver.quit();
  }
})();

This Selenium script runs headless Chrome through a proxy server and combines a page load timeout, an explicit wait, and an artificial delay. It configures the proxy via Chrome options, sets a 30-second page load timeout so the page can’t hang indefinitely, waits up to 10 seconds for the body element, and then pauses for 2 seconds to mimic human behavior before reading the page source.
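Selenium’s explicit waits, such as driver.wait() with until.elementLocated(), work by polling a condition until it holds or a deadline passes. To make that concrete, here is a minimal plain-JavaScript sketch of such a polling loop. waitFor() is a hypothetical helper; inside a real Selenium script you would normally just use driver.wait(), which also accepts a plain function as its condition.

```javascript
// Polls `condition` every `intervalMs` until it returns a truthy value
// or `timeoutMs` elapses, then throws.
async function waitFor(condition, { timeoutMs = 10000, intervalMs = 250 } = {}) {
  const deadline = Date.now() + timeoutMs;
  for (;;) {
    const value = await condition();
    if (value) {
      return value;
    }
    if (Date.now() >= deadline) {
      throw new Error(`Condition not met within ${timeoutMs} ms`);
    }
    await new Promise((resolve) => setTimeout(resolve, intervalMs));
  }
}

// Demo: a condition that becomes true on the third check.
(async () => {
  let checks = 0;
  const result = await waitFor(
    () => (++checks >= 3 ? `ready after ${checks} checks` : null),
    { intervalMs: 50 }
  );
  console.log(result); // ready after 3 checks
})();
```

An implicit wait, by contrast, applies one global delay to every element lookup, which is why the two are hard to reason about when combined.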

Conclusion

If you’ve made it all the way here, hats off: you now speak fluent headless. You’ve walked through how a headless web browser works, how tools like Puppeteer and Playwright compare, and what it takes to stay undetected (using residential proxies) when bots are doing the heavy lifting. You’ve seen the good (speed), the bad (authentication headaches), and the ugly (Selenium’s timing quirks). But now? Now you know better. May your scripts run green on the first try, your proxies stay unbanned, and your DOM waits never time out. High five, coder. You’ve got this.