Google Flights is a popular flight search service that aggregates flight schedules, airlines, and fares in one place. It holds a wealth of flight data, including airfare prices, timetables, and airline details.

Unfortunately, Google does not provide a public API for this platform. This means if you want to collect information at scale, for example, to monitor airfare trends or build your own comparison tool, you’ll need to scrape this website.

In this guide, we’ll cover three methods to do so: using Python (with Playwright), using a third-party Google Flights API, and employing no-code tools. We’ll also discuss how to manage anti-scraping measures and compare these approaches side by side.

Scraping Google Flights can unlock valuable data for many purposes. Whether you’re tracking flight prices, comparing airline options, or conducting market research, the search results give you the fares, schedules, and carrier details you need for decision-making.

For instance, you could track how flight prices change over time, analyze price trends, find the best times to book, and compare fares across airlines and dates.

However, dynamic content and anti-scraping protections make this data hard to collect without the right tools and methods. We’re here to help you avoid these issues, so let’s dive in and see how to scrape Google Flights.

How to scrape Google Flights with Python

One way to scrape Google Flights is by writing a custom Google Flights scraper in Python. Google Flights is a JavaScript-heavy site that loads results dynamically, so a normal HTTP request won’t return all the flight data.

Instead, you can automate a headless browser using a tool like Playwright (or Selenium). This allows your script to perform a search on the Google Flights page, wait for the results to load, and then extract data from the page’s HTML. Below is a step-by-step overview using Playwright, including proxy usage for scaling.

Step 1: Set up Python and Playwright

First, install Playwright:

pip install playwright

Add its browser drivers:

playwright install

You’ll also want to install any parsing libraries (like beautifulsoup4) if you plan to parse HTML, though Playwright can get text directly using CSS selectors. Initialize a new Python script and import the necessary modules.

Step 2: Launch a headless browser

Use Playwright to launch a headless browser and open the Google Flights page. For example:

import asyncio

from playwright.async_api import async_playwright


async def fetch_flights(departure, destination, date):
    async with async_playwright() as p:
        browser = await p.chromium.launch(headless=True)
        context = await browser.new_context()
        # Optionally, set a proxy for this context to avoid IP blocks
        # context = await browser.new_context(proxy={"server": "http://<PROXY_IP>:<PORT>"})
        page = await context.new_page()
        await page.goto("https://www.google.com/travel/flights")

        # Fill the search form
        await page.fill("input[aria-label='Where from?']", departure)
        await page.fill("input[aria-label='Where to?']", destination)
        await page.fill("input[aria-label='Departure date']", date)
        await page.keyboard.press("Enter")  # start search
        await page.wait_for_selector("li.pIav2d")  # wait for flight listings to load

        # Extract flight results
        flights = []
        flight_items = await page.query_selector_all("li.pIav2d")
        for item in flight_items:
            airline = await item.query_selector("div.sSHqwe.tPgKwe.ogfYpf")
            price = await item.query_selector("div.FpEdX span")
            time = await item.query_selector("span[aria-label^='Departure time']")

            # Get inner text from elements
            airline_name = await airline.inner_text() if airline else None
            price_text = await price.inner_text() if price else None
            dep_time = await time.inner_text() if time else None
            flights.append({"airline": airline_name, "price": price_text, "departure_time": dep_time})

        await browser.close()
        return flights


# Example usage:
results = asyncio.run(fetch_flights("LAX", "JFK", "2025-12-01"))
print(results)

In the above code, we load the Google Flights search, input the departure, destination, and date, then wait for the flight results. Each flight result is contained in an HTML <li> element with class pIav2d (the container for listings on the page).

Playwright’s API allows us to retrieve the inner text of each element, which we then store in a Python list of dictionaries for further use.
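The scraped prices arrive as display strings, so a small cleanup step is usually needed before you can sort or compare them. The sketch below is one way to do it, assuming prices are shown as whole currency units such as "$1,234"; the helper names parse_price and clean_flights are our own and not part of Playwright.

```python
import re
from typing import Optional


def parse_price(price_text: Optional[str]) -> Optional[int]:
    """Convert a scraped price string such as "$1,234" into an integer.

    Assumes whole currency units (no cents). Returns None when the text
    is missing or contains no digits.
    """
    if not price_text:
        return None
    digits = re.sub(r"\D", "", price_text)  # keep digits only
    return int(digits) if digits else None


def clean_flights(flights: list) -> list:
    """Add a numeric "price_value" field and drop rows without a usable price."""
    cleaned = []
    for flight in flights:
        value = parse_price(flight.get("price"))
        if value is not None:
            cleaned.append({**flight, "price_value": value})
    return cleaned


if __name__ == "__main__":
    sample = [
        {"airline": "Delta", "price": "$1,234", "departure_time": "8:00 AM"},
        {"airline": "United", "price": None, "departure_time": "9:30 AM"},
    ]
    print(clean_flights(sample))
```

With a numeric field in place, sorting by fare becomes a one-liner, for example sorted(rows, key=lambda r: r["price_value"]).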

Step 3: Handle dynamic content and pagination

Google Flights often shows a limited number of results by default. To load all results, you might need to scroll the page or click a "Show more flights" button. With Playwright, you can simulate clicking that button in a loop until no more results load. This ensures you gather all available flight listings for your query.

For example, you could do something like:

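# Click "Show more flights" until it disappears
# (runs inside fetch_flights(), after the first results have loaded)
from playwright.async_api import TimeoutError as PlaywrightTimeoutError

while True:
    try:
        more_button = await page.wait_for_selector('button[aria-label*="more flights"]', timeout=5000)
        await more_button.click()
        await page.wait_for_timeout(2000)  # small delay to load next batch
    except PlaywrightTimeoutError:
        # Playwright raises its own TimeoutError, not Python's built-in one
        break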

This loop attempts to find and click the "Show more flights" button and breaks out when the button no longer appears (meaning all results are loaded).

Step 4: Use proxies for scale

If you plan to scrape Google Flights frequently or at scale, incorporate proxy servers to avoid IP rate limits. Playwright allows setting a proxy per browser context. Using a rotating residential proxy service (such as MarsProxies or similar) can help distribute your requests across different IP addresses, making it less likely that Google flags and blocks your scraper.
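As a rough illustration, here is one way to cycle through a pool of proxies and produce the dictionary that Playwright’s proxy option expects (server, plus optional username and password). The proxy addresses and the make_proxy_rotator helper are placeholders of our own; swap in the endpoints your provider gives you.

```python
from itertools import cycle

# Hypothetical proxy endpoints; replace with the ones from your provider.
PROXY_POOL = [
    "http://proxy1.example.com:8000",
    "http://proxy2.example.com:8000",
]


def make_proxy_rotator(servers, username=None, password=None):
    """Return a function that gives the next Playwright proxy dict on each call."""
    if not servers:
        raise ValueError("at least one proxy server is required")
    pool = cycle(servers)  # loops over the pool endlessly, in order

    def next_proxy():
        config = {"server": next(pool)}
        if username and password:
            config["username"] = username
            config["password"] = password
        return config

    return next_proxy


next_proxy = make_proxy_rotator(PROXY_POOL)

# Inside the scraper, each search could then get its own context:
#     context = await browser.new_context(proxy=next_proxy())
```

Many rotating proxy services expose a single gateway address and rotate the exit IP for you. In that case the pool holds just one entry and the rotation happens on the provider’s side.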

Step 5: Save and analyze the data

After extraction, you can save the collected flight data to a file (JSON, CSV, etc.) for analysis. For instance, you might save the results list to a JSON file for later processing. With the data in hand, you could analyze price trends, feed it into a database, or use it in an application.
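A minimal sketch of this step, using only the standard library, might look like the following. It assumes the list of dictionaries returned by fetch_flights() above; the save_flights name and the file paths are our own choices.

```python
import csv
import json
from pathlib import Path


def save_flights(flights, json_path, csv_path):
    """Write the scraped flight dictionaries to a JSON file and a CSV file."""
    Path(json_path).write_text(
        json.dumps(flights, indent=2, ensure_ascii=False), encoding="utf-8"
    )

    # Column order follows the keys used in fetch_flights()
    fieldnames = ["airline", "price", "departure_time"]
    with open(csv_path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=fieldnames, extrasaction="ignore")
        writer.writeheader()
        writer.writerows(flights)  # None values are written as empty cells


if __name__ == "__main__":
    example = [{"airline": "Delta", "price": "$199", "departure_time": "8:00 AM"}]
    save_flights(example, "flights.json", "flights.csv")
```

JSON keeps the structure intact for later processing, while the CSV opens directly in a spreadsheet.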

By following the above steps, you essentially build your own Google Flights scraper that mimics a user performing a search and then harvests the results. Just remember that scraping a complex site like Google Flights requires careful handling of dynamic content and anti-bot measures. We cover proxies and CAPTCHAs in more detail in the section on managing anti-scraping measures.

Using an API for Google Flights data

If coding a full browser automation sounds daunting or you need a quicker solution, you can turn to third-party APIs. While there is no official Google Flights API from Google, several services provide search scraping as an API. These services handle the heavy lifting (browser simulation, proxies, CAPTCHA solving) on their end and return structured data to you.

One popular example is SerpApi’s Google Flights API, which allows you to query Google Flights search results and get JSON data back.

Using an API is straightforward: you make an HTTP request with the query parameters (like origin, destination, dates, etc.), and the API returns the flight data in JSON format. Below is a simple example using SerpApi in Python:

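import requests

params = {
    "engine": "google_flights",
    "departure_id": "JFK",          # departure airport code
    "arrival_id": "LHR",            # arrival airport code
    "outbound_date": "2025-12-25",
    "type": "2",                    # 2 = one-way; check SerpApi's docs for other trip types
    "api_key": "YOUR_SERPAPI_API_KEY"
}

response = requests.get("https://serpapi.com/search", params=params, timeout=30)
response.raise_for_status()
data = response.json()

# Extract some information from the results
for option in data.get("best_flights", []):
    # Each option lists its legs under "flights"; the airline is stored per leg
    legs = option.get("flights", [])
    airline = legs[0].get("airline") if legs else None
    price = option.get("price")
    print(airline, price)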

In this snippet, we send a request to SerpApi’s endpoint with a query. SerpApi (and similar services) will perform the search on Google Flights internally and return the results.

Typically, the JSON includes structures for the top flight options (often under keys like best_flights or other_flights) with details such as airline name, price, departure/arrival times, duration, and number of stops. You simply parse this JSON to get the data you need, without dealing with page navigation or HTML parsing yourself.
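To make the parsing step concrete, here is a sketch that flattens such a response into one row per flight option. The field names are assumptions about the response shape (each option holding its legs in a flights list, with airline and departure_airport per leg, plus price and total_duration on the option itself), so compare them against a real response before relying on them. The flatten_flight_options helper is our own.

```python
def flatten_flight_options(data):
    """Turn a Google Flights API response into a flat list of rows.

    Assumed shape: data["best_flights"] and data["other_flights"] are lists
    of options, each with a "flights" list of legs.
    """
    rows = []
    for group in ("best_flights", "other_flights"):
        for option in data.get(group, []):
            legs = option.get("flights", [])
            # Unique airline names, in the order they appear across legs
            airlines = list(dict.fromkeys(
                leg["airline"] for leg in legs if leg.get("airline")
            ))
            departure = legs[0].get("departure_airport", {}) if legs else {}
            rows.append({
                "group": group,
                "airlines": ", ".join(airlines),
                "stops": max(len(legs) - 1, 0),
                "price": option.get("price"),
                "total_duration": option.get("total_duration"),
                "departure_time": departure.get("time"),
            })
    return rows


if __name__ == "__main__":
    # Hand-written sample in the assumed shape, not real API output
    sample = {
        "best_flights": [{
            "flights": [
                {"airline": "Example Air", "departure_airport": {"time": "2025-12-25 08:00"}},
                {"airline": "Example Air"},
            ],
            "price": 450,
            "total_duration": 600,
        }]
    }
    print(flatten_flight_options(sample))
```

Flat rows like these can go straight into a CSV file or a database table.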

The advantages of using a scraping API are speed and ease of use. In a few lines of code, you get the data ready to use. These APIs also rotate IPs and deal with blocks on their side, so you are far less likely to see failed queries than with a home-grown scraper. SerpApi, for instance, is designed to return results even when Google tries to block automated queries.

The trade-off is cost: such APIs typically require a subscription or pay-per-request fee, and there may be limits on the number of queries per month. Still, for many projects, the convenience is worth it, especially if you lack the time to maintain a custom scraper.

No-code options for Google Flights scraping

Not a programmer? No problem: there are web scraping tools that let you scrape Google Flights without writing code. Tools like Octoparse, ParseHub, or Apify provide user-friendly interfaces to extract information from websites.

Let’s take Octoparse as an example of a no-code approach.

Octoparse offers an auto-detect feature and even preset templates specifically for Google Flights data. This means you can point Octoparse to a page on Google Flights, and it will attempt to identify elements like flight prices, airline names, times, etc., automatically.

Here’s a quick step-by-step guide:

1. Input the URL

Launch Octoparse (desktop app or cloud platform) and enter the URL for the Google Flights results you want to scrape. This could be a generic Google Flights search page or a specific pre-filled search URL. Start the process, and Octoparse will load the page within its interface.

2. Auto-detect and refine data fields

Octoparse can often auto-detect the repeating pattern of flight listings and propose data fields (e.g., airline name, price, duration, stops). You can review these fields and make adjustments if needed. For example, if you also need the flight duration or the number of layovers, ensure those fields are selected.

Octoparse allows you to click on elements in the page to capture data or adjust CSS selectors behind the scenes. You can also deal with pagination or scrolling: Octoparse may handle infinite scroll automatically, or you can configure it to click a "load more" button if present.

3. Run the scraper

Once your fields are set, run the extraction. Octoparse will navigate through the Google Flights results, scrape each flight listing with the available flight data, and compile the data. You can watch it click through pages and gather info in real time.

If Google prompts a CAPTCHA, some no-code tools can even integrate solutions to bypass it (Octoparse, for instance, has built-in CAPTCHA-solving and IP rotation features).

4. Export the data

After the run, you can export the scraped flight data in formats like CSV, Excel, JSON or directly to Google Sheets. This makes it easy to use the data for analysis or load it into databases.
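If you later want to work with an exported CSV in code, a few lines of Python are enough. The sketch below finds the cheapest fare per airline; the column names airline and price are assumptions, so adjust them to whatever your export actually uses. The cheapest_by_airline helper is our own.

```python
import csv
import re


def cheapest_by_airline(csv_path, airline_col="airline", price_col="price"):
    """Return {airline: lowest price} from an exported CSV of flight listings.

    Prices are read as whole currency units, so "$1,050" becomes 1050.
    Rows without a price are skipped.
    """
    cheapest = {}
    # utf-8-sig also copes with the byte-order mark some exports include
    with open(csv_path, newline="", encoding="utf-8-sig") as f:
        for row in csv.DictReader(f):
            digits = re.sub(r"\D", "", row.get(price_col) or "")
            if not digits:
                continue
            price = int(digits)
            airline = (row.get(airline_col) or "").strip() or "Unknown"
            if airline not in cheapest or price < cheapest[airline]:
                cheapest[airline] = price
    return cheapest


if __name__ == "__main__":
    # "flights_export.csv" is a placeholder file name
    print(cheapest_by_airline("flights_export.csv"))
```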

Using no-code tools requires minimal technical knowledge. The upside is that the setup is quick, and you might get results within minutes by following a visual wizard. Also, these platforms often come with cloud scraping options, meaning the scraping can be done on their servers, and you don’t have to worry about IP bans (they often provide IP proxy pools).

The downside is flexibility: you are somewhat limited to the features of the tool. If Google changes its site structure, you might need to reconfigure the scraper or wait for the tool provider to update their template.

Managing anti-scraping measures

Since Google is aware that people may try to extract data automatically, Google Flights has anti-scraping measures. When you scrape Google Flights, especially at scale, you might encounter IP blocks or CAPTCHA challenges. Here’s how to manage these obstacles:

- IP rotation & proxies

Frequent requests from a single IP address will quickly trigger Google’s defenses (you might get blocked or see CAPTCHA verifications). Using rotating proxies is essential to stay under the radar.

A proxy network will assign your scraper a new IP periodically or per request. High-quality residential proxies are ideal since they route traffic through real consumer internet connections, making your requests look more like those of regular users.

- Handling CAPTCHAs

If Google suspects a bot, it may serve a reCAPTCHA challenge. Solving CAPTCHAs manually is not feasible for large-scale scraping, so you’ll need a solution to handle them. One approach is to integrate third-party CAPTCHA-solving services (like 2Captcha, AntiCaptcha, etc.), which can solve visual or audio CAPTCHAs for a fee.

When using Playwright or Selenium, you can also attempt to avoid CAPTCHAs by slowing down actions, enabling headful (non-headless) mode, and making sure to use a proper user-agent string so you appear as a real browser.
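Those three adjustments map onto Playwright’s launch and context options. The sketch below only builds the option dictionaries; the user-agent string and viewport are example values, and human_like_browser_options is a helper name of our own.

```python
# Example desktop Chrome user agent; use a current one that matches your browser.
EXAMPLE_USER_AGENT = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36"
)


def human_like_browser_options(user_agent=EXAMPLE_USER_AGENT, headless=False, slow_mo_ms=250):
    """Build keyword arguments for Playwright's launch() and new_context().

    headless=False opens a visible browser window, slow_mo adds a pause
    (in milliseconds) after each Playwright action, and user_agent sets the
    string sent with every request.
    """
    launch_kwargs = {"headless": headless, "slow_mo": slow_mo_ms}
    context_kwargs = {
        "user_agent": user_agent,
        "locale": "en-US",
        "viewport": {"width": 1366, "height": 768},
    }
    return launch_kwargs, context_kwargs


# Usage inside the scraper:
#     launch_kwargs, context_kwargs = human_like_browser_options()
#     browser = await p.chromium.launch(**launch_kwargs)
#     context = await browser.new_context(**context_kwargs)
```

None of these settings guarantees that a CAPTCHA won’t appear. They only make the session look less like a default automation setup.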

In practice, overcoming anti-scraping measures requires a bit of trial and error. Start with small scraping sessions and monitor if you get blocked. Adjust your strategy accordingly: increase rotation frequency, add random delays between actions, or integrate a web scraping tool/API that manages these aspects.

In most cases, these two measures are enough to scrape Google Flights with few interruptions.
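For the random delays mentioned above, a pair of small helpers is often enough. This is a sketch with arbitrary default timings that you would tune during your own test runs; it pairs a jittered pause between actions with an exponential backoff for use after a block or CAPTCHA.

```python
import random


def jittered_delay(base_seconds=2.0, jitter_seconds=1.5, rng=random):
    """Return a pause between base_seconds and base_seconds + jitter_seconds."""
    return base_seconds + rng.uniform(0, jitter_seconds)


def backoff_delay(attempt, base_seconds=5.0, cap_seconds=120.0, rng=random):
    """Exponential backoff with full jitter.

    The upper bound doubles with each failed attempt (0, 1, 2, ...) until it
    reaches cap_seconds; the actual wait is a random value below that bound.
    """
    ceiling = min(cap_seconds, base_seconds * (2 ** attempt))
    return rng.uniform(0, ceiling)


# Usage inside a Playwright script (wait_for_timeout expects milliseconds):
#     await page.wait_for_timeout(jittered_delay() * 1000)
#
# After a blocked request, wait longer before retrying:
#     await asyncio.sleep(backoff_delay(attempt))
```

Randomizing the pauses avoids the perfectly regular request rhythm that makes automated traffic easy to spot.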

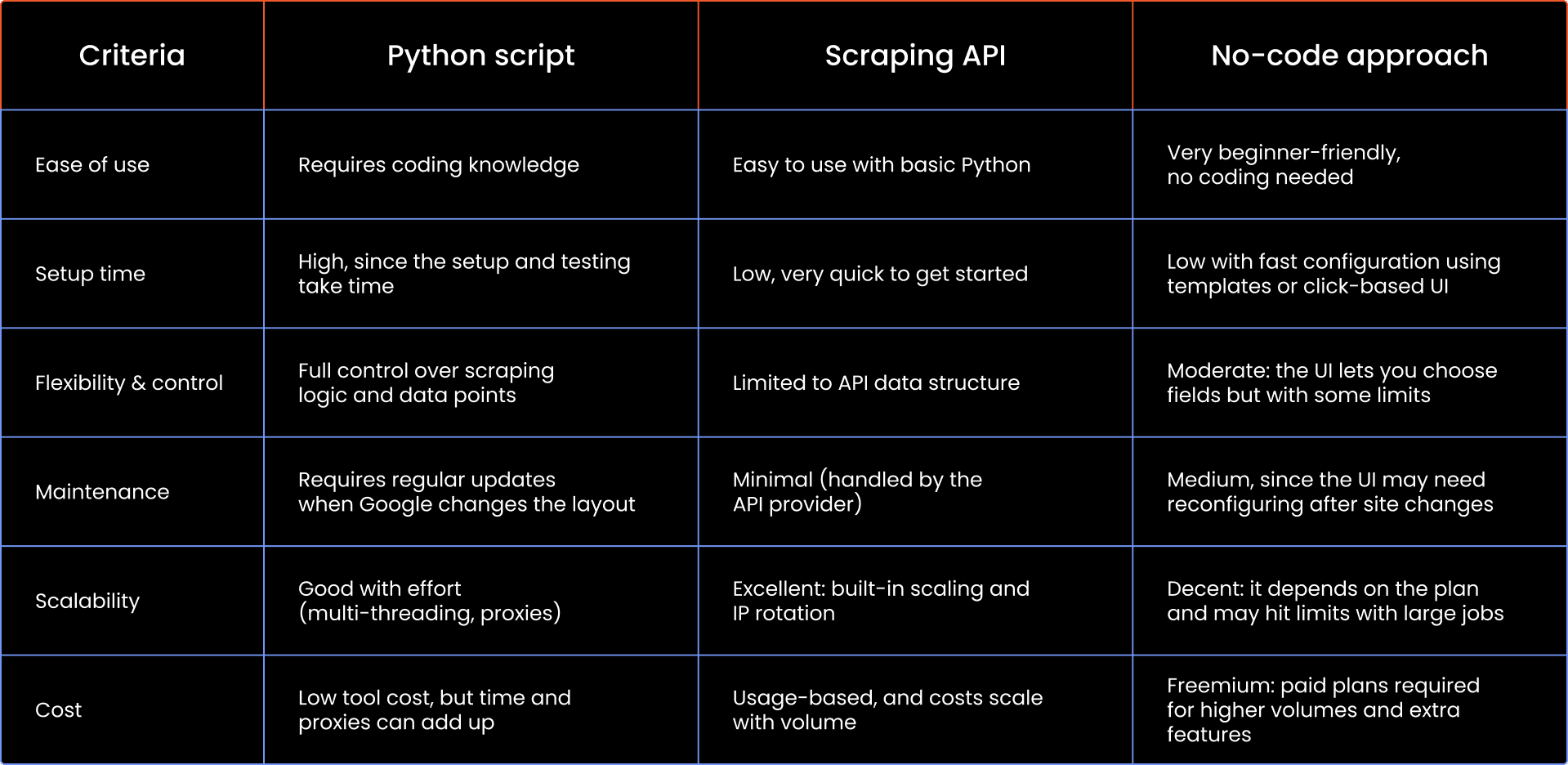

Scaling your scraping: own code vs API vs no-code

Now that we’ve discussed three approaches, how do they stack up against each other? Choosing between writing your own script, using an API, or a no-code solution depends on your specific needs and resources. Here’s a quick comparison:

Final thoughts

There are plenty of reasons to scrape Google Flights, from analyzing pricing trends or building an aggregator to simply looking for the best deals. Whatever scraping method you choose, having the right tools and a solid strategy makes all the difference. Don’t forget to join our Discord community to connect with others, get tips, and share experiences!

Is scraping Google Flights legal?

Scraping public data from Google Flights may violate Google's terms of service but isn’t illegal in most jurisdictions. Always check the site's terms and scrape responsibly (do not overload servers, send requests sensibly, and so on).

What tools are best for scraping flight data?

For coding: Python + Playwright. For quick results: SerpApi or similar APIs. For non-coders: Octoparse or ParseHub. Choose based on your skills and needs.

How do I handle CAPTCHA and IP blocks when scraping?

Use rotating proxies to avoid IP bans and CAPTCHA-solving services if needed. APIs and premium no-code tools often handle these for you automatically.

What flight details can I scrape?

Most of what the search results display: airline name, flight number, price, flight duration, stops, departure/arrival times, and more.