If you had to pick one command-line tool to carry with you into every bash script, cron job, or server debug session, would it be cURL or Wget?

Tough question, right? They both download files, they're both crazy lightweight, and they've both been around forever. But are they interchangeable? Not quite: the way they handle downloads and the kinds of tasks they're best at differ in some important ways.

So if you're trying to figure out which one fits your workflow, or whether you should master both, you're in the right place. We're going to look at the core differences, real-world examples, and how to pick the one that makes your life easier right now. Let's break it down.

What is cURL?

Let's talk about cURL. Even if you've never typed a cURL command manually, chances are you've used a tool that relies on it. It's built into everything from software installers to backend scripts. Originally launched in the 90s, this command line tool has become essential to how the internet works. Its real power comes from libcurl, the library that allows thousands of programs to download files, make HTTP requests, and communicate over dozens of network protocols.

Key Features of cURL

Before we get to the whole cURL and wget debate, here's what makes cURL tick:

- Protocol support: cURL supports more than 20 protocols including HTTP, HTTPS, FTP, FTPS, SCP, and SFTP. This makes it incredibly versatile for developers working with everything from public APIs to private FTP servers or secure data transfers.

- Data transfer capabilities: You can use cURL to download files, upload payloads, pass headers, and manage sessions all from your terminal. It's especially useful when you want to automate these tasks with shell scripts or CI pipelines.

- libcurl: What makes cURL stand out in the whole cURL and wget discussion is libcurl, the library that powers cURL and integrates into applications in dozens of languages. From browsers to IoT devices, libcurl keeps modern systems connected.

- Authentication: You can pass credentials directly into a cURL HTTPS request, which is super handy when you need to hit a secured endpoint inside a shell script or automation pipeline.

- Header control: Need to mimic browser traffic or test how a server responds to different clients? The cURL command lets you set custom headers with -H, great for simulating real-world HTTP requests and bypassing scraper blocks.

- Proxy support: If you're using the cURL command to download files at scale or test geo-targeted content, proxy support is a must. cURL makes it easy to route traffic through authenticated HTTP, SOCKS5, or even residential proxies with a simple --proxy flag.
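To make the authentication and proxy points above a bit more concrete, here is a minimal sketch of a helper that sends a request through a proxy with basic-auth credentials. The function name fetch_via_proxy, the proxy address, the credentials, and the URL in the usage line are all placeholders made up for illustration.

```shell
#!/bin/sh
# Sketch only: fetch_via_proxy is a hypothetical helper, and the values in
# the usage line at the bottom are placeholders, not real endpoints.

# fetch_via_proxy PROXY_URL USER:PASSWORD TARGET_URL
fetch_via_proxy() {
    # --proxy routes the request through the given proxy
    #   (for example an http:// or socks5h:// URL).
    # --user sends HTTP basic-auth credentials to the target server.
    # --fail makes curl exit non-zero on HTTP 4xx/5xx responses,
    #   so a calling script can detect the error.
    curl --silent --show-error --fail \
        --proxy "$1" \
        --user "$2" \
        "$3"
}

# Usage (hypothetical values):
#   fetch_via_proxy "http://proxy.example.com:8080" "alice:s3cret" "https://example.com/report.pdf"
```

Wrapping the flags in a function keeps the proxy and credentials out of every individual call, which tends to be easier to maintain inside a larger script.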

cURL Use Cases

Below we'll explain the cURL command across scenarios like authentication, proxies, and basic file downloads.

Downloading a file and saving it with a specific name

Let's say you want to download a file from a URL and save it with a specific name. You can tell cURL exactly what to name the file using the -o flag. Here is what that looks like:

curl -o custom-name.pdf https://example.com/report.pdf
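If you would rather keep the name the server uses, cURL also has an uppercase -O flag. The sketch below wraps both choices in one hypothetical helper, download_as, and adds -f and -L so that HTTP errors fail loudly and redirects are followed; treat it as one reasonable set of defaults rather than the only way to do it.

```shell
#!/bin/sh
# Sketch only: download_as is a hypothetical helper name.

# download_as URL [FILENAME]
download_as() {
    if [ -n "${2:-}" ]; then
        # -o writes the download to the file name you choose.
        curl -fL -o "$2" "$1"
    else
        # -O keeps the file name from the URL (report.pdf for .../report.pdf).
        curl -fL -O "$1"
    fi
}

# Usage:
#   download_as https://example.com/report.pdf                   # saves report.pdf
#   download_as https://example.com/report.pdf custom-name.pdf   # saves custom-name.pdf
```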

Testing an API that needs authentication and a custom header

Say you're testing an API endpoint that needs a bearer token and expects JSON data. You also want to set a custom user agent so the request looks like it's coming from a browser and not from a script. Here is the syntax for your curl command:

curl -X POST https://api.example.com/data \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer YOUR_TOKEN" \
  -H "User-Agent: Mozilla" \
  -d '{"key":"value"}'

- curl -X POST https://api.example.com/data \ Tells cURL to make a POST request to the specified URL

- -H "Content-Type: application/json" \ Sets the Content-Type header so the server knows you are sending JSON

- -H "Authorization: Bearer YOUR_TOKEN" \ Sends your bearer token so the API can authenticate the request

- -H "User-Agent: Mozilla" \ Sets the user agent header to make your request look like it's coming from a browser

- -d '{"key":"value"}' The actual data you are sending to the server
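In a script you often care less about the response body than about whether the call succeeded. Here is a rough sketch of how the request above could be wrapped so that only the HTTP status code comes back. The names post_json_status and is_success are invented for this example, and the URL and token in the usage lines are the same placeholders used above.

```shell
#!/bin/sh
# Sketch only: post_json_status and is_success are hypothetical helpers.

# post_json_status URL TOKEN JSON_PAYLOAD
# Prints only the HTTP status code of the response.
post_json_status() {
    # --output /dev/null discards the response body.
    # --write-out '%{http_code}' prints the numeric status code.
    # -d sends the payload and makes the request a POST.
    curl --silent --output /dev/null --write-out '%{http_code}' \
        -H "Content-Type: application/json" \
        -H "Authorization: Bearer $2" \
        -d "$3" \
        "$1"
}

# is_success CODE
# Succeeds (exit status 0) for any 2xx status code.
is_success() {
    case "$1" in
        2[0-9][0-9]) return 0 ;;
        *) return 1 ;;
    esac
}

# Usage (placeholder URL and token):
#   status=$(post_json_status "https://api.example.com/data" "YOUR_TOKEN" '{"key":"value"}')
#   is_success "$status" || echo "request failed with status $status" >&2
```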

What is Wget?

Now that we've covered cURL, let's look at the other half of the wget and curl equation: Wget. This free, open-source command line tool shines in headless environments: servers, cron jobs, shell scripts, you name it. It's ideal for situations where interaction isn't an option. The Wget command is built to download files over HTTP, HTTPS, or FTP.

Key Features of Wget

Here are the main features that define this command line tool:

- Recursive downloading: If you've ever needed to download files from an entire directory on an FTP server or mirror a full website for offline access, Wget saves the day. The wget command can download recursively, pulling linked pages, assets, and subfolders while preserving the site structure. This is something cURL can't do out of the box, so in the wget and curl debate, Wget takes the win.

- Robustness: When you're running cron jobs or downloading large datasets over a shaky connection, you need a tool that doesn't give up. Wget retries failed transfers automatically (20 attempts by default), and with -c it can pick up where it left off if the transfer was interrupted.

- Proxy support: If you're working behind a corporate firewall or network that restricts direct internet access, Wget can download files through HTTP and HTTPS proxies with just a quick config tweak. You can even set it up in your environment variables, making it a good fit for automation.

- Timestamping: Wget's timestamping is perfect when you need to sync with a remote server but don't want to download files you already have. It reads modification dates and skips what hasn't changed, saving time and system load.
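The robustness and timestamping features map onto a handful of flags. The sketch below shows one way they might be bundled for a cron job; the helper names resilient_get and sync_if_newer are made up, and the retry count, wait, and timeout values are arbitrary assumptions you would tune for your own connection.

```shell
#!/bin/sh
# Sketch only: helper names and numeric values are illustrative assumptions.

# resilient_get URL
# Download with retries and resume support for flaky connections.
resilient_get() {
    # --continue resumes a partially downloaded file instead of restarting.
    # --tries caps the number of attempts.
    # --waitretry waits between retries, backing off up to 5 seconds.
    # --timeout gives up on a stalled connection after 30 seconds.
    wget --continue --tries=10 --waitretry=5 --timeout=30 "$1"
}

# sync_if_newer URL
# Re-download only when the remote copy is newer than the local one.
sync_if_newer() {
    wget --timestamping "$1"
}

# Usage:
#   resilient_get https://example.com/file.zip
#   sync_if_newer https://example.com/file.zip
```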

Wget Use Cases

Now let's get to the fun stuff, how you actually use wget. We'll walk through a few everyday situations where this command line tool really delivers.

Downloading a file to the current directory

Want to download a file and drop it straight into your current working directory? Use the following command:

wget https://example.com/file.zip

Once you hit enter, the file drops into your session's current folder, no questions asked.

Downloading and saving with a custom filename

If you need the file but hate the name it comes with, you can tell the wget command exactly what to call the file by adding -O (an uppercase letter O, not a zero) followed by your preferred file name, like so:

wget -O report-latest.pdf https://example.com/data.pdf

Downloading files recursively

If you're scraping and need everything (pages, images, and other assets), this one-liner will save your life:

wget -r https://example.com/docs/

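Wget will follow the links it finds, up to five levels deep by default, and download the files it reaches on that host. Add --no-parent if you want it to stay inside /docs/ and never climb to the parent directory.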
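A bare -r can pull in more than you expect, so in practice you usually add a few limits. Here is a hedged sketch of a more controlled recursive download. The helper name mirror_path, the depth of 3, and the one-second wait are assumptions chosen for illustration, not recommended values.

```shell
#!/bin/sh
# Sketch only: mirror_path is a hypothetical helper; depth and wait are
# arbitrary example values.

# mirror_path URL DEST_DIR
mirror_path() {
    # --recursive        follow links starting from URL
    # --no-parent        never ascend above the starting directory
    # --level=3          stop after three levels of links
    # --page-requisites  also fetch images, CSS, and other page assets
    # --convert-links    rewrite links so the local copy works offline
    # --wait=1           pause one second between requests
    # --directory-prefix save everything under DEST_DIR
    wget --recursive --no-parent --level=3 \
        --page-requisites --convert-links \
        --wait=1 \
        --directory-prefix="$2" \
        "$1"
}

# Usage:
#   mirror_path https://example.com/docs/ ./docs-mirror
```

The wait between requests is there to be polite to the server; dropping it makes the download faster but also more likely to get you rate limited.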

Downloading using a proxy

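Here's how you can make wget play ball with a proxy:

wget -e use_proxy=yes -e http_proxy=http://yourproxy:port -e https_proxy=http://yourproxy:port https://example.com/data.pdf

This command tells Wget to route the download through the proxy server. Because the target URL is HTTPS, https_proxy is the setting that applies here; http_proxy covers plain HTTP URLs. Just plug in your proxy details and let the command line tool do the rest.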
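Wget also reads the http_proxy and https_proxy environment variables, which is handy when several commands in a script should share one proxy. A minimal sketch, assuming a hypothetical helper named wget_via_proxy and a placeholder proxy address:

```shell
#!/bin/sh
# Sketch only: wget_via_proxy is a hypothetical helper, and the proxy
# address in the usage line is a placeholder.

# wget_via_proxy PROXY_URL [WGET_ARGS...]
wget_via_proxy() {
    proxy=$1
    shift
    # The subshell keeps the exported variables from leaking into the
    # rest of the script.
    (
        export http_proxy="$proxy" https_proxy="$proxy"
        wget "$@"
    )
}

# Usage:
#   wget_via_proxy "http://yourproxy:8080" -O report-latest.pdf https://example.com/data.pdf
```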

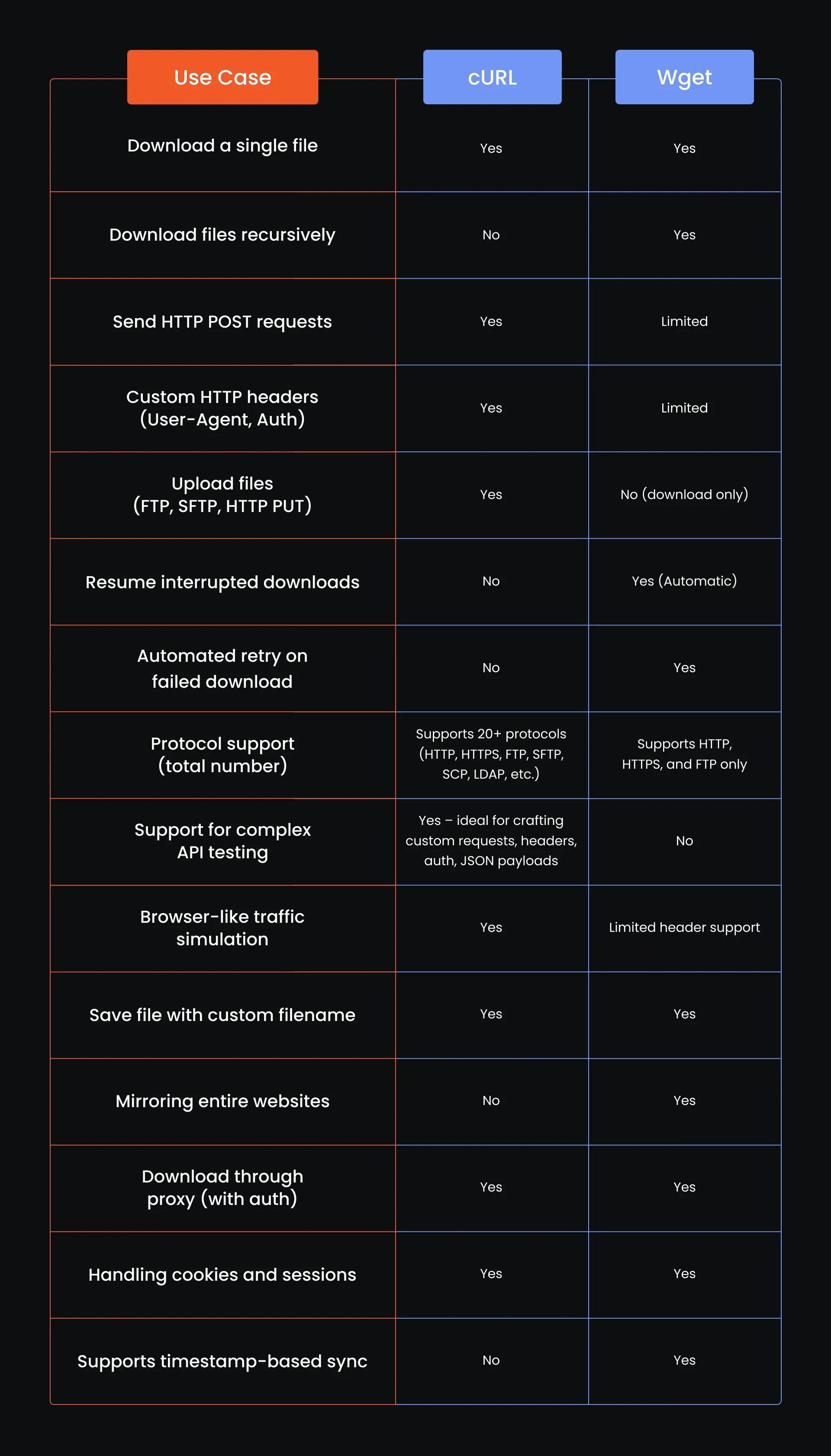

cURL vs Wget: Key Differences

cURL vs Wget: Which Is Faster?

Looking at wget and curl from a task-based perspective, each command line tool brings different strengths to the table. Wget is excellent for situations where you want to download files recursively or resume broken transfers without extra scripting. That's why it's the obvious choice for cron jobs, shell scripts, or mirroring entire websites.

Meanwhile, cURL pulls ahead in scenarios that demand flexibility. When you're dealing with token-based authentication, custom headers, or need to simulate browser-like traffic with precise control over HTTP requests, the cURL command does the heavy lifting. While it may not retry failed downloads out of the box like Wget, it gives you fine-tuned control for more complex command line workflows.
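cURL can get close to Wget's retry-and-resume behavior, you just have to ask for it explicitly. The sketch below shows one possible combination of flags; the helper name curl_resilient and the retry values are assumptions for illustration. Keep in mind that cURL only retries errors it considers transient, such as timeouts.

```shell
#!/bin/sh
# Sketch only: curl_resilient is a hypothetical helper; retry count and
# delay are arbitrary example values.

# curl_resilient URL
curl_resilient() {
    # --fail           exit non-zero on HTTP 4xx/5xx responses
    # --location       follow redirects
    # --retry 5        retry up to five times on transient errors
    # --retry-delay 2  wait two seconds between retries
    # --continue-at -  resume from the size of the existing local file
    # --remote-name    save under the file name from the URL
    curl --fail --location \
        --retry 5 --retry-delay 2 \
        --continue-at - \
        --remote-name \
        "$1"
}

# Usage:
#   curl_resilient https://example.com/file.zip
```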

What Are Some Alternatives to cURL and Wget?

So you've seen what cURL and Wget can do, but those are not the only players in the field. You have options, and each one shines in its own way depending on what you are trying to do:

- Postman: For API testing with a graphical interface. It lets you send all types of HTTP requests, tweak headers, and see cookie/session data in a visual layout.

- HTTPie: Think of it as a more human-friendly alternative to the cURL command. It formats JSON output and supports RESTful API workflows right out of the box.

- Aria2: This tool goes beyond what the wget command can do. It supports multi-source downloads, metalinks, and even BitTorrent.

- PowerShell (Invoke-WebRequest / Invoke-RestMethod): Built for Windows environments and ideal for quick scripting, automation, or fetching data via HTTP requests.

- Python + requests: Great for those who want to move beyond command line tools. It is ideal for automating HTTP requests, managing cookies, and creating workflows that scale.

Conclusion

Whether you are a DevOps engineer, data scraper, or just getting your hands dirty with some command line magic, understanding the differences between cURL and wget gives you a huge leg up. Both tools are deceptively simple, but packed with power once you know how to wield them. And hey, if neither feels perfect today, you've got a whole toolkit of options ready to go. Script smart, test often, and may your curl and wget commands always return 200.

Is it better to use curl or wget?

Use wget when you want to download files recursively, mirror websites or automate simple fetches. Go with cURL when you need to interact with APIs, send custom headers, or handle more complex HTTP requests.

Can curl replace wget?

In some situations, yes, cURL can replace wget, especially if you are just downloading files. But for tasks like recursive downloads, wget still has an edge.

What is the use of wget and curl?

Wget is mostly used to download files or even entire websites from the internet, often without any interaction. cURL is more flexible: it can download, upload, send headers, and work with APIs.

Where does curl download by default?

If you run a curl command without telling it where to save, it will just print the response to your terminal window. Use the -O flag (uppercase) to save it with the original name, or -o filename to name it yourself.