Getting data from a website to Excel can be either the simplest thing you’ll do today, or the start of a long battle with dynamic content, session blocks, and bot traps.

If you’re pulling public data from something like Wikipedia, Excel’s built-in tools might be all you need. But try to extract product prices from a site like Best Buy or scrape data from LinkedIn, and you’ll see just how quickly things fall apart.

This guide shows you different methods to scrape website data to Excel automatically. We’ll cover everything from using Excel web queries and Power Query to scaling your scraper with browser-based tools and rotating proxies.

Method 1: Using Excel’s built-in tools

The first method uses something most people already have: Microsoft Excel. But let’s be clear: this isn’t true web scraping. You’re not simulating a browser or defeating bot checks. You’re just asking nicely for data from websites that are okay with giving it.

Excel web queries and Power Query send a polite request, and if the site returns a clean HTML table, Excel pulls it in. Sometimes that works great. Other times, the site responds with a JavaScript shell, and Excel has no idea where the data is.

So yes, this method can be a quick win, but only if the site is built for cooperation. Anything dynamic or protected will break the flow.

Power Query (Get & Transform)

The first built-in tool worth using is Power Query, also known as Get & Transform. Under the hood, it’s simple: Excel sends a basic GET request to the page, the site returns raw HTML, and Power Query tries to pull out anything that looks like a <table>.

If the site plays nice, you can scrape data into a clean Excel sheet and set it to refresh automatically later. But if the data is buried inside JavaScript, Excel won’t see it.

This works when:

- You’re working with simple HTML tables

- You want to automate updates inside the spreadsheet

- You need to filter, rename, or reshape data before it hits the sheet

- You’re not writing code and don’t want to leave Microsoft Excel
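To make the mechanics concrete, here is roughly what that GET-and-find-tables approach looks like in Python, using only the standard library. It's a simplified sketch, not how Excel implements it: `fetch_html`, `TableExtractor`, and `extract_tables` are illustrative names, and the parser ignores `rowspan`/`colspan`, nested tables, and markup that omits closing `</td>` or `</tr>` tags.

```python
from html.parser import HTMLParser
from urllib.request import Request, urlopen


def fetch_html(url: str) -> str:
    """Send one plain GET request and return the raw HTML (no JavaScript runs)."""
    req = Request(url, headers={"User-Agent": "Mozilla/5.0"})
    with urlopen(req, timeout=15) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")


class TableExtractor(HTMLParser):
    """Collect every <table> in the markup as a list of rows of cell text."""

    def __init__(self):
        super().__init__()
        self.tables = []   # each table is a list of rows; each row is a list of cell strings
        self._row = None   # cells of the row currently being read
        self._cell = None  # text fragments of the cell currently being read

    def handle_starttag(self, tag, attrs):
        if tag == "table":
            self.tables.append([])
        elif tag == "tr" and self.tables:
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            # Collapse runs of whitespace inside the cell
            self._row.append(" ".join("".join(self._cell).split()))
            self._cell = None
        elif tag == "tr" and self._row is not None:
            if self._row:
                self.tables[-1].append(self._row)
            self._row = None

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)


def extract_tables(html: str) -> list:
    parser = TableExtractor()
    parser.feed(html)
    return parser.tables
```

`extract_tables(fetch_html(url))` returns every table found in the raw markup. If it comes back empty for a page that clearly shows a table in your browser, the table is probably built by JavaScript after the page loads, which is exactly when Excel's importers tend to struggle.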

Example: Extracting top 250 movies from IMDb

IMDb’s Top 250 Movies list is a great test case. The data is static, the page layout is simple, and Microsoft Excel’s Power Query can pull it in without code. You’ll need Excel 2016 or later on Windows. Power Query for Mac still lacks full support for web scraping.

Let’s walk through extracting the data from IMDb into your spreadsheet.

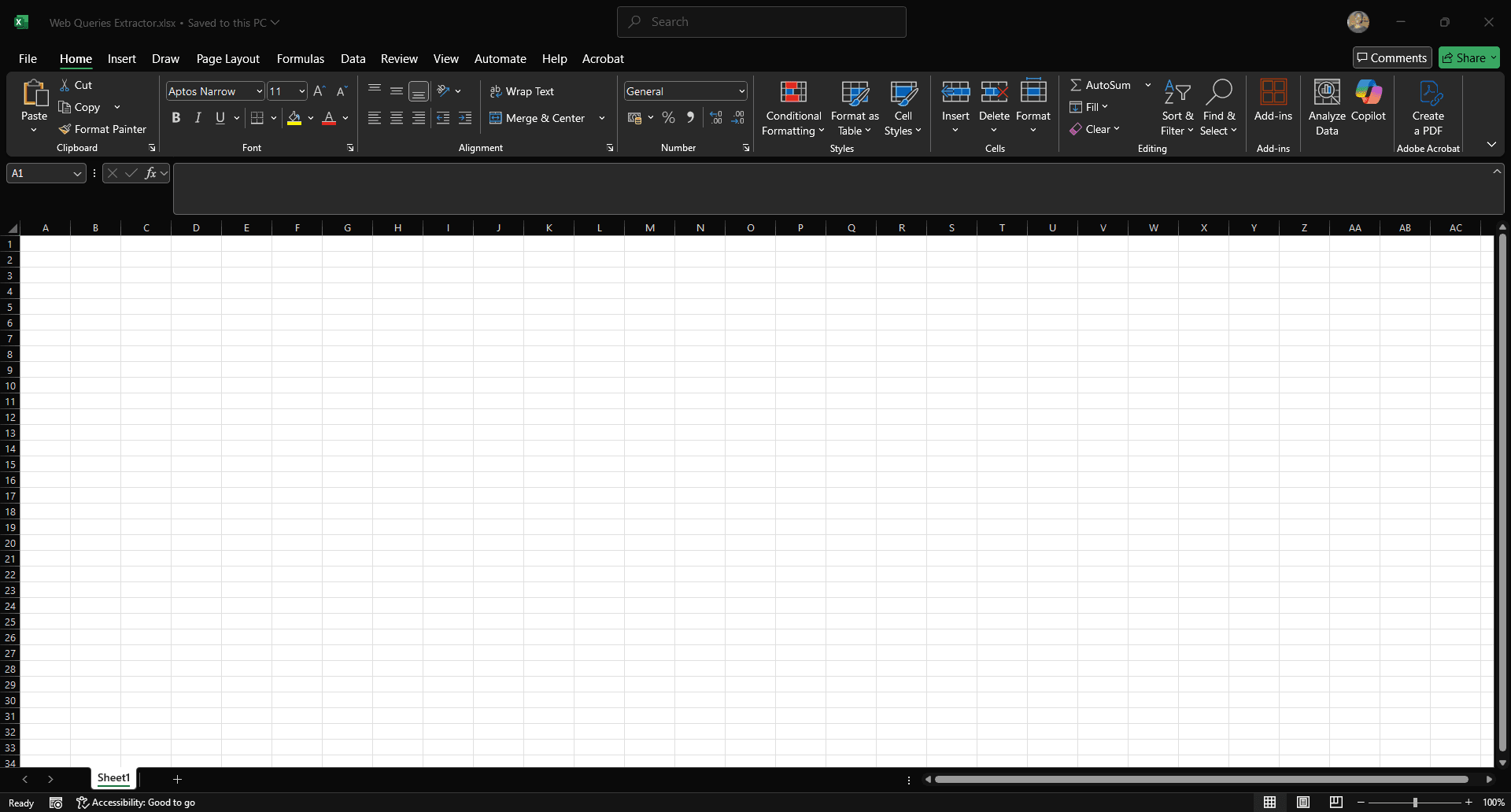

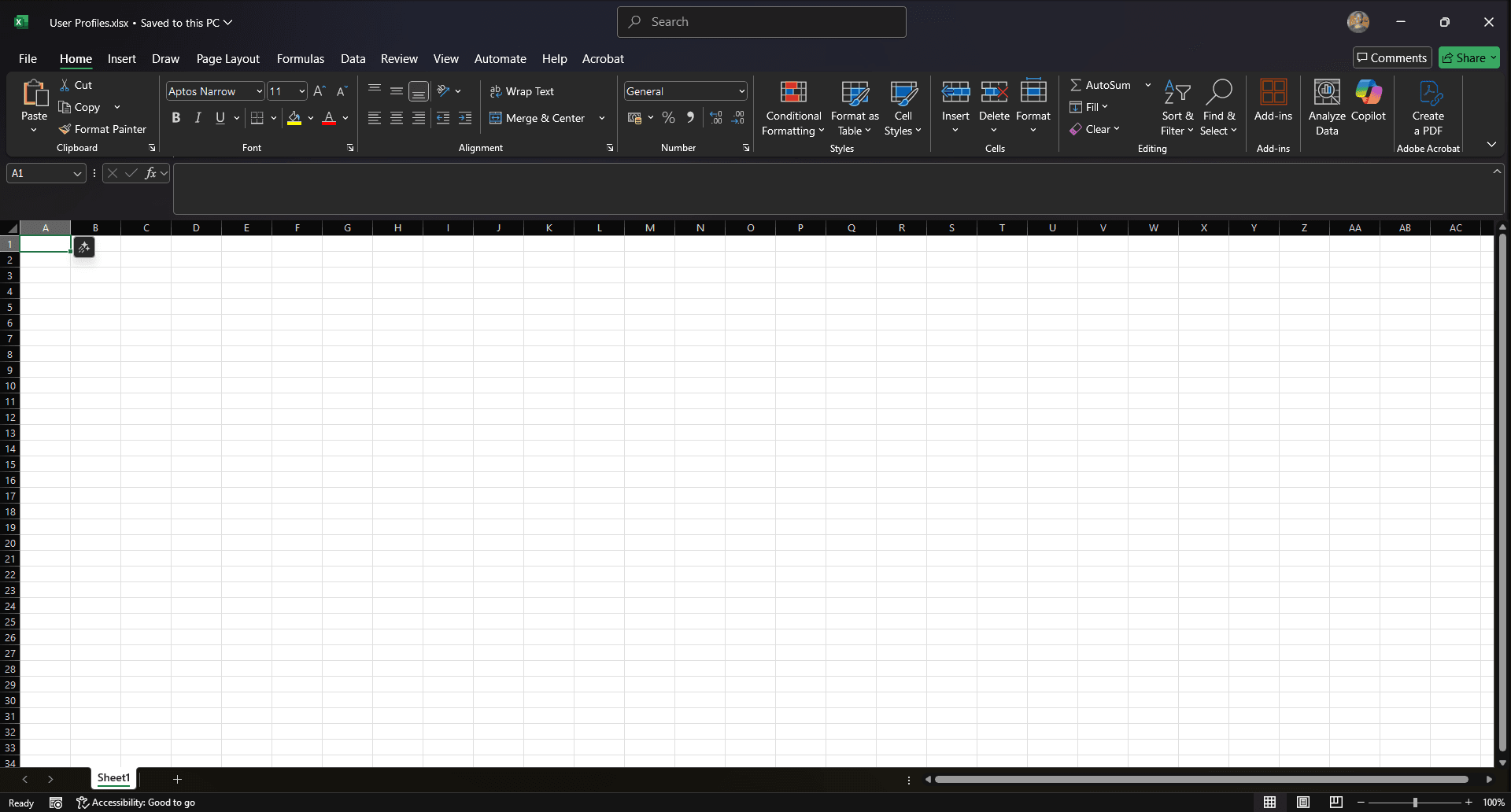

Step 1: Open Excel and start a new workbook

Launch Excel, create a new workbook, and give it any name you prefer.

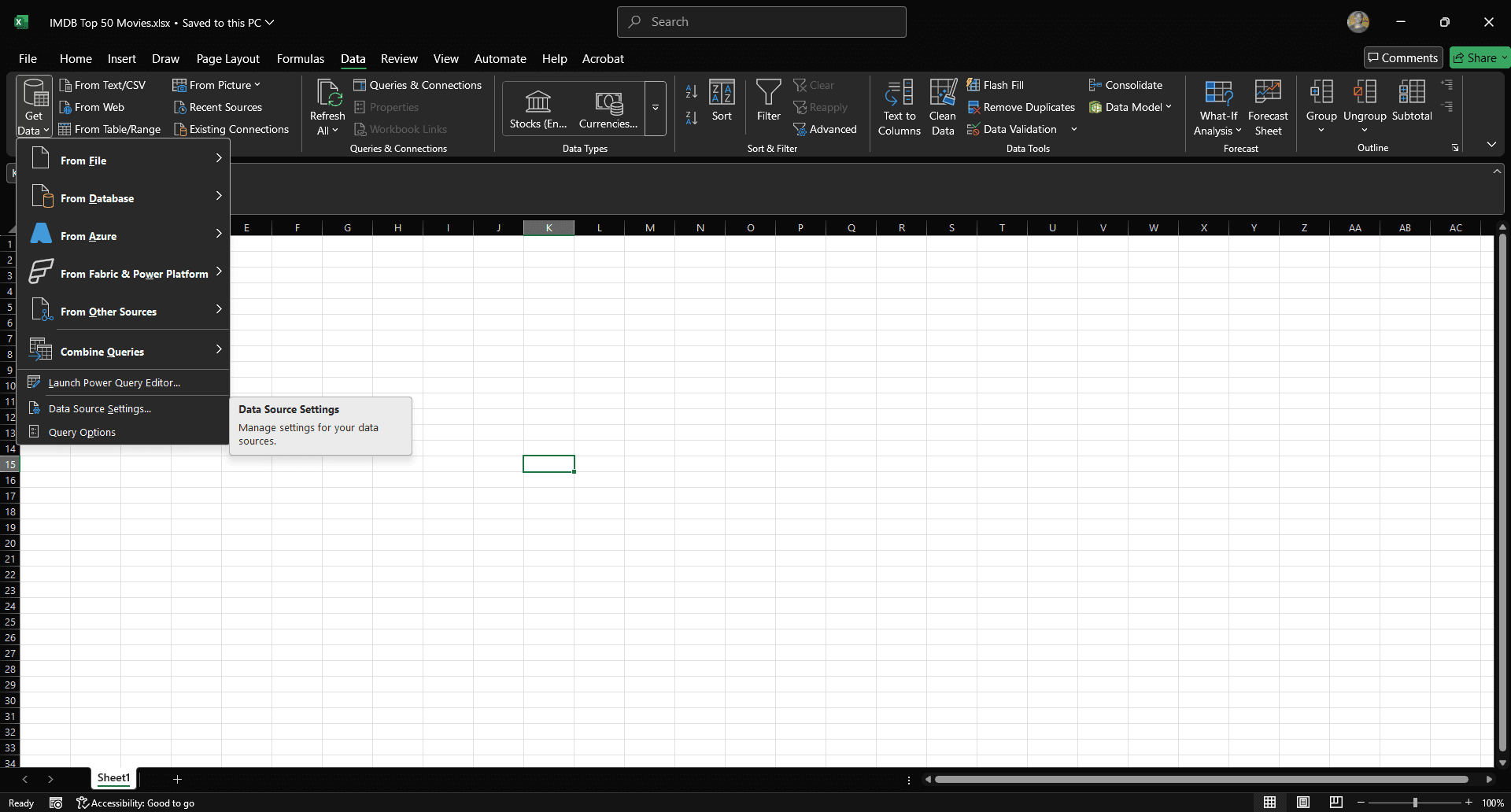

Step 2: Go to the Data tab

In the top ribbon, select the 'Data' tab.

Step 3: Open the 'Get Data' menu

Click 'Get Data' to open its dropdown menu.

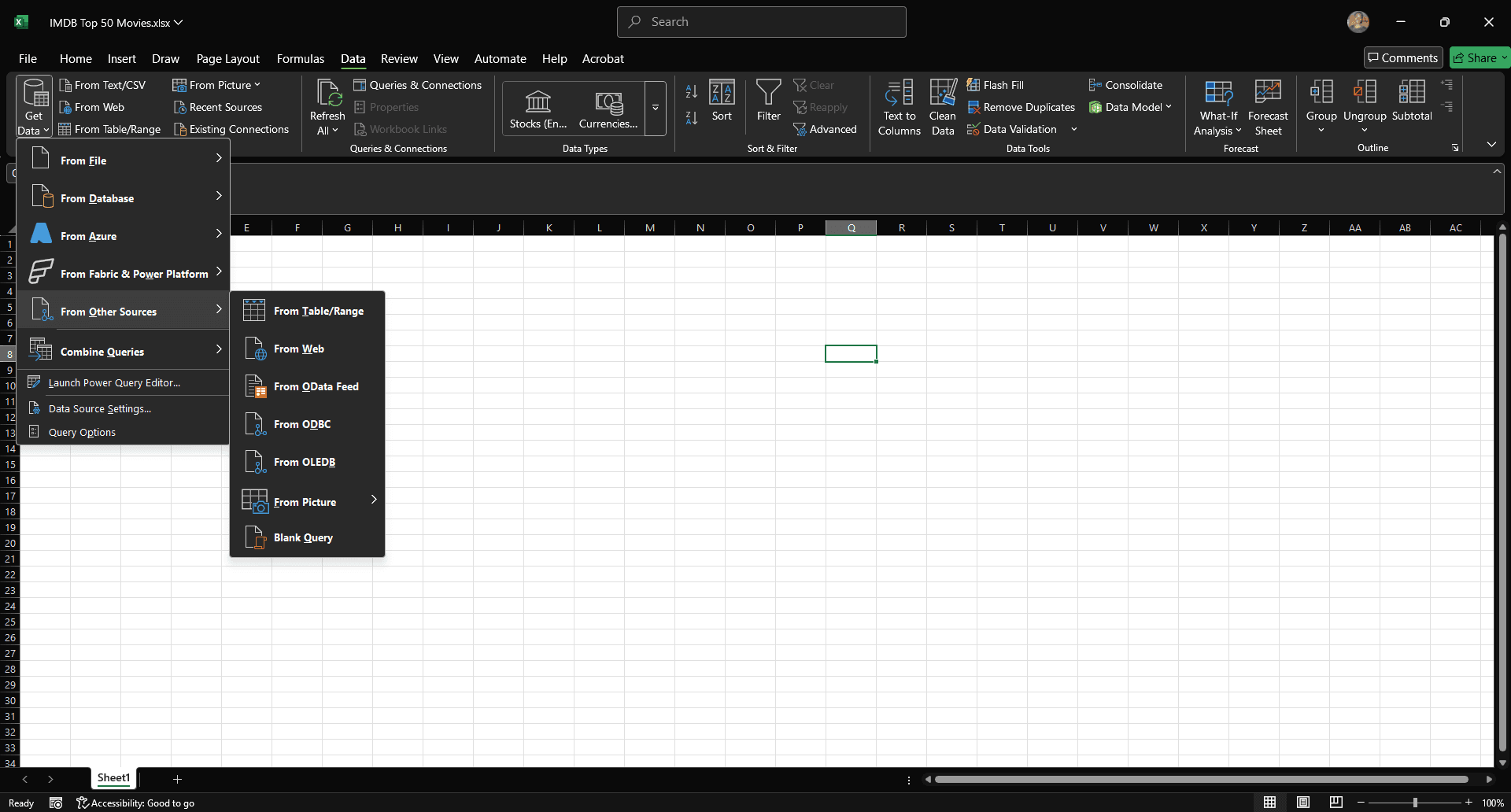

Step 4: Choose 'From Web' source

Select 'From Other Sources,' then choose 'From Web' from the dropdown.

Step 5: Enter the IMDb URL

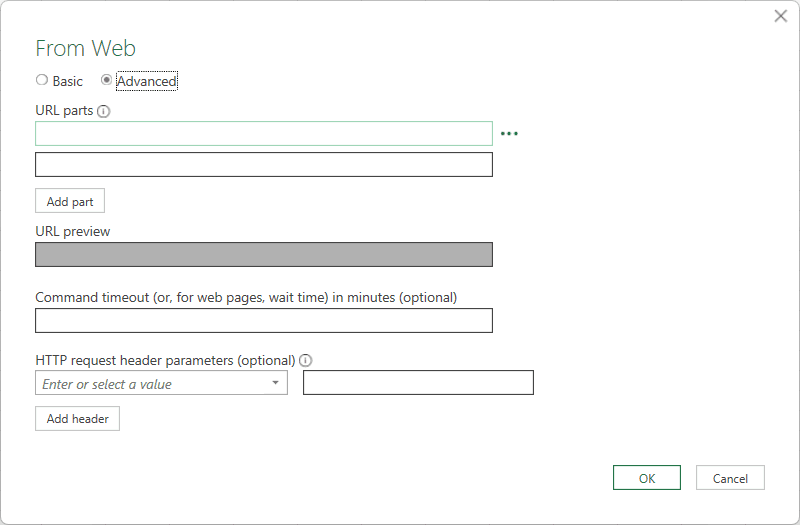

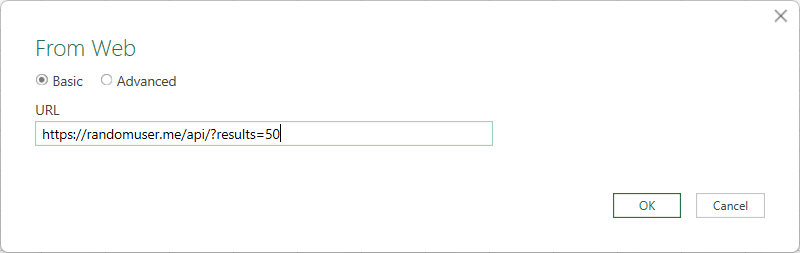

You’ll see a new menu with 'Basic' and 'Advanced' as your options.

'Basic' is for when you want to scrape from a single URL. 'Advanced' lets you build that URL from multiple parts and add HTTP request headers, which is useful when working with parameterized links.

But here's the catch: Excel doesn’t fetch multiple full pages from different URLs at once. So even with 'Advanced,' you're still limited unless you build logic to loop or merge those sources manually. That’s where people often hit a wall.

For a cleaner setup, select 'Basic,' paste in your IMDb URL, and press 'OK.'

Step 6: Analyze the Navigator pane

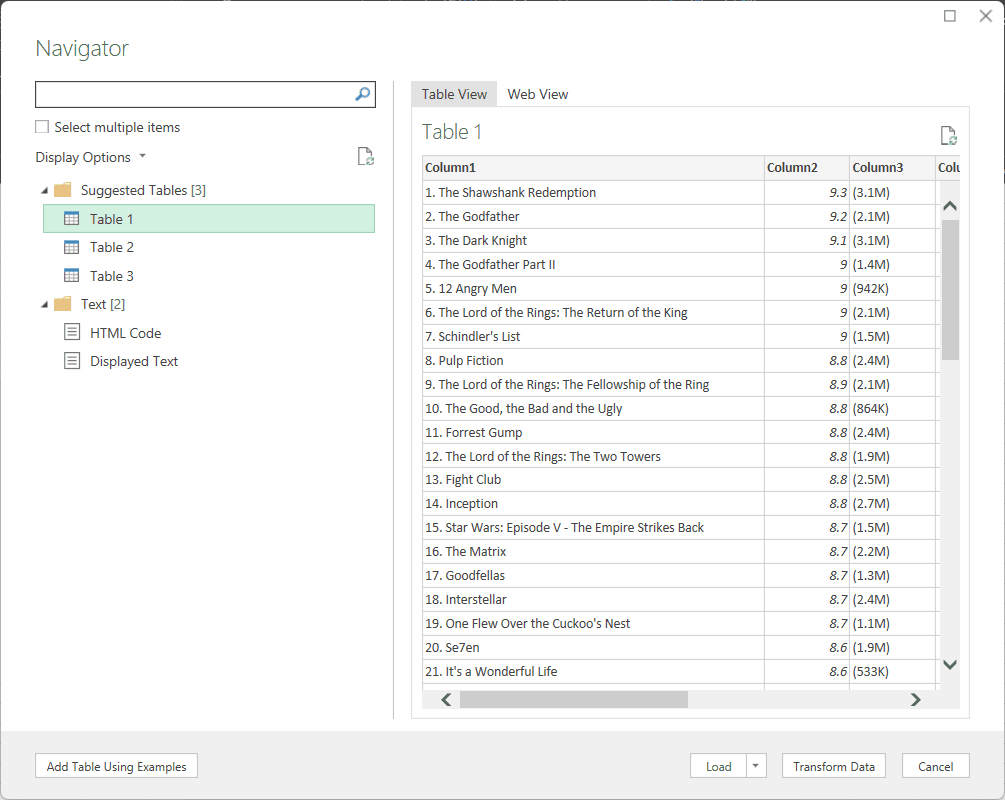

Once you hit 'OK' in the Power Query web importer, Excel tries to make sense of the page. What you’ll see next is the Navigator pane. This is where Excel previews all the data tables it thinks are worth extracting.

Step 7: Click Table 1

On the left, it lists all the HTML tables it could detect from the site. The right side is a live preview of whatever you click. Start with Table 1.

Once Power Query loads the tables, you’ll see IMDb’s Top 250 movies, clean and structured. On the bottom right, Excel gives you three buttons: 'Load,' 'Transform Data,' and 'Cancel.' Don’t hit 'Cancel' unless you’re giving up (you’re not).

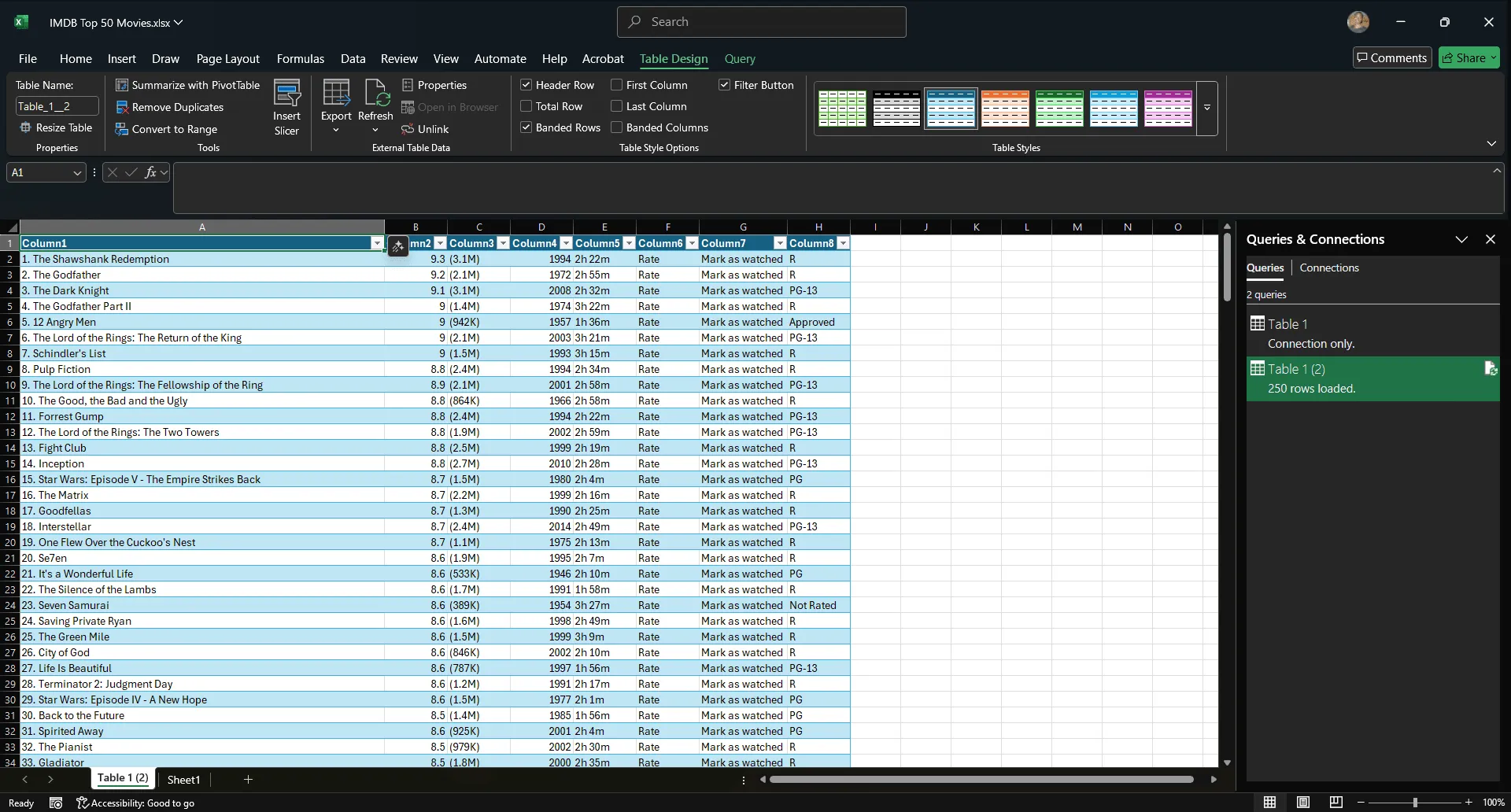

Step 8: Load or transform the data

If the table looks good, click 'Load' and drop it straight into your Excel spreadsheet. But if you want to filter rows, remove extra columns, rename headers, or split out values, 'Transform Data' is the smarter path. It opens the Power Query Editor, which lets you reshape the data before it lands in your sheet.

Since the data came through clean, there’s no reason to overthink it. Click 'Load,' and the list drops into your Excel sheet as-is.

That's it!
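Step 5 noted that 'Advanced' only builds a single URL from several parts, and that fetching and merging several pages is left to you. Outside Excel, that logic takes a few lines of Python. This is an illustrative sketch only: the example.com address and the `page` and `sort` parameters are hypothetical, and `merge_tables` assumes every page repeats the same header row.

```python
from urllib.parse import urlencode


def build_url(base: str, **params) -> str:
    """Join a base URL and query parameters, percent-encoding the values."""
    if not params:
        return base
    separator = "&" if "?" in base else "?"
    return base + separator + urlencode(params)


def build_page_urls(base: str, pages, **fixed_params) -> list:
    """One URL per page number, e.g. base?page=1, base?page=2."""
    return [build_url(base, page=p, **fixed_params) for p in pages]


def merge_tables(tables_by_url: dict) -> list:
    """Stack tables fetched from several URLs into one, tagging each row with its source.

    Assumes every table starts with the same header row; later headers are dropped.
    """
    merged = []
    for url, rows in tables_by_url.items():
        if not rows:
            continue  # skip pages that returned nothing
        header, body = rows[0], rows[1:]
        if not merged:
            merged.append(header + ["source_url"])
        merged.extend(row + [url] for row in body)
    return merged
```

In practice you'd fetch each URL from `build_page_urls` with whatever table parser you use, collect the results in a dict keyed by URL, and pass that dict to `merge_tables`.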

Web queries (legacy feature)

If you’ve been around long enough, you remember web queries. This was Excel’s earliest attempt at data extraction from websites. It was popular in the early 2000s, back when most websites still used clean HTML tables and a polite GET request could pull the goods.

Back then, there was no JavaScript to break things and no bot protection to fight. You could just point Excel to a page and scrape data straight into your spreadsheet. It was simple and it worked, until the web changed.

To use it today, you’ll need to enable the legacy import feature inside Microsoft Excel’s settings.

Step 1: Create a new workbook

Follow the same steps we used earlier: open Excel, create a new workbook, and give it any name you want.

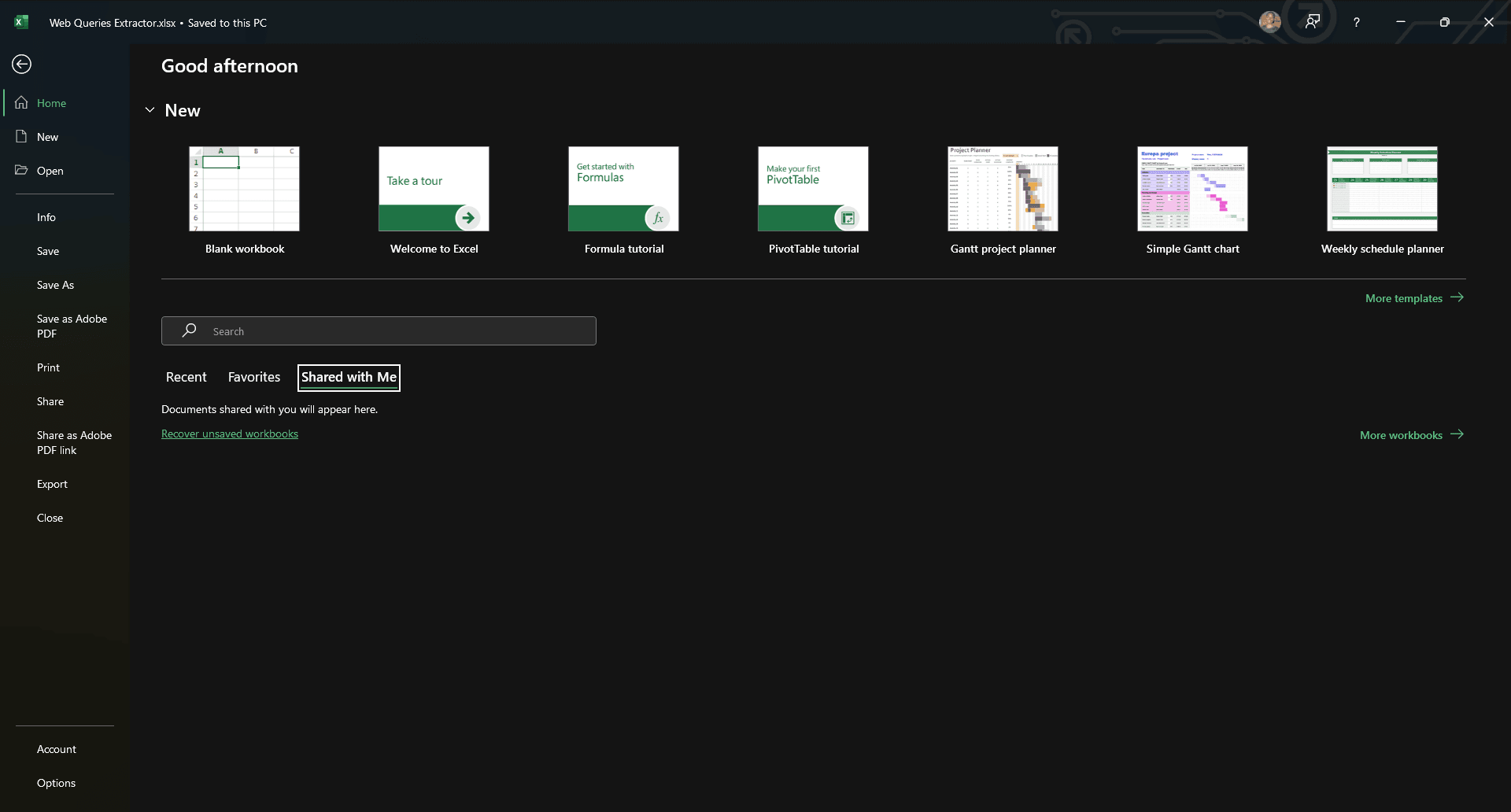

Step 2: Open the file menu

Click 'File' in the top-left corner of the ribbon.

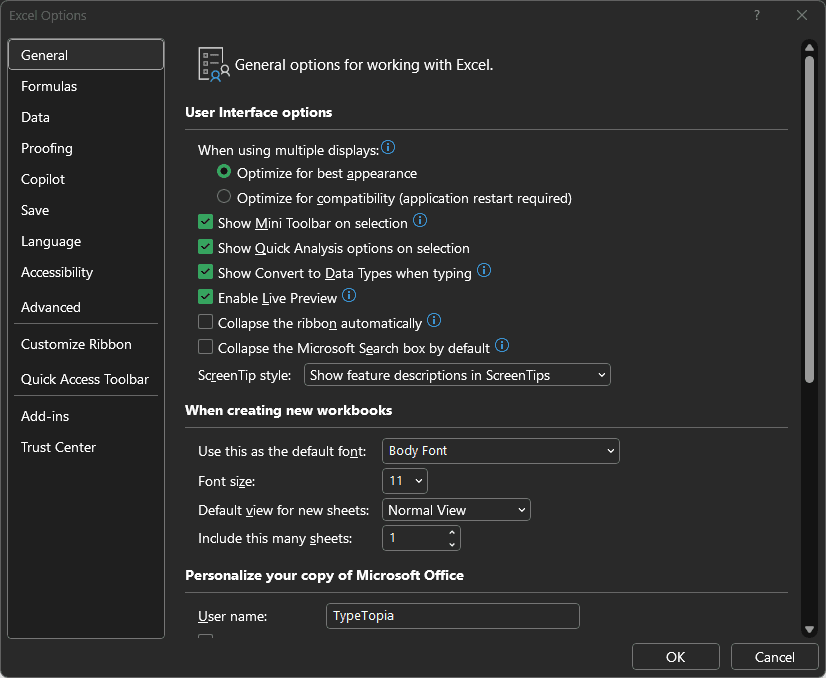

Step 3: Open Excel options

At the bottom of the left-hand menu, click 'Options.' This will open the Excel Options window.

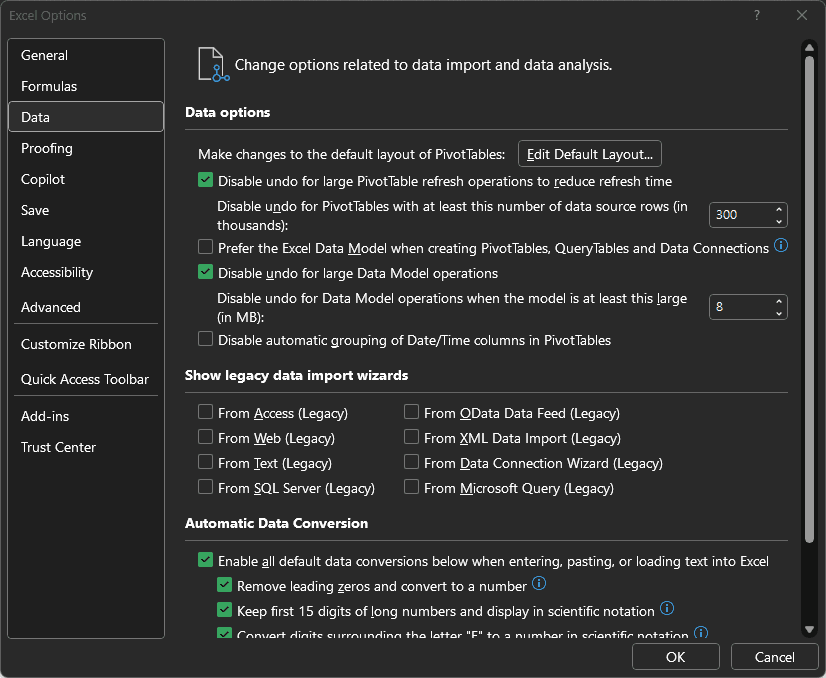

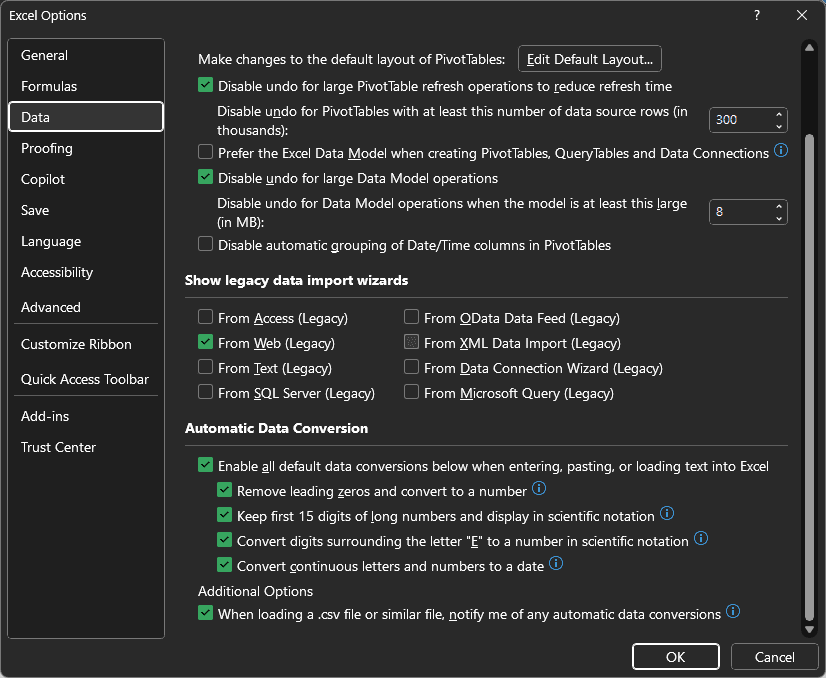

Step 4: Access data settings

In the left sidebar of the new window, select 'Data' to open Excel’s Data options.

Step 5: Enable legacy web import

Under 'Show legacy data import wizards,' check the box for 'From Web (Legacy).'

Step 6: Close data options

Click 'OK' to save the setting and close the Excel Options window.

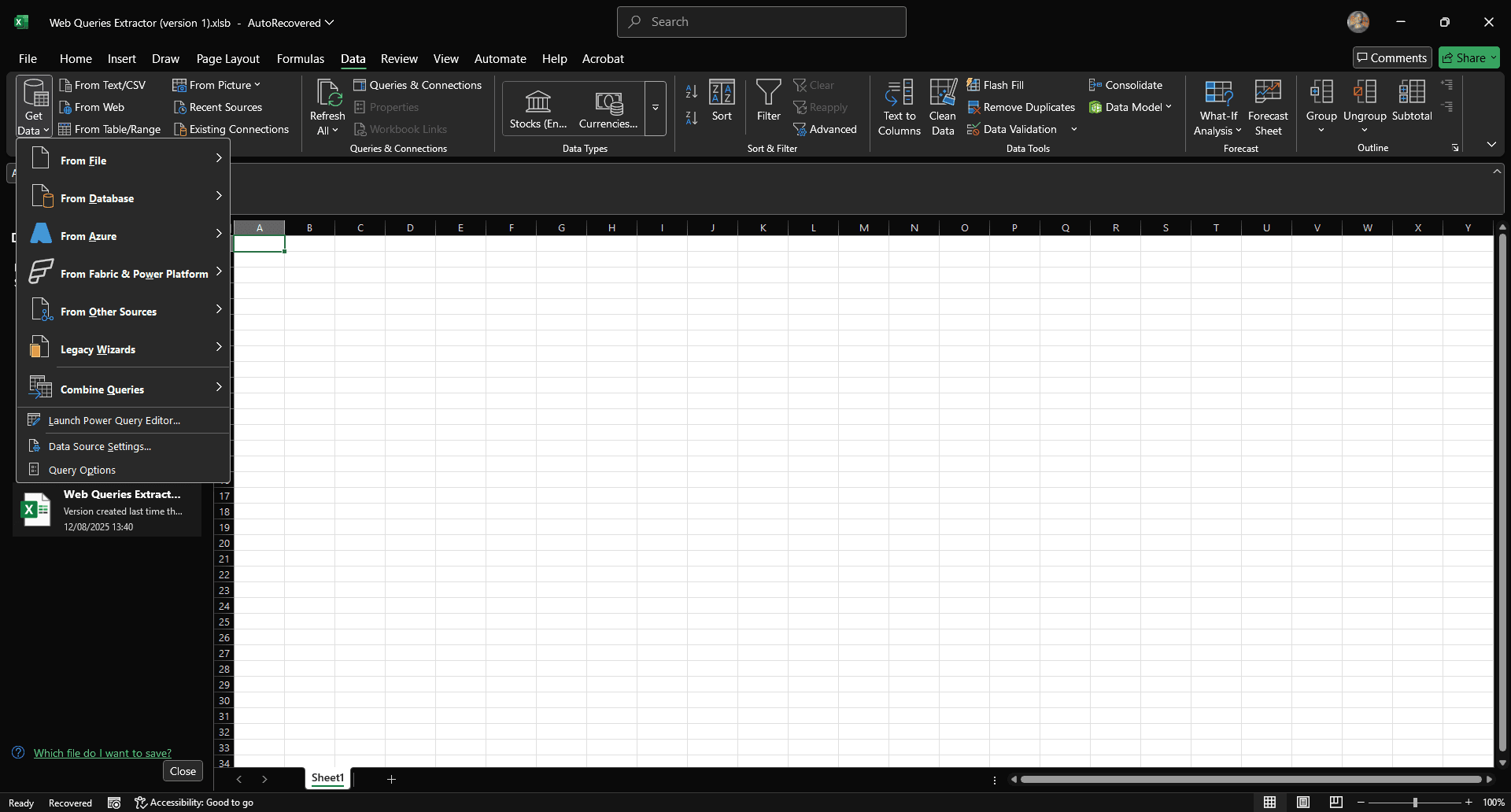

Step 7: Access legacy wizards menu

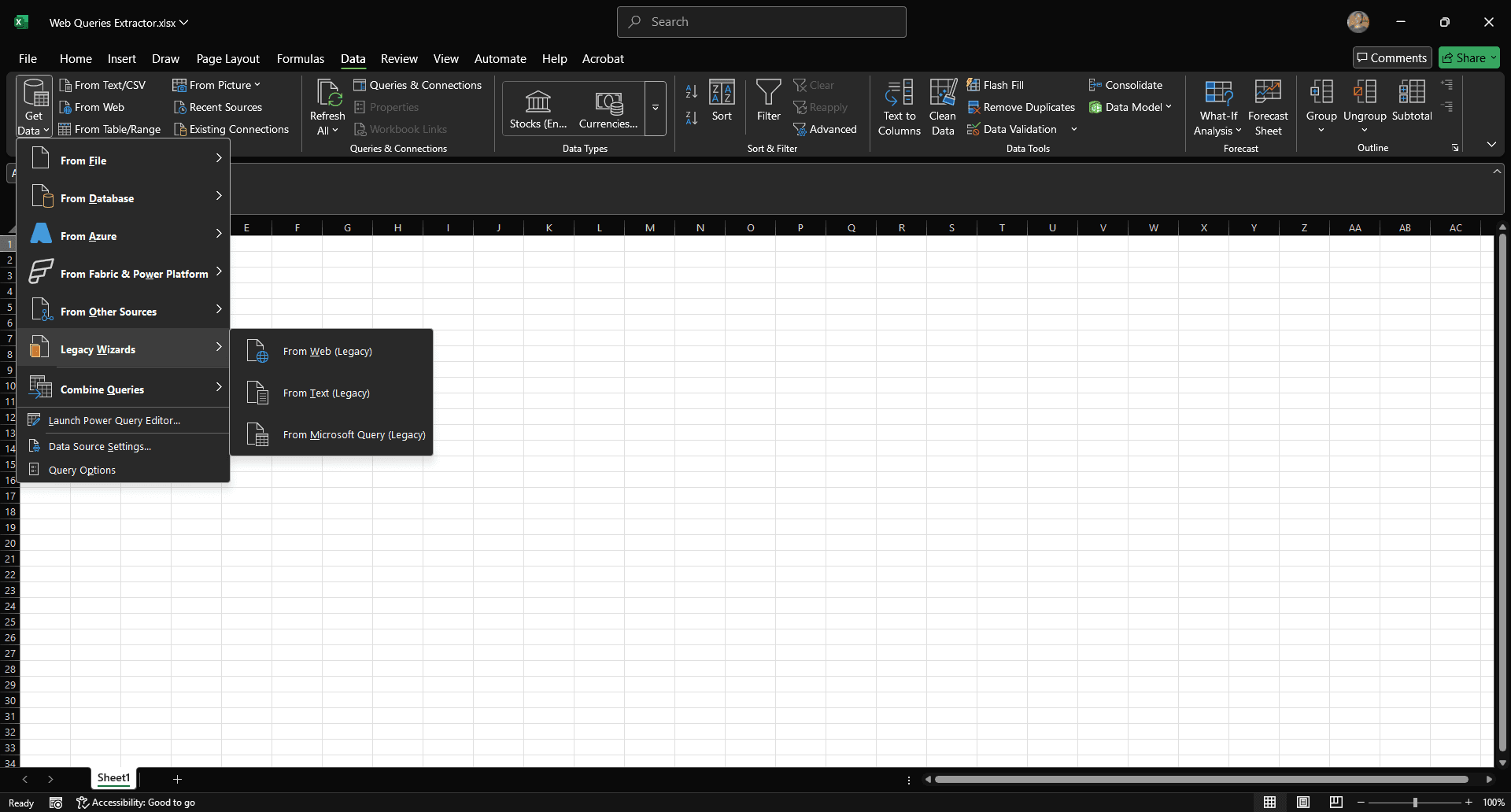

Follow the same steps we used for Power Query: go to 'Data' and then 'Get Data.' You’ll now see a new option labeled 'Legacy Wizards.'

Step 8: Select 'From Web (Legacy)'

Hover over 'Legacy Wizards,' then click 'From Web (Legacy)' from the menu on the right.

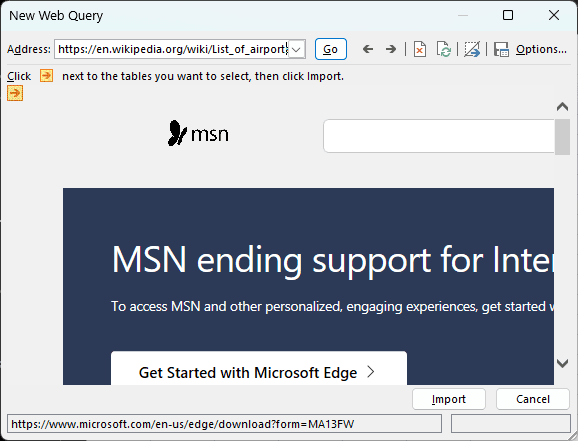

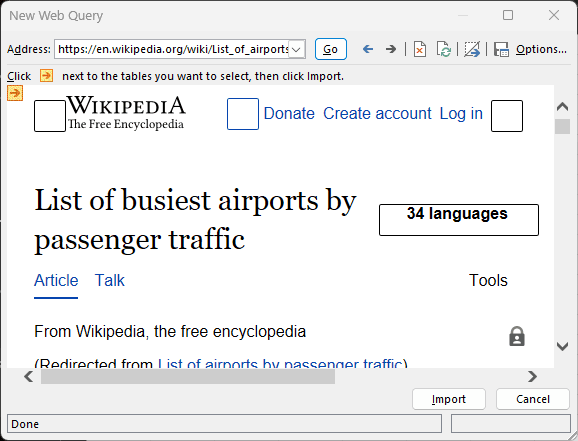

Step 9: Paste the URL and test the page

The trick here is knowing your target, because this Excel web query method can only scrape pages that deliver everything in plain HTML.

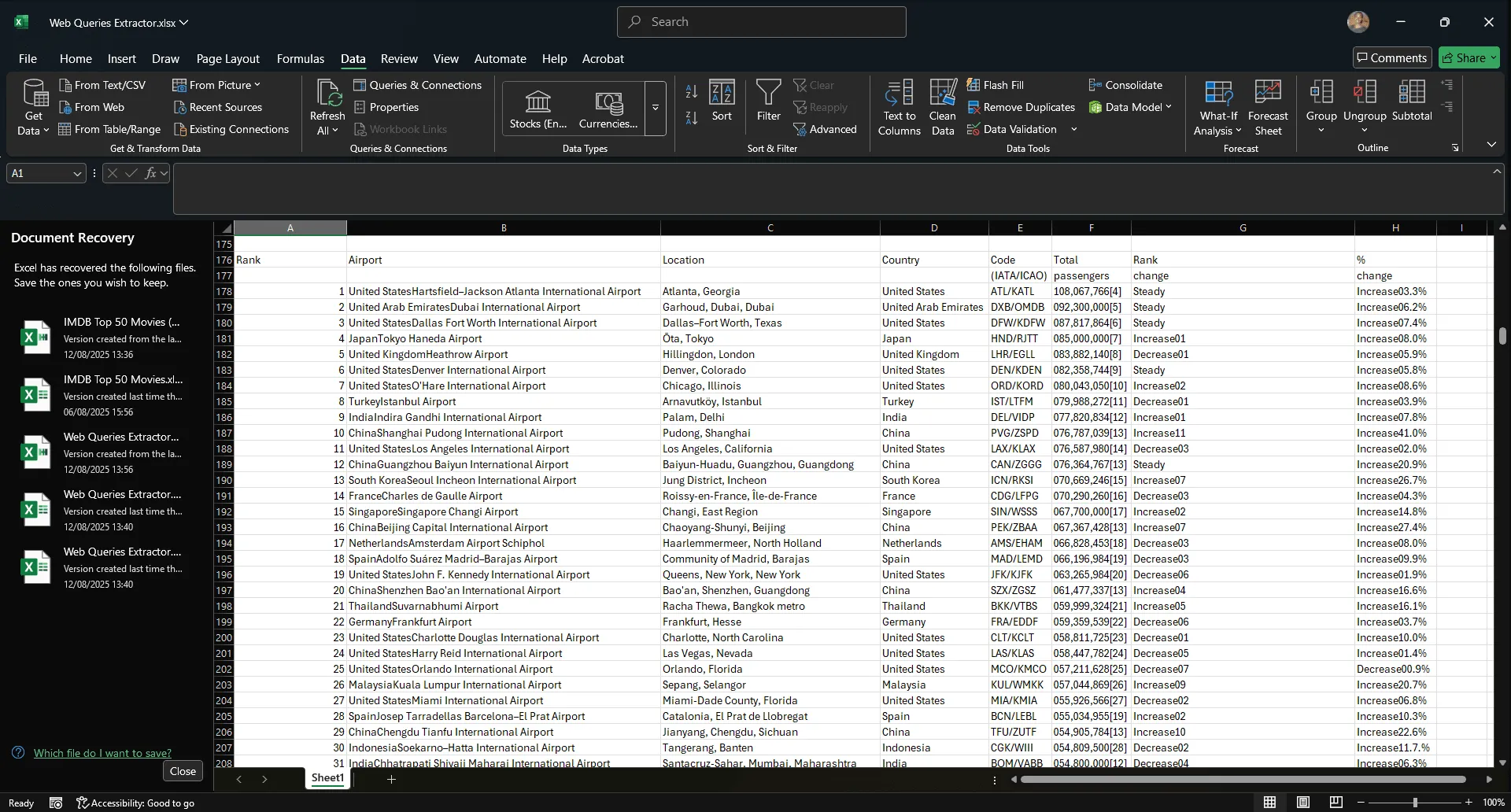

Our IMDb example won’t work here, so let’s use Wikipedia’s 'List of busiest airports by passenger traffic' page instead. Its table sits right in the markup, so Excel’s legacy web query can grab it cleanly. Try this on a dynamic site and you’ll most likely get errors or an empty import.

Step 10: Run the web query

Click 'Go' to run the web query. Excel will navigate to the web page and display a preview.

Step 11: Open import options

Click 'Import' to open the import options dialog.

Step 12: Import data to worksheet

Choose 'Existing worksheet' and click 'OK.' Excel will populate the sheet with the extracted data.

That's it!
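Step 9's advice about knowing your target can be checked before you open Excel: fetch the raw HTML (what a web query receives, before any JavaScript runs) and see whether a value you can read in the browser is actually in it. A minimal standard-library sketch; `looks_server_rendered` is a hypothetical helper, and the check is a heuristic rather than a guarantee.

```python
import html
from urllib.request import Request, urlopen


def fetch_raw_html(url: str) -> str:
    """Download the page source exactly as the server sends it; no JavaScript runs."""
    req = Request(url, headers={"User-Agent": "Mozilla/5.0"})
    with urlopen(req, timeout=15) as resp:
        return resp.read().decode("utf-8", errors="replace")


def looks_server_rendered(raw_html: str, sample_value: str) -> bool:
    """Heuristic: the page ships a <table> and a value visible in the browser is in the raw markup."""
    has_table = "<table" in raw_html.lower()
    # Unescape entities such as &amp; so the markup matches the text you see on screen
    return has_table and sample_value in html.unescape(raw_html)
```

Pass a value you can read on the rendered page, such as an airport name from the Wikipedia table. A `False` result on a page that visibly shows the data usually means it is injected by JavaScript, so a web query will come up empty.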

Method 2: No-code web scraping tools

You can scrape data from a website to Excel using the built-in tools above and even automate it with refreshable queries. But for dynamic content, login pages, or JavaScript-rendered tables, these tools will break down.

You can’t scrape data from LinkedIn, Best Buy, or Amazon with a polite request and a spreadsheet. If you're not building your own web scraper, the next best move is to reach for a no-code web scraping tool.

This kind of software does the heavy lifting for you. It sends HTTP requests, parses the HTML or DOM, navigates the page, targets the data you care about, and formats it for export.

Some export to CSV. Others send data straight into Excel. Underneath it all, it’s still a scraper, so if you want consistent automation, choose a tool that fits your source, your target data, and your workload.
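Whatever tool does the scraping, the final hop into Excel is often just a well-formed CSV file. A minimal Python sketch of that export step; the column names and file name are hypothetical. Writing with the `utf-8-sig` encoding adds a byte-order mark, which helps Excel recognize the file as UTF-8 so accented characters survive when you double-click it open.

```python
import csv


def export_rows_to_csv(rows, path, fieldnames):
    """Write a list of dicts to a CSV file that Excel can open directly.

    Keys missing from a row are left blank; keys not listed in fieldnames are ignored.
    Returns the number of data rows written.
    """
    with open(path, "w", newline="", encoding="utf-8-sig") as f:
        writer = csv.DictWriter(f, fieldnames=fieldnames, restval="", extrasaction="ignore")
        writer.writeheader()
        writer.writerows(rows)
    return len(rows)
```

For example, `export_rows_to_csv(scraped, "products.csv", ["name", "price"])` produces a file whose columns appear in the order you list them.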

Here is what you should look out for in a no-code web scraper:

- Ease of use

If using a no-code scraper feels like learning to code, you’re better off building your own. A learning curve is expected, but the tool should still be intuitive and easy to navigate.

- Browser emulation capabilities

Most modern websites build the page as you browse. If your data scraping tool can’t handle JavaScript rendering, scrolling, clicking, or login flows, it’s going to miss everything. Real scraping means simulating a browser session. That includes waiting for dynamic content, handling delays, and interacting with the page before trying to scrape data.

- Element targeting precision

Once your tool can act like a real browser, the next question is: can it grab what you need precisely and repeatably? A serious no-code web scraper should support CSS selectors and XPath.

Point-and-click is fine for static pages, but the second your data lives inside a dropdown, a modal, or a nested element, you need deeper targeting. Good scrapers also surface suggestions from the page structure, saving you from endless guesswork. And if the data’s hidden, the tool should know how to dig.

- Pagination handling

Once the tool finds the right data, it needs to keep going, because sometimes the most useful data spans multiple pages. Look for a scraper that supports both common pagination strategies: click-based navigation and URL pattern crawling.

- Scheduler and automation support

You don’t want to click 'Run' every morning. A good no-code scraper should give you scheduling control that fits your workflow: it should run the scraping job automatically, send the results to Excel, and alert you if something breaks.

- Rate limits, CAPTCHA handling, and IP rotation

Make no mistake: websites don’t roll out the red carpet for your scraper. They rate-limit, fingerprint, and throw CAPTCHAs the second your scraper stops acting human. You need a no-code tool that fights back quietly. Look for built-in CAPTCHA solving, randomized delays, and full proxy rotation support.
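Here's what precise targeting means in practice, using the limited XPath support in Python's built-in `xml.etree.ElementTree`. It's an illustration only: ElementTree needs well-formed markup and supports just a subset of XPath, so real-world HTML usually calls for a more tolerant parser such as lxml. The product-card markup and class names are made up. Note how the exact-match predicate `[@class='price']` picks the current price and skips the element whose class is `price old`.

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed product-card markup (real pages are rarely this clean)
SNIPPET = """
<div>
  <div class="card">
    <h2>Laptop A</h2>
    <span class="price">899.00</span>
    <span class="price old">999.00</span>
  </div>
  <div class="card">
    <h2>Laptop B</h2>
    <span class="price">749.00</span>
  </div>
</div>
"""


def current_prices(markup: str) -> list:
    """Return (title, price) pairs from every card, matching class="price" exactly."""
    root = ET.fromstring(markup.strip())
    results = []
    for card in root.iterfind(".//div[@class='card']"):
        title = card.findtext("h2", default="").strip()
        price = card.find("span[@class='price']")  # exact match: skips "price old"
        if price is not None:
            results.append((title, float(price.text)))
    return results
```

A looser selector (for example, anything whose class merely contains "price") would also grab the crossed-out old price, which is the kind of silent error precise targeting avoids.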

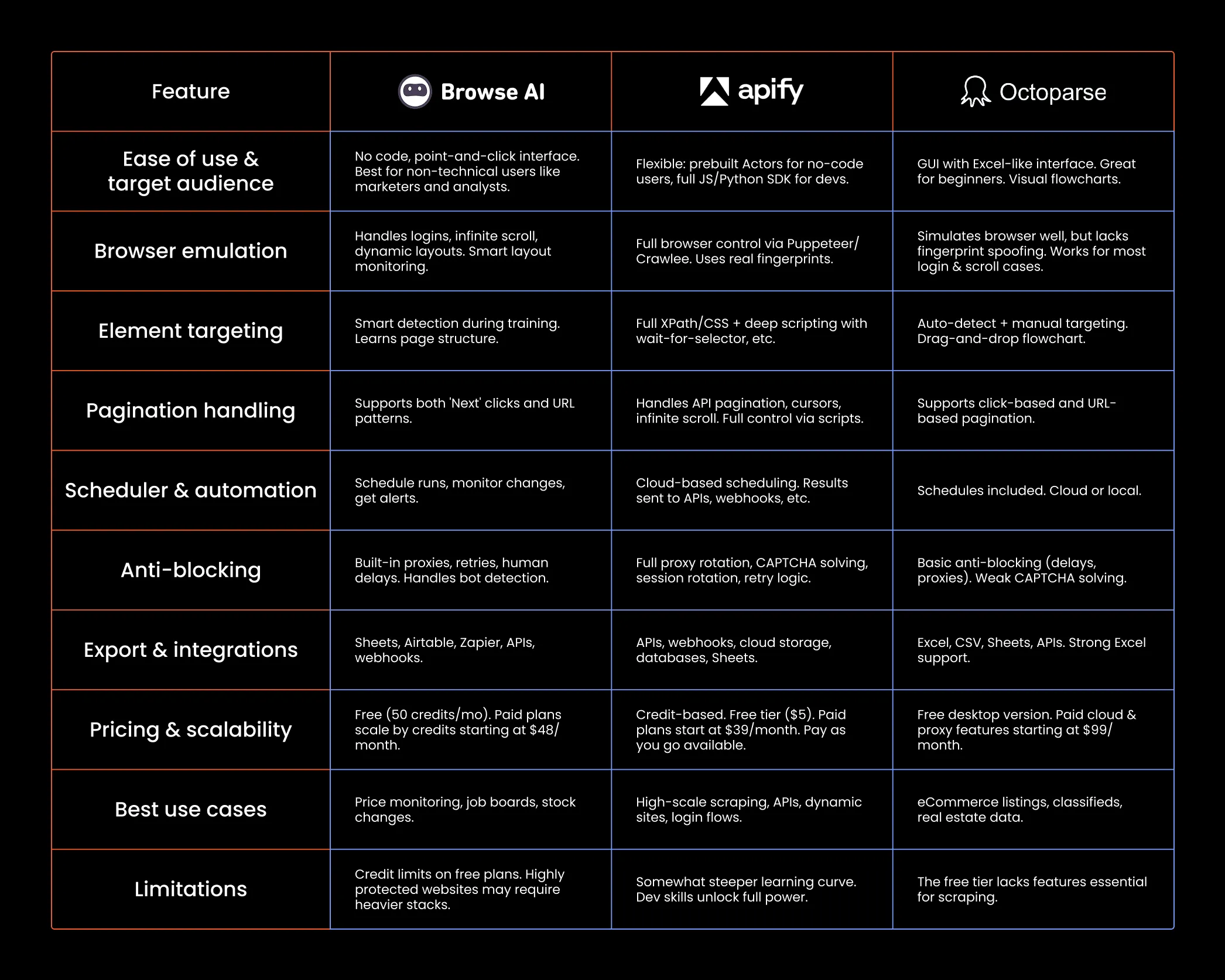

Tool highlights

Let’s compare popular no-code web scrapers on the internet right now based on the features we just discussed:

Method 3: Using website APIs

Sometimes, scraping is the wrong move. If the website offers an API, start there.

You won’t have to fight dynamic pages or dodge CAPTCHAs, and you can automate the pull directly into Excel using Power Query, Python, or a no-code tool with API support.

But here’s the tradeoff: not every site offers an API, and even when they do, the good stuff is often behind an API key, usage limits, or a paywall. Still, if your goal is to scrape data from a website to Excel automatically, this is the most stable path when it's available.

Before you load up a scraper, check the site’s /api/ directory or developer docs. If an API is there, skip the scraping.

How does it work in practice?

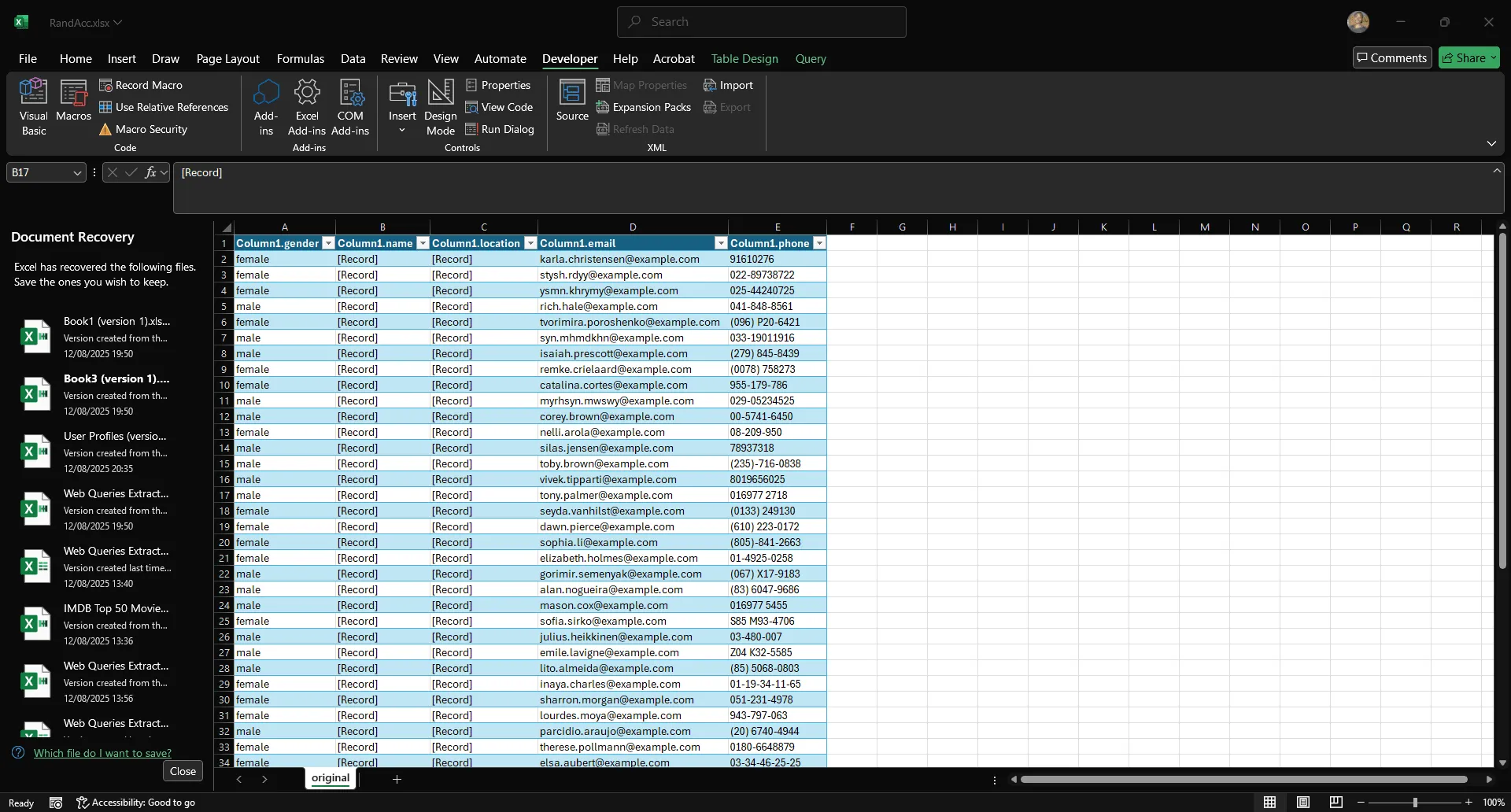

Let’s say you're a developer testing a platform and need realistic fake user profiles. A natural choice would be to use a free, open-source API like Random User Generator. If you want 50 profiles, you'd use this URL:

https://randomuser.me/api/?results=50

Now let’s use Power Query to import that data directly into Excel.

Step 1: Start a new workbook

Open a new Excel workbook and name it whatever you like.

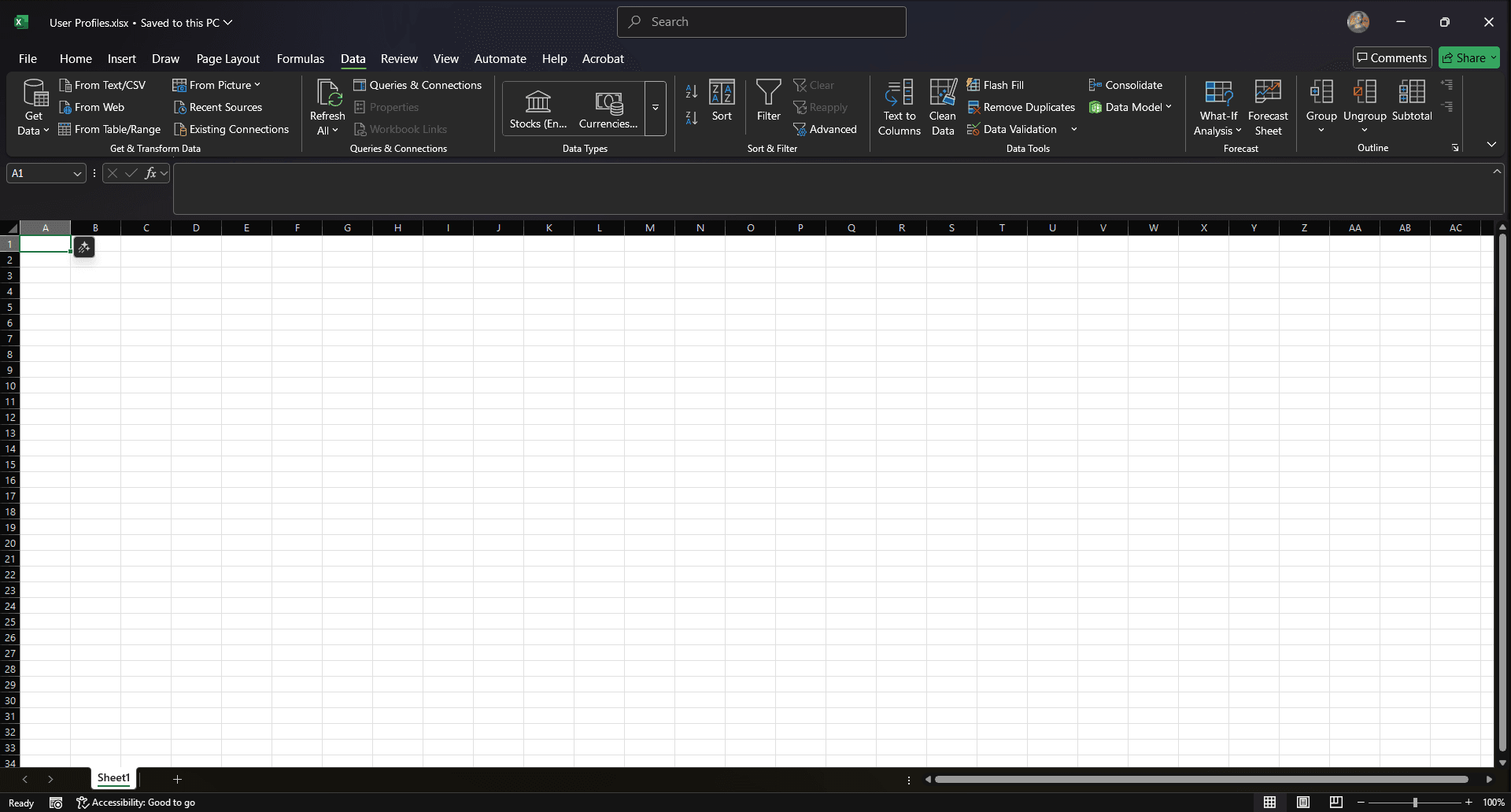

Step 2: Navigate to the data tab

In the top ribbon, go to 'Data.'

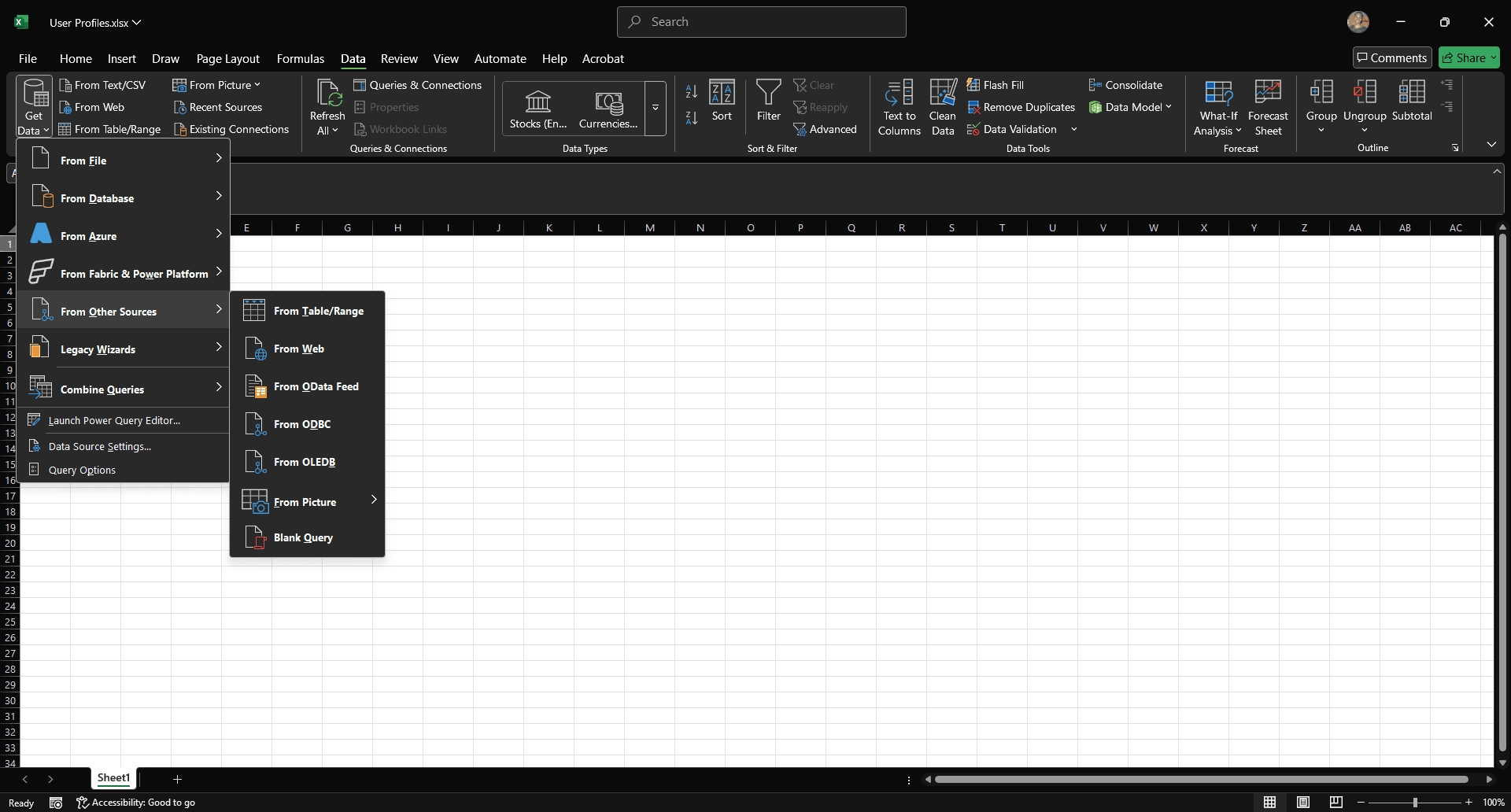

Step 3: Select 'From Web' source

Click 'Get Data,' hover over 'From Other Sources,' then select 'From Web' from the menu that appears.

Step 4: Paste the URL

In the dialog that appears, select 'Basic' and paste the API URL: https://randomuser.me/api/?results=50

Step 5: Connect to the Power Query Editor

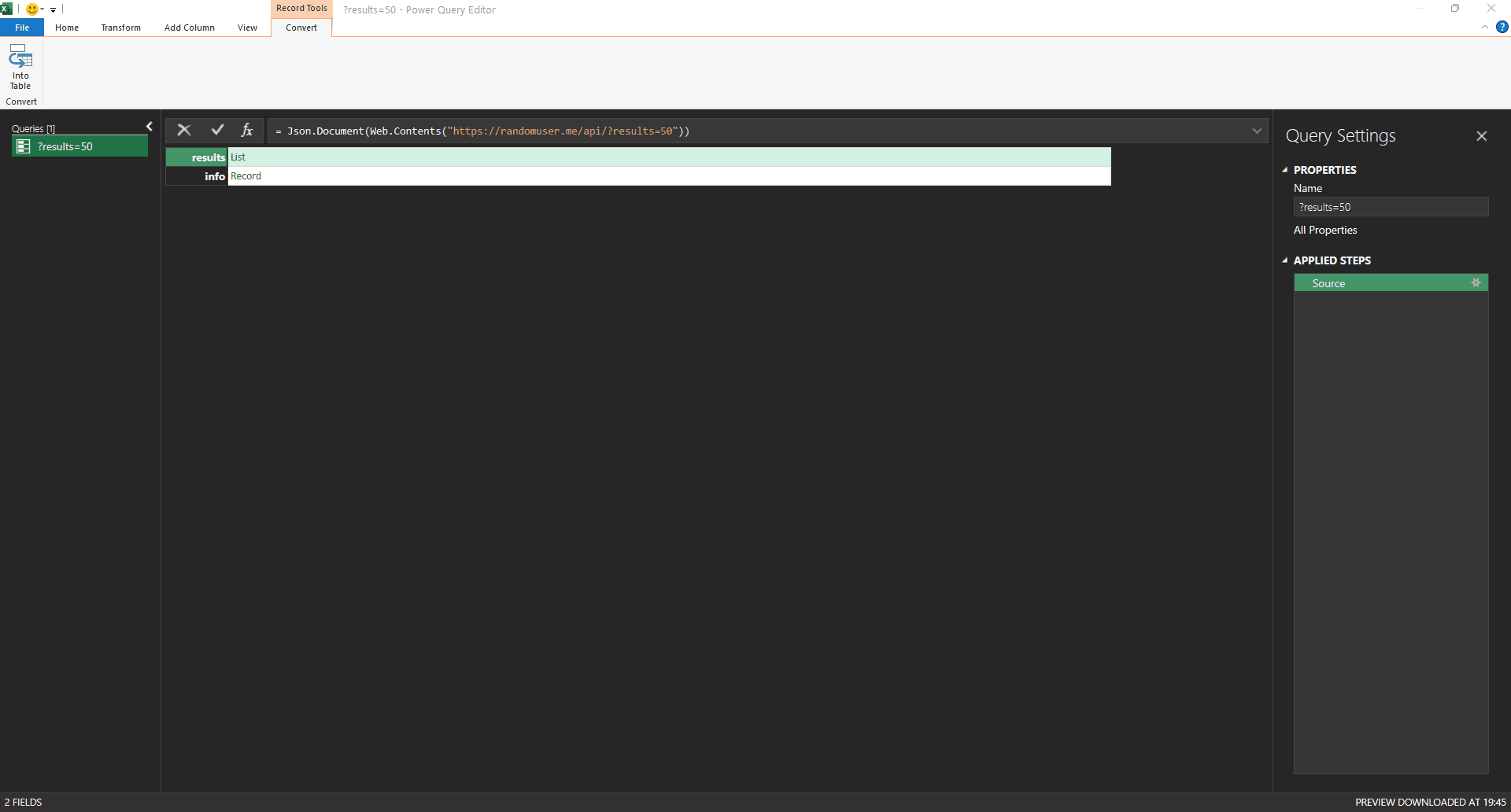

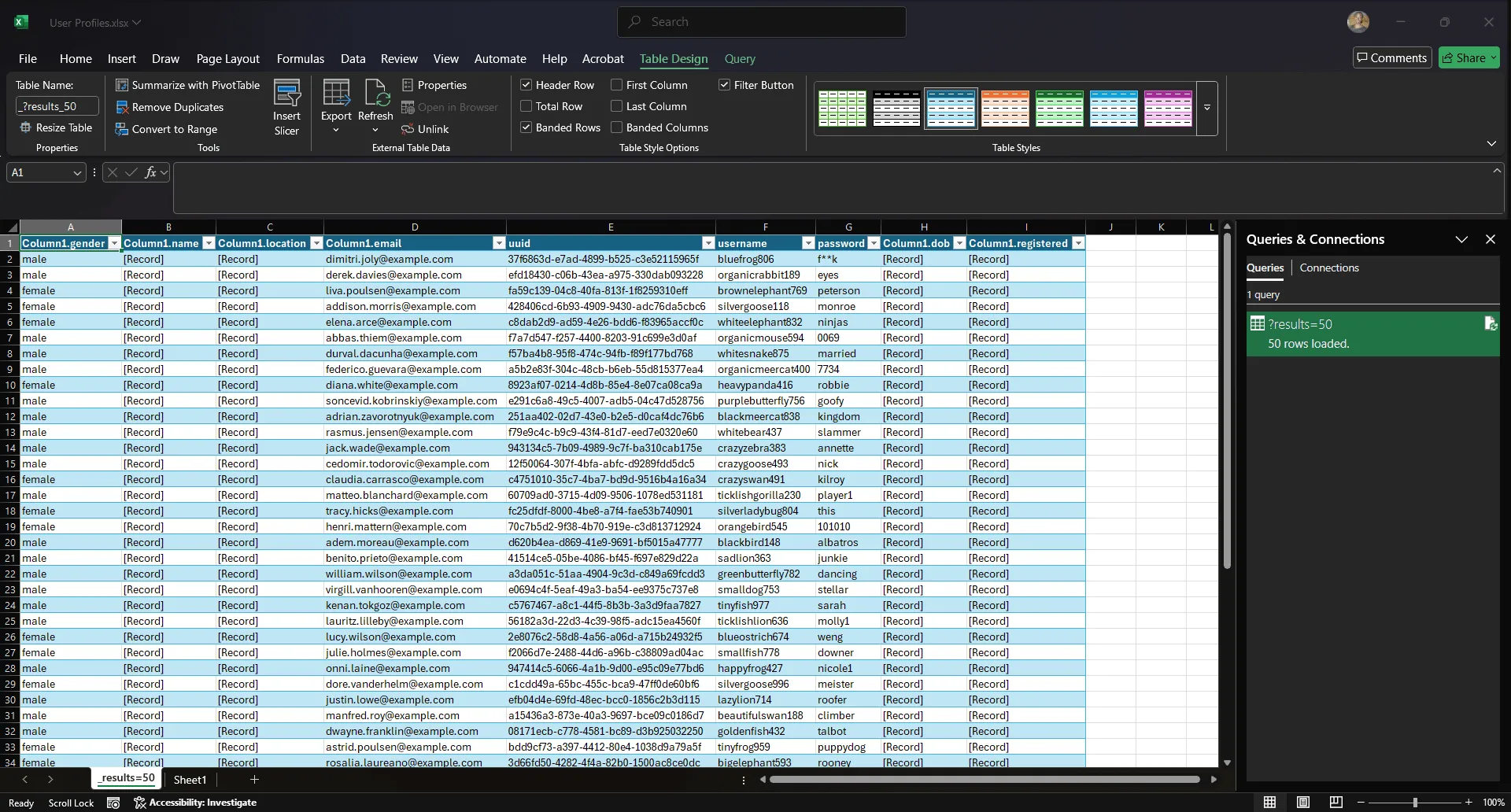

Click 'OK' to connect and wait for the Power Query Editor to load.

Step 6: Review the API response

You’ll see a preview of the response with two fields: results (a List) and info (a Record).

Step 7: Expand the results list

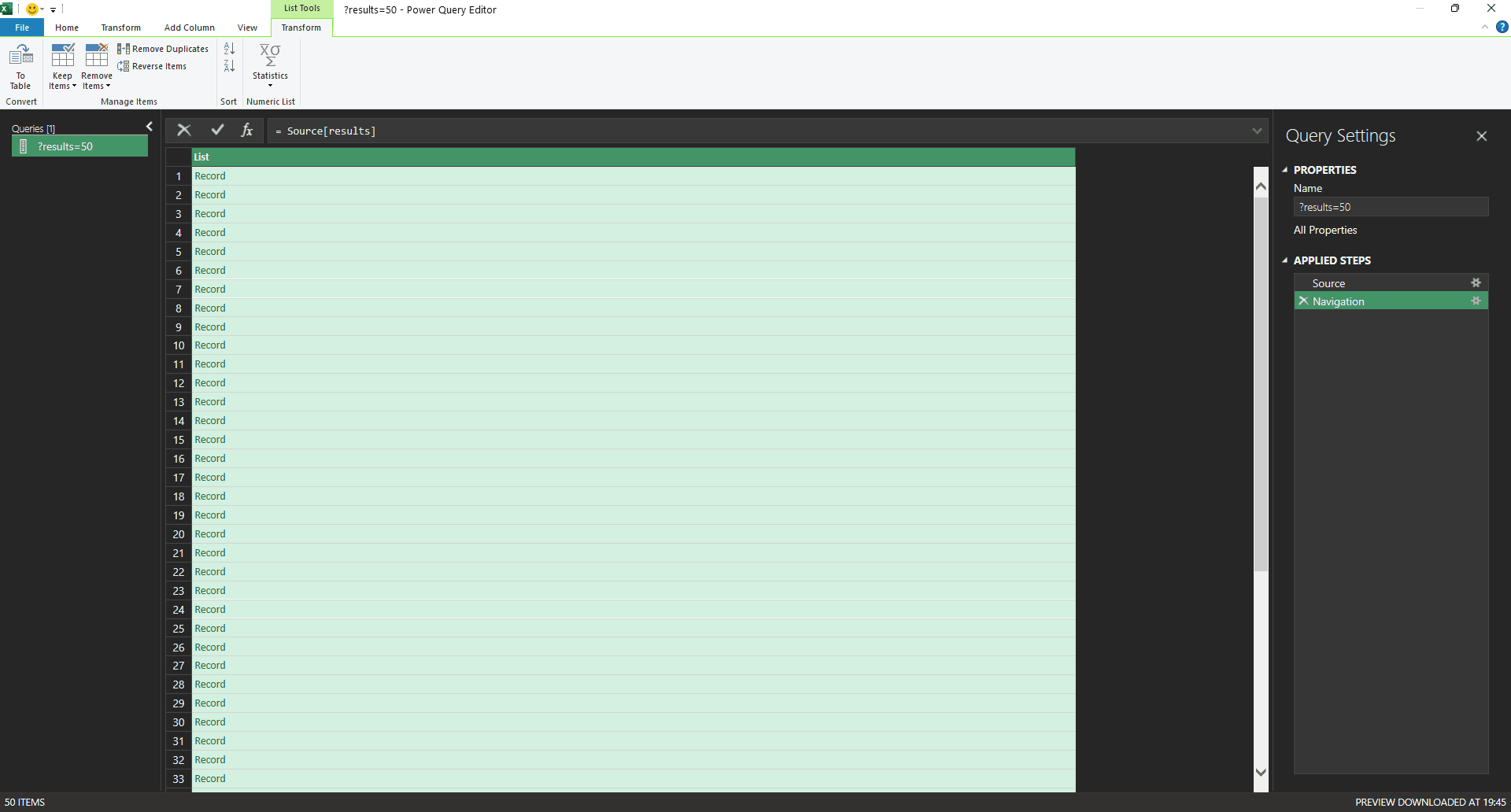

Click the word 'List' next to results. This opens the list of user records returned by the API.

Step 8: Convert list to table

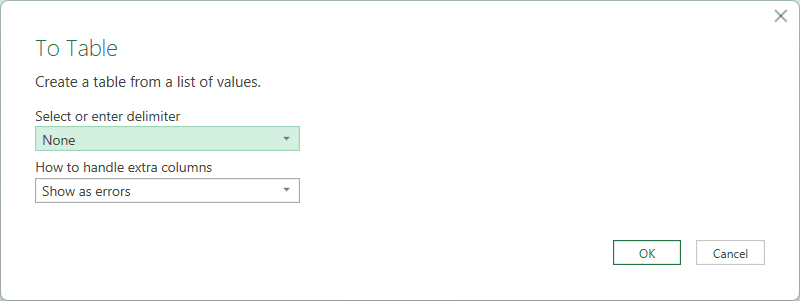

In the ribbon, under the List Tools tab, click 'To Table.' It’s the first option on the left. This converts the list of records into a table so you can expand and work with the data in Excel. A 'To Table' dialog box will appear.

Step 9: Confirm table settings

In the To Table window that appears, leave the default settings as they are: ‘Delimiter: None’ and ‘Extra Columns: Show as errors.’ Just click 'OK' to continue. We’re not splitting any text or handling extra columns here, so no changes are needed.

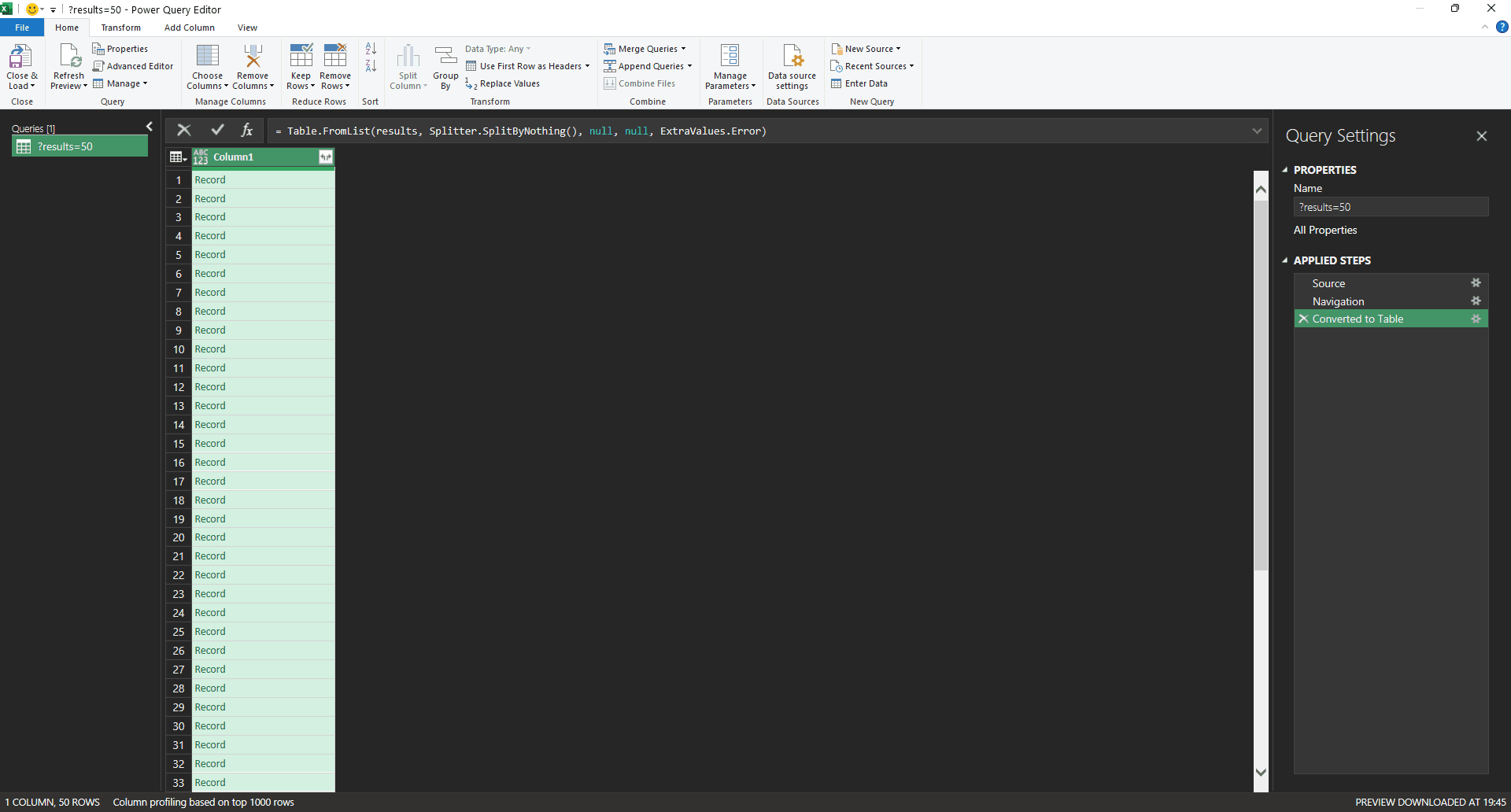

You will now see a table with a column named Column1 containing multiple record rows.

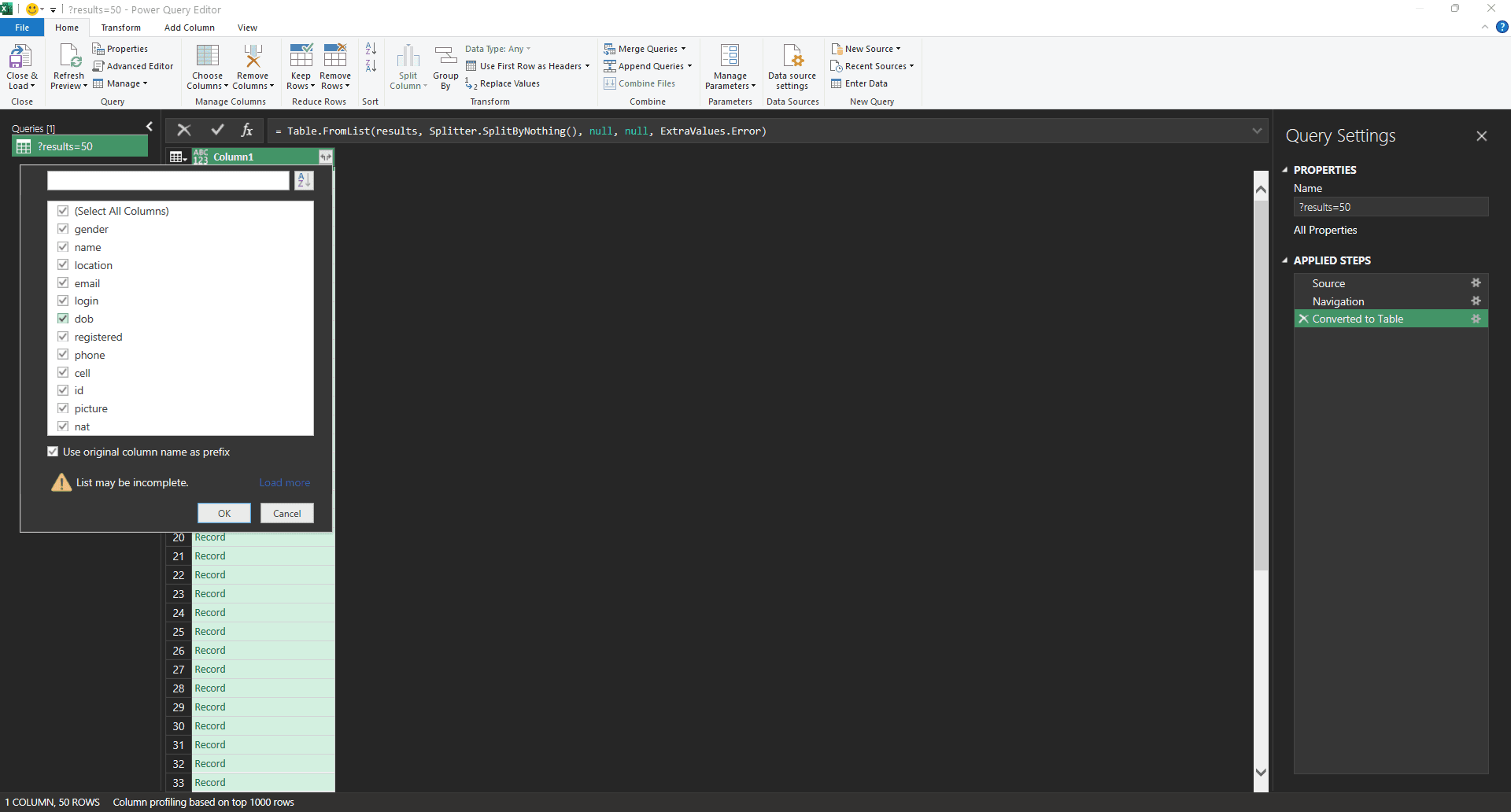

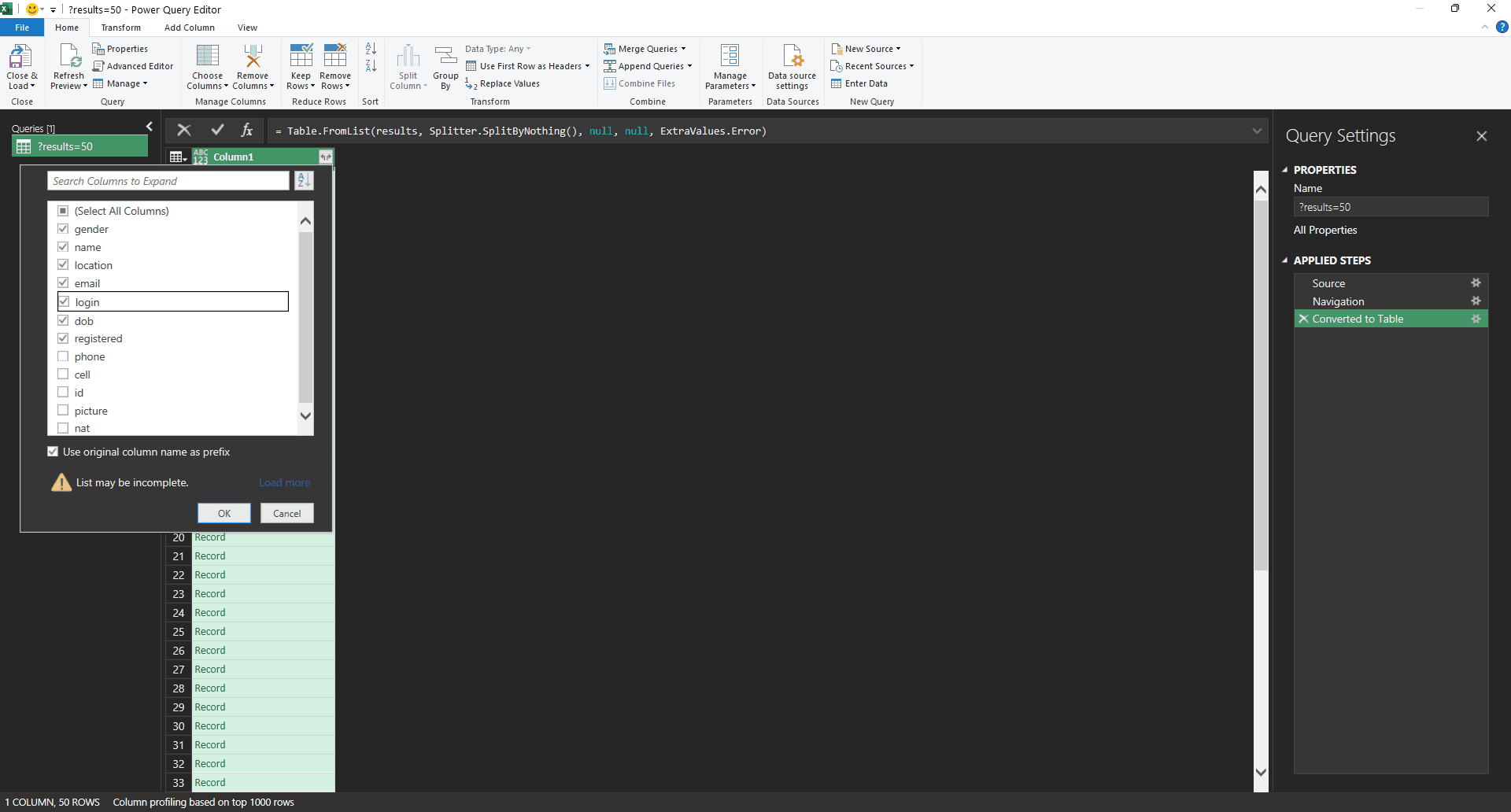

Step 10: Expand record fields

Next to Column1, click the expand icon (the one with two arrows pointing away from each other). A list of available fields will appear, all checked by default.

Step 11: Choose specific fields

Uncheck 'Select All Columns,' then manually check only the fields you want to keep in your Excel sheet.

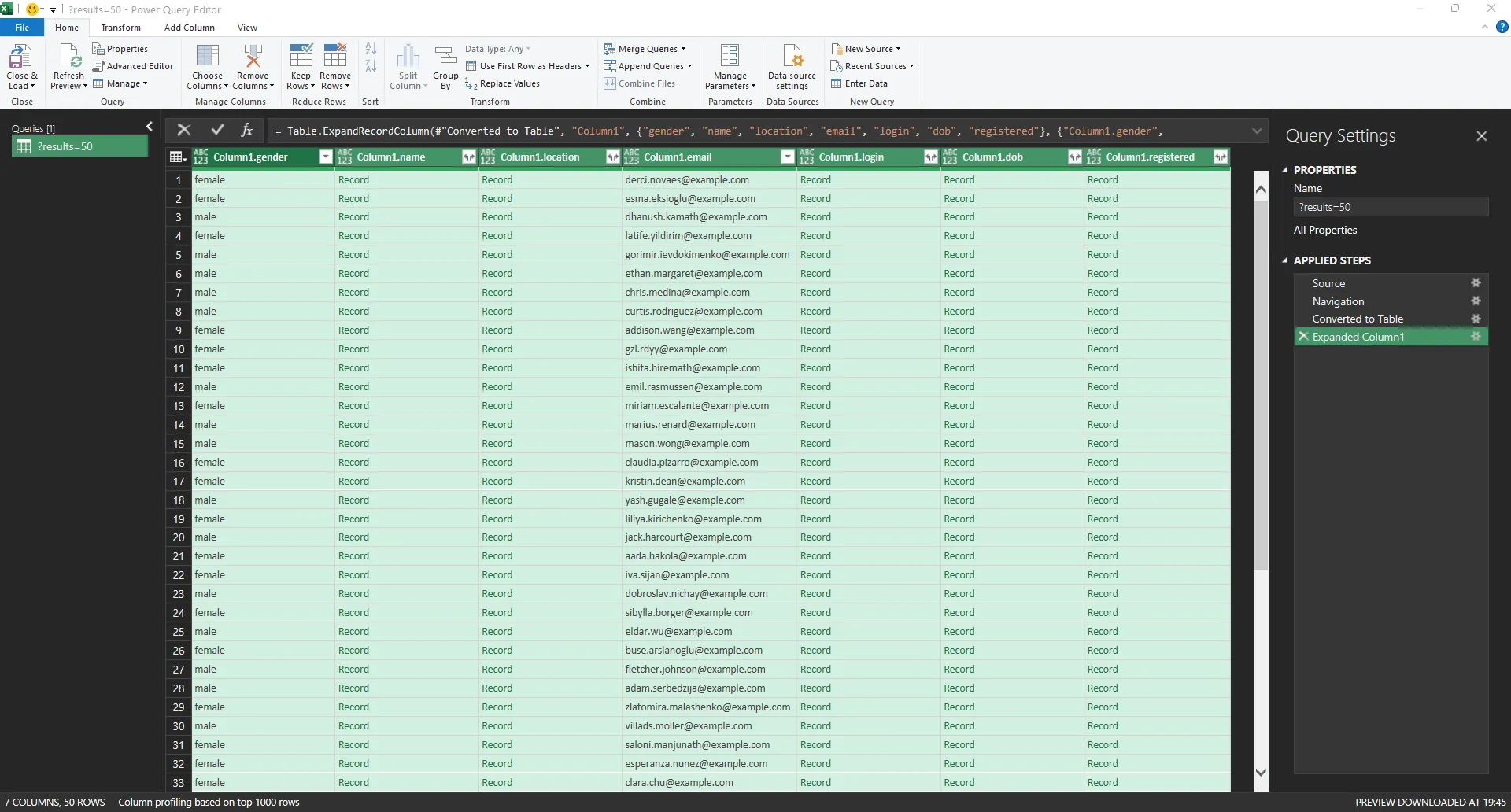

Step 12: Load flattened table

Click 'OK.' Your data will now appear in a flat table, though some columns, like name and location, will still contain nested records.

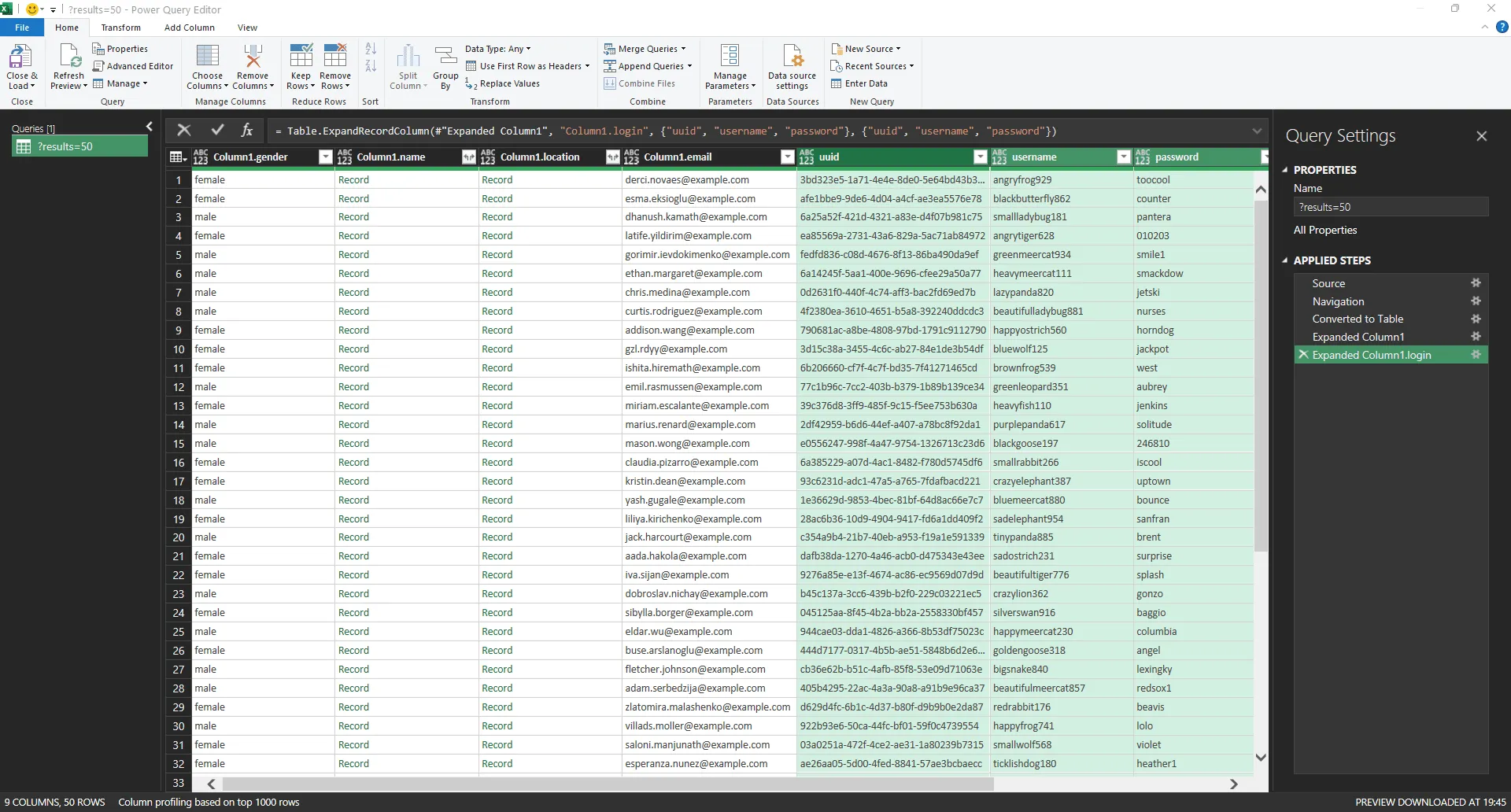

Step 13: Expand nested columns

For any column that still displays as a record (such as login, name, or location), click the expand icon next to the column name. Then, select the specific fields you want to include. For example, expanding the login column lets you choose fields like username or uuid.

Step 14: Load data to Excel

Once all your desired fields are visible, go to the top ribbon and click 'Close & Load.' Power Query will close, and the data will begin loading into your Excel sheet automatically.

That's it!
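If you'd rather script the pull, Steps 10 through 13 boil down to flattening nested records. Here is a minimal Python sketch, assuming the response shape seen in the editor (a `results` list of user objects). The dotted column names such as `name.first` are our own convention, loosely mirroring Power Query's "Use original column name as prefix" option, and the test record is hand-written, not real API output.

```python
import json
from urllib.request import urlopen

API_URL = "https://randomuser.me/api/?results=50"


def fetch_users(url: str = API_URL) -> list:
    """Download the API response and return the list stored under "results"."""
    with urlopen(url, timeout=15) as resp:
        payload = json.load(resp)
    return payload["results"]


def flatten(record: dict, prefix: str = "") -> dict:
    """Turn nested dicts into one level, e.g. {"name": {"first": "Ana"}} -> {"name.first": "Ana"}."""
    flat = {}
    for key, value in record.items():
        column = f"{prefix}{key}"
        if isinstance(value, dict):
            flat.update(flatten(value, prefix=f"{column}."))  # expand nested record
        else:
            flat[column] = value
    return flat


def to_rows(users: list, columns: list) -> list:
    """Keep only the chosen columns, like unchecking fields in the expand dialog."""
    return [[flatten(u).get(c, "") for c in columns] for u in users]
```

For example, `to_rows(fetch_users(), ["gender", "name.first", "name.last", "email"])` gives plain rows you can write to a CSV file or paste into a sheet.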

Other automation approaches

Beyond Microsoft Excel’s built-in tools, no-code web scraping software, and APIs, there are situations where you’ll want more control over what happens after the data lands in your spreadsheet. That’s where Visual Basic for Applications (VBA) comes in.

Visual Basic for Applications (VBA)

VBA is Microsoft’s long-standing scripting language for automating tasks in Excel and other Office apps. It can scrape simple pages through XMLHTTP or WinHTTP, but that’s not where it’s most valuable today.

Modern websites with JavaScript or bot protection will break that flow instantly. Where VBA shines is in taking the data you’ve already imported, whether from an API, Excel web queries, or a web scraping tool, and automating what happens next.

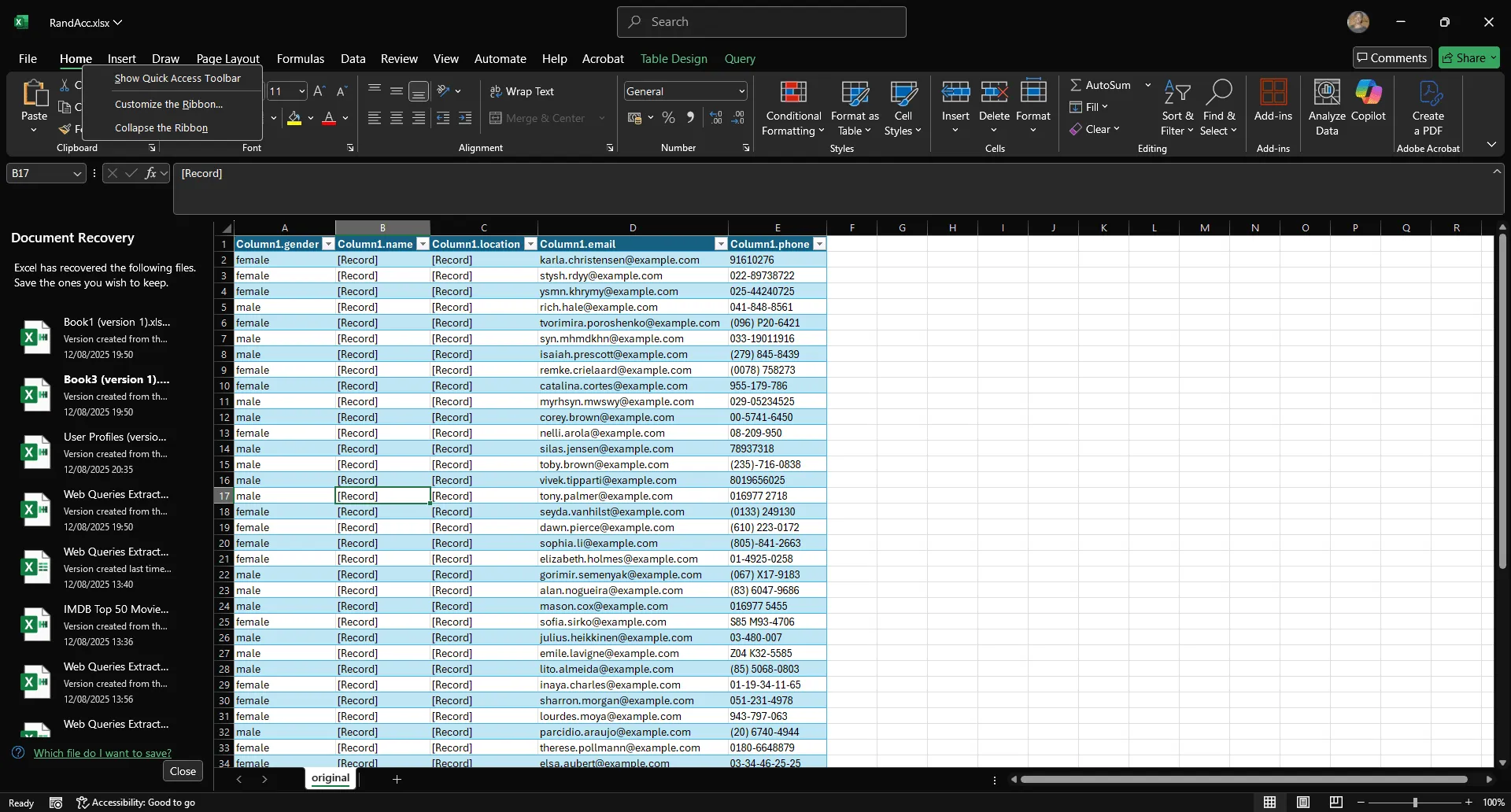

Say you just pulled in a list of 50 fake profiles from the Random User Generator API into your Excel spreadsheet. You need to separate out only female profiles and send them to another team without touching the rest of your dataset.

A small VBA macro can filter those rows, copy them to a new sheet, and save the file automatically.

Let's talk about how you can do that:

Step 1: Enable the Developer tab

In your open worksheet, enable the Developer tab by right-clicking anywhere on the ribbon (e.g., ‘Home’ or ‘Insert’) and selecting 'Customize the Ribbon.'

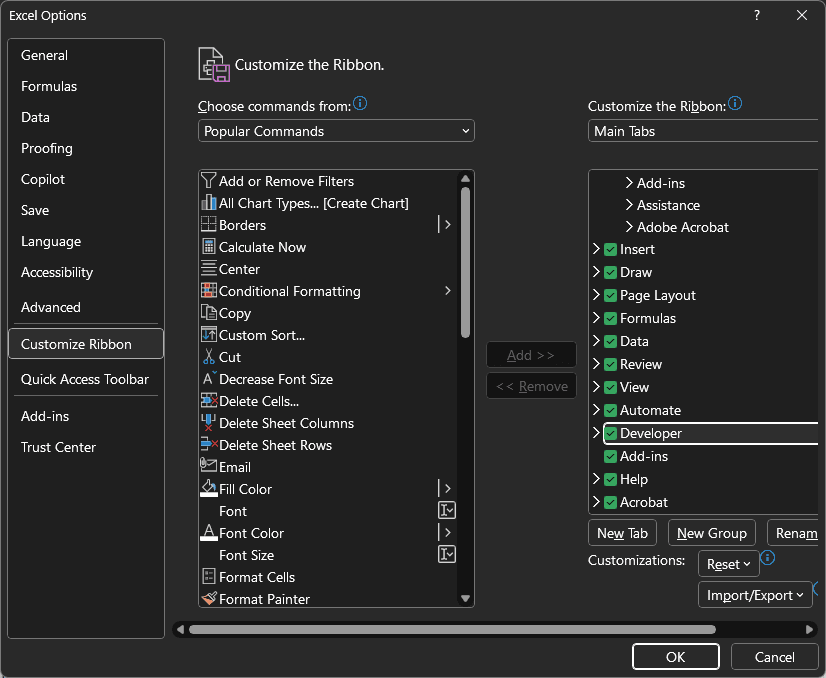

Step 2: Add developer tab to the ribbon

The Excel Options window will appear. In the right-side panel, check the box for 'Developer.'

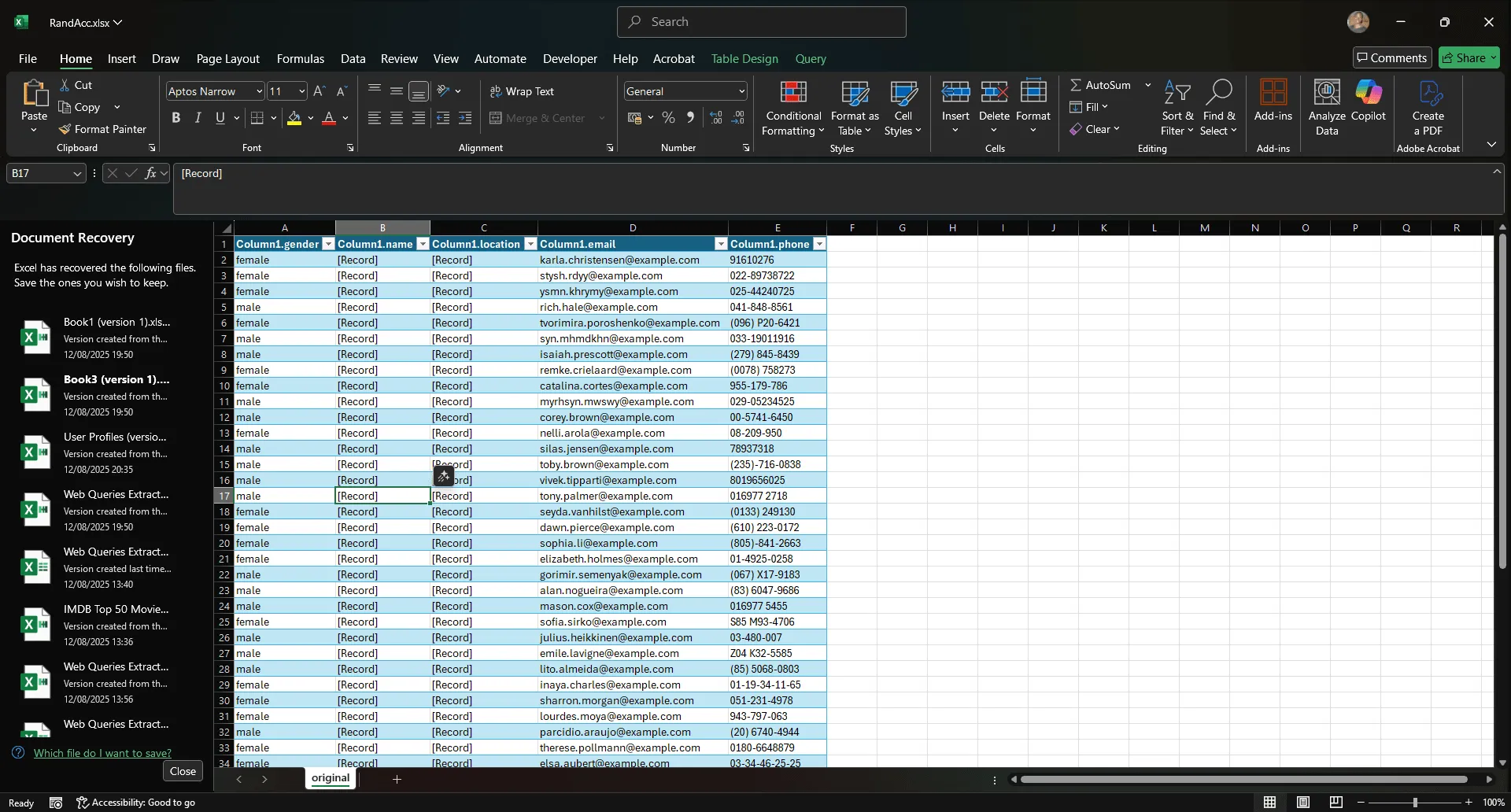

Step 3: Confirm and view developer tab

Click 'OK.' You'll now see a new tab on the ribbon called 'Developer.'

Step 4: Open the Visual Basic editor

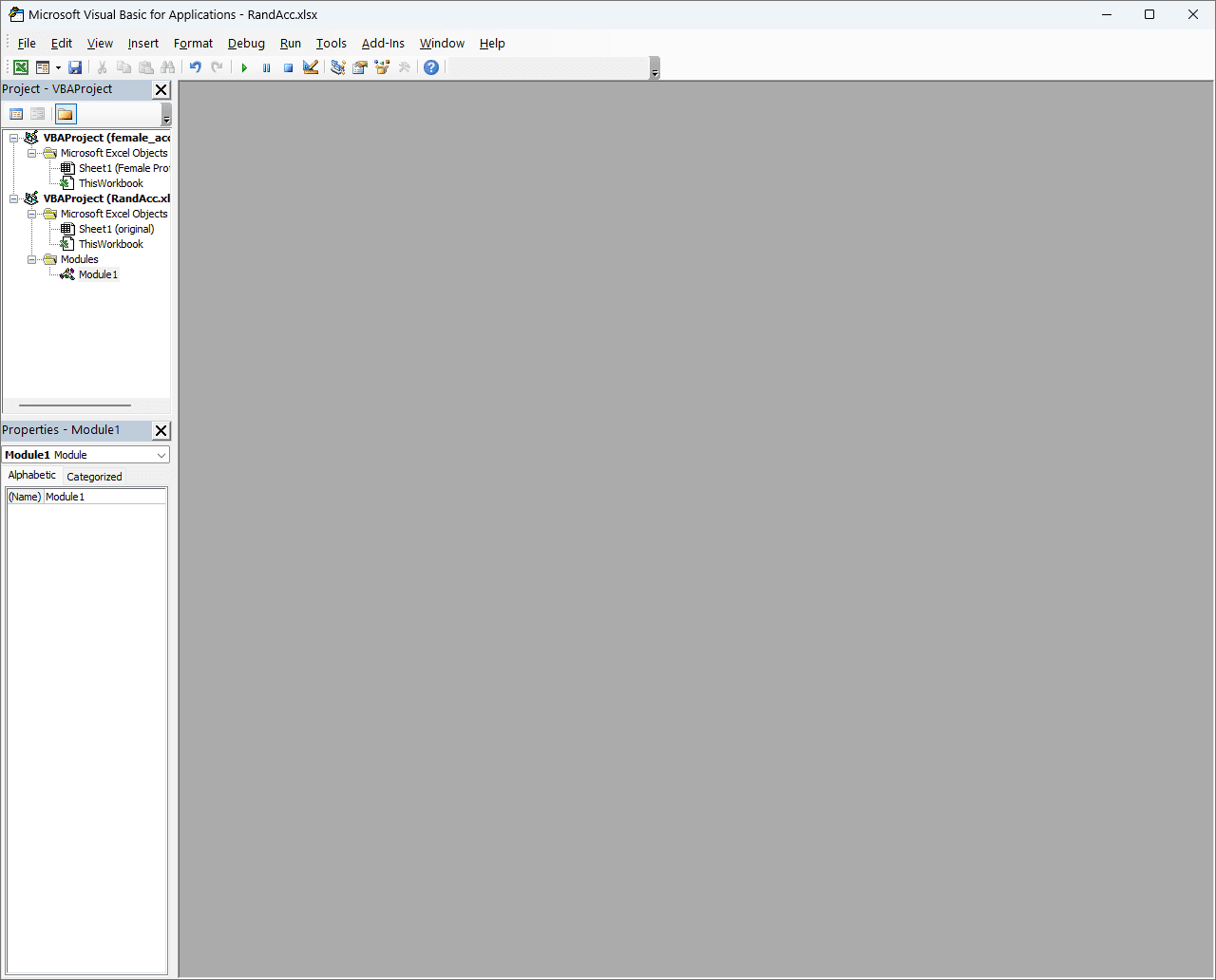

Click the 'Developer' tab, then click the 'Visual Basic' button on the far left.

Step 5: View the Visual Basic editor

You'll now see the VBA editor:

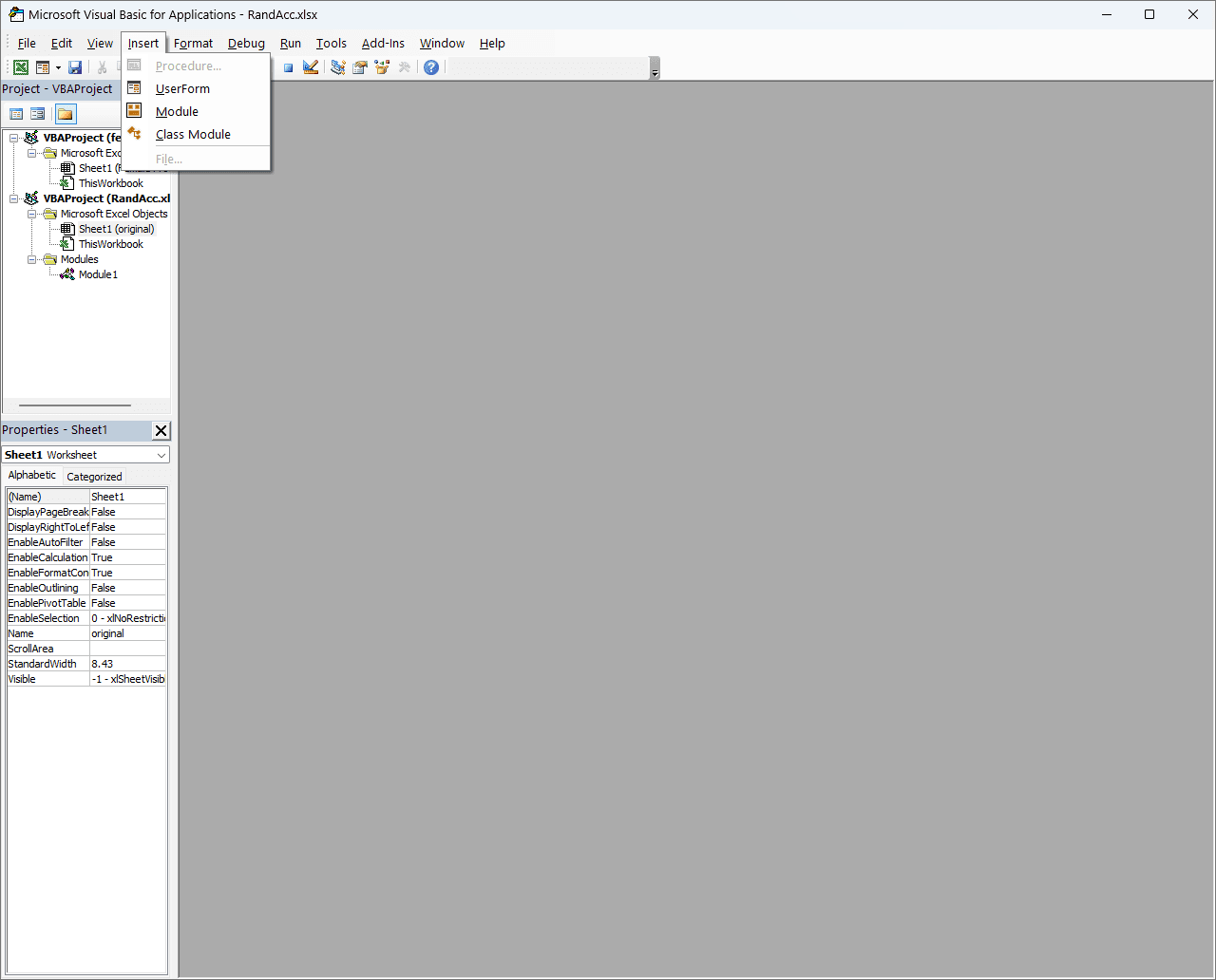

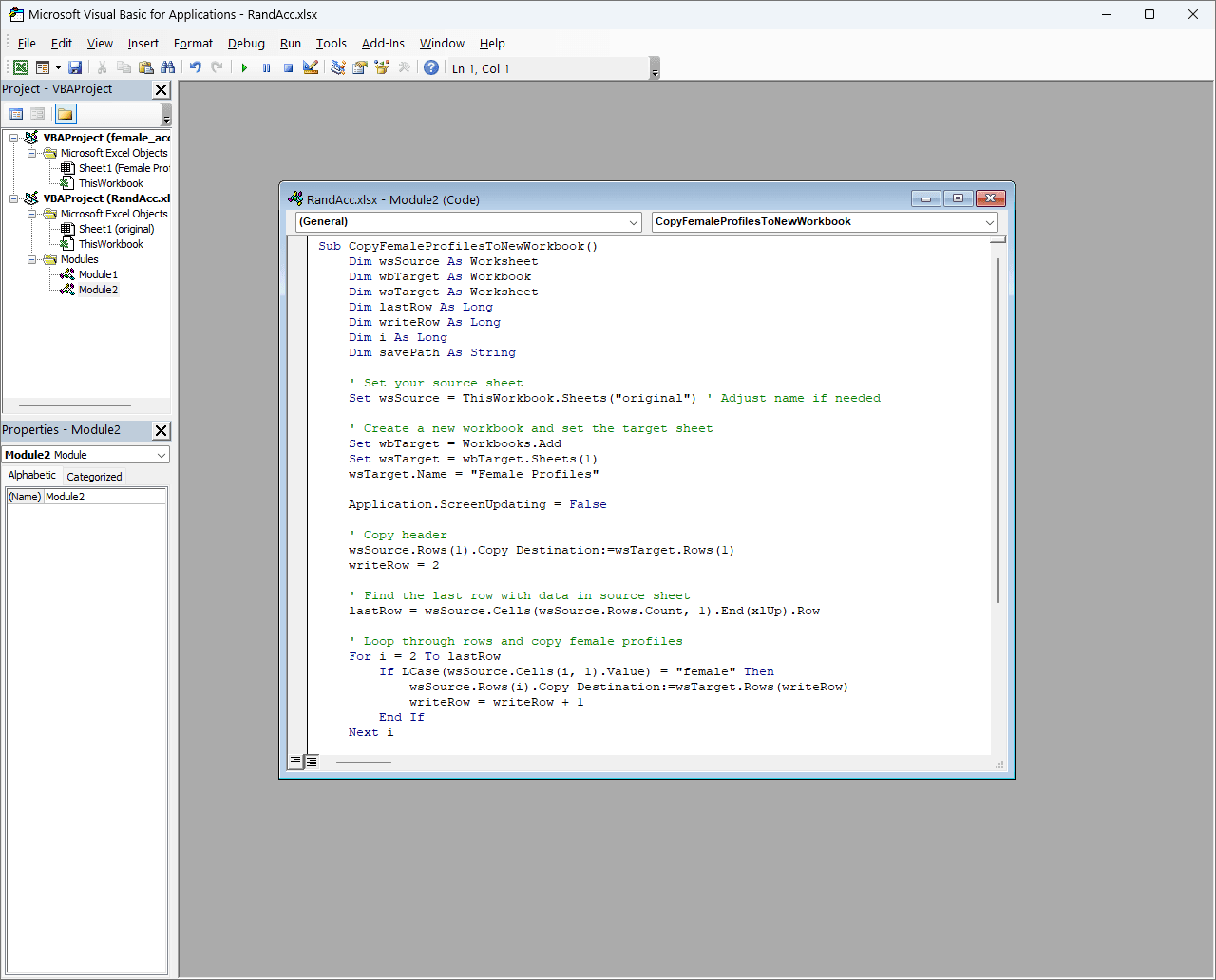

Step 6: Insert module and paste the code

Click 'Insert,' then select 'Module.'

Paste this code in:

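```vba
Sub CopyFemaleProfilesToNewWorkbook()
    ' Column that holds the gender value (column A by default); adjust if needed
    Const GENDER_COL As Long = 1

    Dim wsSource As Worksheet
    Dim wbTarget As Workbook
    Dim wsTarget As Worksheet
    Dim lastRow As Long
    Dim writeRow As Long
    Dim i As Long
    Dim savePath As String

    ' ThisWorkbook.Path is empty until the workbook has been saved at least once
    If ThisWorkbook.Path = "" Then
        MsgBox "Save this workbook first so the export has a folder to go to.", vbExclamation
        Exit Sub
    End If

    On Error GoTo CleanUp
    Application.ScreenUpdating = False

    ' Set your source sheet
    Set wsSource = ThisWorkbook.Sheets("original") ' Adjust name if needed

    ' Create a new workbook and set the target sheet
    Set wbTarget = Workbooks.Add
    Set wsTarget = wbTarget.Sheets(1)
    wsTarget.Name = "Female Profiles"

    ' Copy header
    wsSource.Rows(1).Copy Destination:=wsTarget.Rows(1)
    writeRow = 2

    ' Find the last row with data in the gender column
    lastRow = wsSource.Cells(wsSource.Rows.Count, GENDER_COL).End(xlUp).Row

    ' Loop through rows and copy female profiles (case-insensitive, ignores stray spaces)
    For i = 2 To lastRow
        If LCase$(Trim$(CStr(wsSource.Cells(i, GENDER_COL).Value))) = "female" Then
            wsSource.Rows(i).Copy Destination:=wsTarget.Rows(writeRow)
            writeRow = writeRow + 1
        End If
    Next i

    ' Build file path to save the new workbook next to this one
    savePath = ThisWorkbook.Path & Application.PathSeparator & "female_acc.xlsx"

    ' Save (overwriting an earlier export without prompting) and close the new workbook
    Application.DisplayAlerts = False
    wbTarget.SaveAs Filename:=savePath, FileFormat:=xlOpenXMLWorkbook
    Application.DisplayAlerts = True
    wbTarget.Close SaveChanges:=False

    MsgBox "Female profiles saved to: " & savePath, vbInformation

CleanUp:
    ' Always restore Excel's settings, even if something above failed
    Application.DisplayAlerts = True
    Application.ScreenUpdating = True
    If Err.Number <> 0 Then MsgBox "Export failed: " & Err.Description, vbCritical
End Sub
```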

Here's what that looks like in practice:

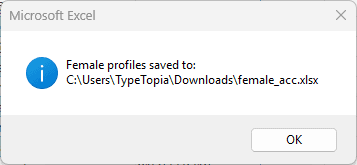

Step 7: Run the script and view save location

Click 'Run' (or press F5 with your cursor inside the macro). A message will confirm where the new workbook was saved.

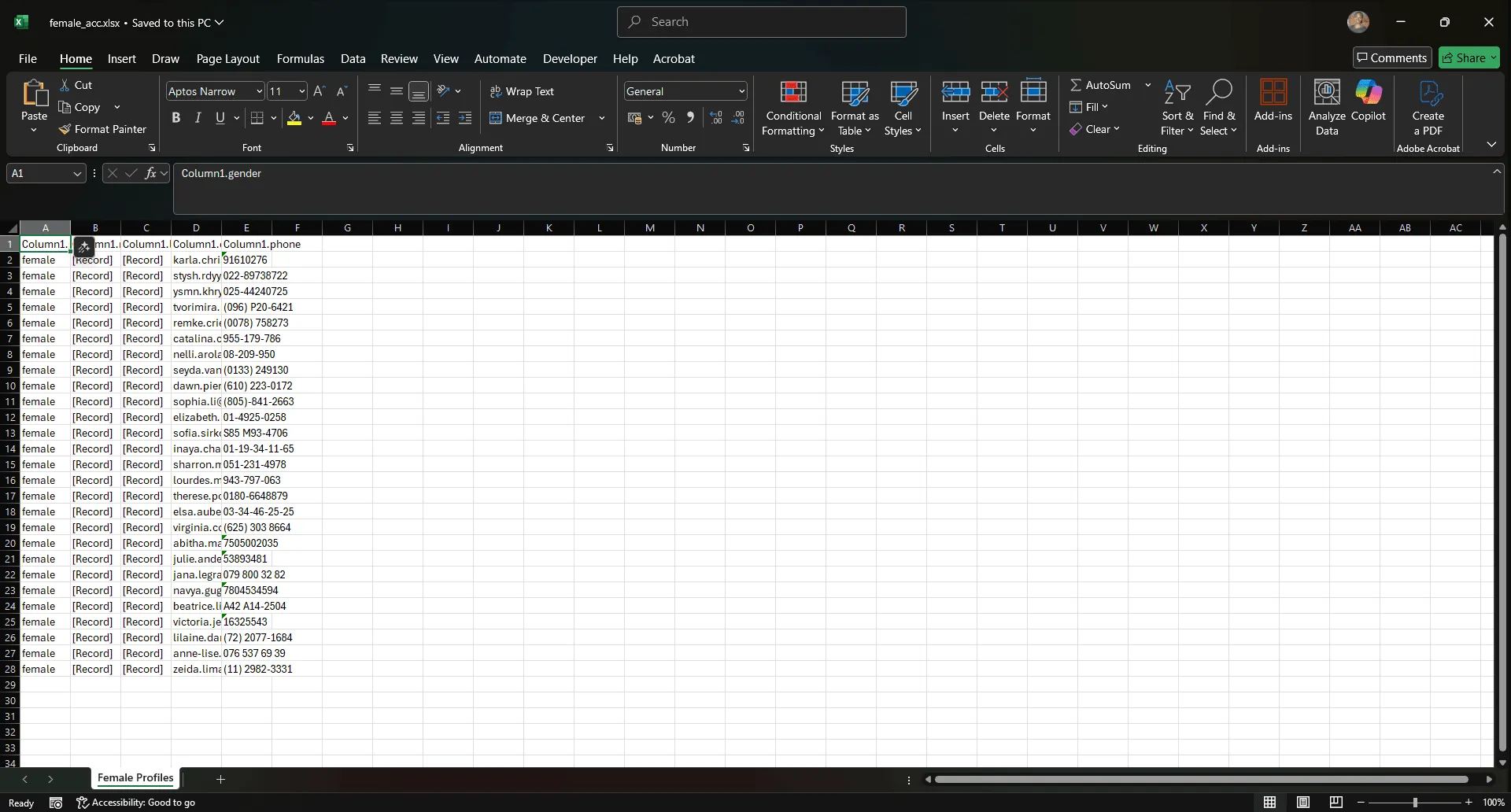

Step 8: Open the new file

That's it! Open the file, and you'll now see the exported data.

Python automation (for developers)

At a certain point, you hit the ceiling. Excel can’t parse JavaScript. Web queries choke on redirects. No-code scrapers start timing out. Your target blocks your IP, flags your behavior, or starts returning empty shells.

That’s when you know it’s time to switch gears. If you're trying to extract data from a website to Excel automatically and the usual tools aren't cutting it, the answer is Python.

For sites with low defenses, Python's requests library paired with a rotating proxy service can be surprisingly effective. It’s fast, scriptable, and easy to run in batches. When structured right, this approach handles most scraping tasks where the HTML is server-rendered. We walk through exactly how to do this step-by-step in our "How to Scrape Amazon Product Data With Python in 2025" guide.

But when the site is more defensive, you’ll need deeper customization with headless browsers. Selenium with Undetected ChromeDriver gives you full control over a real browser environment, stealth included.

This lets you log in, click through flows, load hidden content, and scrape website data like a human would. Combine that with residential proxies, timezone spoofing, and behavioral scripts, and you’ve built a bot that blends in. It’s slower, but when scraping at scale or under fire, stability is everything.

The key is to pick the stack that matches the site. Don’t overbuild when a simple pull works, but don’t underestimate how aggressively sites now block scrapers. The AI boom has only made them more aggressive.
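For the requests-plus-rotating-proxy route, the core loop is: pause a random interval, pick the next proxy in the pool, send the request. A minimal sketch of that pattern; to stay dependency-free it uses the standard library's `urllib` rather than `requests`, the proxy URLs are placeholders, and the 1 to 4 second delay range is an arbitrary example, not a recommendation.

```python
import itertools
import random
import time
from urllib.request import ProxyHandler, Request, build_opener

# Placeholder endpoints: substitute the proxy URLs your provider gives you
PROXIES = [
    "http://user:pass@proxy1.example.com:8000",
    "http://user:pass@proxy2.example.com:8000",
]


class RotatingFetcher:
    """Send each request through the next proxy in the pool, after a random pause."""

    def __init__(self, proxies, min_delay=1.0, max_delay=4.0, sleep=time.sleep):
        if not proxies:
            raise ValueError("need at least one proxy")
        self._pool = itertools.cycle(proxies)  # round-robin through the pool
        self.min_delay = min_delay
        self.max_delay = max_delay
        self._sleep = sleep  # injectable so the timing logic can be tested without waiting

    def next_proxy(self) -> str:
        return next(self._pool)

    def pause(self) -> float:
        """Sleep for a random interval so requests don't arrive on a fixed rhythm."""
        delay = random.uniform(self.min_delay, self.max_delay)
        self._sleep(delay)
        return delay

    def fetch(self, url: str, timeout: float = 15) -> str:
        self.pause()
        proxy = self.next_proxy()
        opener = build_opener(ProxyHandler({"http": proxy, "https": proxy}))
        req = Request(url, headers={"User-Agent": "Mozilla/5.0"})
        with opener.open(req, timeout=timeout) as resp:
            return resp.read().decode("utf-8", errors="replace")
```

Calling `RotatingFetcher(PROXIES).fetch(url)` returns the page HTML. If you use `requests` instead, the per-request equivalent is `requests.get(url, proxies={"http": proxy, "https": proxy}, timeout=15)`.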

Best practices

Web scraping is a gray area that can turn black depending on the target. If you're scraping publicly available data, out in the open, served without login or payment walls, you're usually fine.

But once you start pulling data from gated content, password-protected pages, or anything sold behind a paywall, you may have crossed a legal line in many jurisdictions. Always check the site’s robots.txt file. It's not a law, but it tells you what the site owner considers off-limits to crawlers.
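Python can read robots.txt for you with the standard library's `urllib.robotparser`. A minimal sketch; the rules and the `MyExcelBot` user-agent name used to exercise it are invented for illustration.

```python
from urllib.robotparser import RobotFileParser


def robots_from_text(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt rules you already have as text (handy for testing)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def robots_from_site(site_root: str) -> RobotFileParser:
    """Download and parse <site_root>/robots.txt."""
    parser = RobotFileParser(site_root.rstrip("/") + "/robots.txt")
    parser.read()
    return parser
```

`robots_from_site("https://example.com").can_fetch("MyExcelBot", url)` tells you whether that URL is listed as off-limits for your user agent, and `crawl_delay("MyExcelBot")` returns the delay the site asks for, if it sets one.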

Conclusion

You’ve now seen the main routes for scraping website content into Excel automatically.

Built-in Microsoft Excel tools are great for low-friction static web page data extraction when the website wants to cooperate. No-code web scraping tools can help you scrape static and dynamic website content without writing a line of code, but only if the page isn’t built like Fort Knox. If there’s an API available, skip the scraping and just plug it into Excel directly.

But once you’re dealing with dynamic content, CAPTCHAs, or anti-bot systems, it's time to build your own custom Python scraper. And that’s how you win the long game of scraping: not with brute force, but with the right tool at the right moment. Want more tips on extracting website data to Excel without getting blocked? Join our Discord community!

Can I extract data from a website that requires login credentials?

Yes, but it’s more complex. You’ll need to log in first using session-based scraping or browser automation tools like Selenium. You also need to be careful because scraping behind a login may violate the terms of service or legal boundaries.

How can I scrape data from multiple pages of a website into Excel?

Use a scraping tool or Python script with pagination logic to loop through each page. Store the results in a CSV or Excel file as you go. Power Query can sometimes handle multi-page data if the URL pattern is predictable.
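Here is that pagination loop sketched in Python: request page after page until one comes back empty (or a page cap is hit), appending each page's rows to the CSV as you go so a crash halfway through doesn't lose everything. The `fetch_page` callback is a hypothetical hook you'd implement with whatever fetching and parsing approach fits the site, and "an empty page means we're done" is an assumption that holds for many, not all, sites.

```python
import csv
from typing import Callable, List


def scrape_all_pages(fetch_page: Callable[[int], List[list]], out_path: str,
                     header: list, max_pages: int = 100) -> int:
    """Call fetch_page(1), fetch_page(2), ... until it returns no rows, writing rows as they arrive.

    Returns the number of data rows written.
    """
    written = 0
    with open(out_path, "w", newline="", encoding="utf-8-sig") as f:
        writer = csv.writer(f)
        writer.writerow(header)
        for page in range(1, max_pages + 1):
            rows = fetch_page(page)
            if not rows:  # an empty page usually means we ran past the last one
                break
            writer.writerows(rows)
            f.flush()  # push each page to disk so partial results survive a crash
            written += len(rows)
    return written
```

The `max_pages` cap is a safety net for sites that keep returning the last page instead of an empty one.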

What should I do if the website uses JavaScript to load data?

Use tools that can run JavaScript, like Selenium or Playwright. Static scrapers like requests or Power Query won’t work here. You’ll need a headless browser to fully render the content.

Is it legal to scrape data from any website?

It depends. Public content is generally fair game, but scraping private or gated content can violate laws or terms of service. Always check robots.txt and understand the site's policies before scraping.

How can I schedule automatic data extraction to Excel?

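You can automate data pulls with Power Query's refresh settings (in the query's connection properties, you can refresh every N minutes or refresh when the file opens), or by writing a Python/VBA script triggered by Task Scheduler (Windows) or cron (Mac/Linux). Output the data to an Excel file or a connected CSV.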